I’m currently working as a Machine Learning researcher at the University of Iowa, and the specific modality I’m working with is audio. Since I’m just starting the project, I’ve been reading current state-of-the-art papers and other related work to understand the landscape. The Contrastive Audio-Visual Masked Autoencoder (CAV-MAE) was introduced by researchers from MIT CSAIL and other AI labs. The paper builds on the Masked Autoencoder and allows the audio and visual modalities to be used together.

The Masked Autoencoder (MAE), published in 2022, was state of the art at the time and was the main inspiration for this paper. The paper also states that, prior to its publication, contrastive learning and masked data modeling had not been used together in audio-visual learning. By combining these two methodologies, the model presented in the paper was able to match or outperform SOTA results on audio-visual classification.

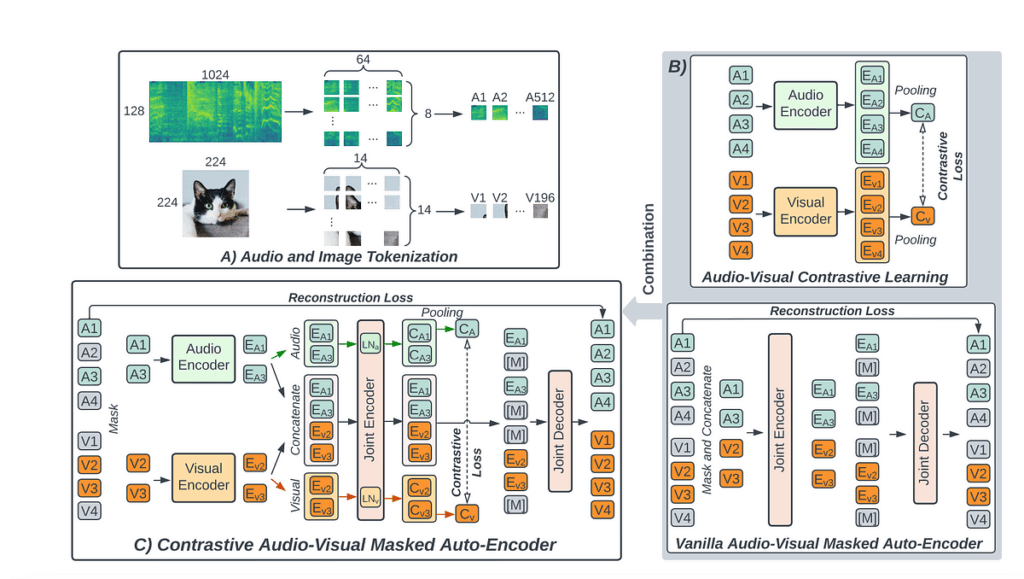

For the visual modality, video frames are encoded with a vanilla Vision Transformer (ViT). For the audio modality, an Audio Spectrogram Transformer (AST) is used. AST was introduced by the same group in 2021 and applies a Transformer directly to spectrogram patches. For pre-training and fine-tuning, it’s standard for audio-visual models to use 10-second video clips with the corresponding audio. The audio waveform is converted into a sequence of 128-dimensional log Mel filterbank features computed with a 25 ms Hanning window every 10 ms. A Hanning window is a tapering function, commonly used in audio processing, that reduces spectral leakage by smoothing each frame toward zero at its edges. The resulting spectrogram (roughly 1,000 frames, padded to 1,024, by 128 frequency bins) is split into 64 × 8 = 512 patches of 16×16 and fed into the model.

To make video processing computationally cheaper, the paper uses a frame aggregation strategy. From each 10-second video clip, 10 RGB frames are uniformly sampled. During training, a single randomly selected RGB frame is used, but during inference, the predictions for all 10 RGB frames are averaged. Each RGB frame is resized and cropped to 224×224, which yields 14 × 14 = 196 square patches of 16×16.
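To make the numbers above concrete, here is a minimal NumPy sketch of the input pipeline. It is not the authors’ code: it assumes a 16 kHz sample rate, a 512-point FFT, a simple triangular mel filterbank (not necessarily the Kaldi-compatible one used in practice), zero-padding to 1,024 frames, a frame already resized to 224×224, and a 25 fps video. All function names are hypothetical.

```python
import numpy as np

# Assumed settings for illustration (not taken from the paper's code).
SR = 16_000            # sample rate in Hz
WIN = int(0.025 * SR)  # 25 ms window -> 400 samples
HOP = int(0.010 * SR)  # 10 ms hop -> 160 samples
N_FFT = 512            # FFT size, next power of two >= WIN
N_MELS = 128           # 128 log-mel bins
TARGET_FRAMES = 1024   # (1024 / 16) * (128 / 16) = 512 patches
PATCH = 16


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels=N_MELS, n_fft=N_FFT, sr=SR):
    """Triangular filters evenly spaced on the mel scale: (n_mels, n_fft // 2 + 1)."""
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (freqs - lo) / (mid - lo)
        falling = (hi - freqs) / (hi - mid)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel(wave):
    """Slice into 25 ms frames every 10 ms, taper with a Hanning window, map to log-mel."""
    n_frames = 1 + (len(wave) - WIN) // HOP
    idx = np.arange(WIN)[None, :] + HOP * np.arange(n_frames)[:, None]
    frames = wave[idx] * np.hanning(WIN)               # taper edges to limit spectral leakage
    power = np.abs(np.fft.rfft(frames, n=N_FFT)) ** 2  # (n_frames, N_FFT // 2 + 1)
    return np.log(power @ mel_filterbank().T + 1e-6)   # (n_frames, N_MELS)


def pad_or_trim(spec, target=TARGET_FRAMES):
    """Zero-pad (or cut) along time so every clip has the same number of frames."""
    if spec.shape[0] >= target:
        return spec[:target]
    return np.pad(spec, ((0, target - spec.shape[0]), (0, 0)))


def patchify(x, p=PATCH):
    """Split an (H, W) or (H, W, C) array into non-overlapping p x p patches, flattened."""
    if x.ndim == 2:
        x = x[..., None]
    h, w, c = x.shape
    x = x.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, p * p * c)


def aggregate_frame_predictions(frame_logits):
    """Inference-time frame aggregation: average the per-frame predictions."""
    return np.asarray(frame_logits).mean(axis=0)


rng = np.random.default_rng(0)
wave = rng.standard_normal(10 * SR)          # stand-in for a 10 s audio clip
spec = log_mel(wave)                         # (998, 128)
audio_patches = patchify(pad_or_trim(spec))  # (512, 256)

frame = rng.random((224, 224, 3))            # one resized RGB frame
visual_patches = patchify(frame)             # (196, 768)

frame_idx = np.linspace(0, 249, 10).round().astype(int)      # 10 uniform picks from 250 frames (assumed 25 fps)
per_frame_logits = rng.standard_normal((10, 5))              # hypothetical 5-class scores per frame
clip_logits = aggregate_frame_predictions(per_frame_logits)  # (5,)
```

The same `patchify` handles both modalities, which mirrors how ViT-style models treat a spectrogram as a one-channel image.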

Contrastive audio-visual learning (CAV) takes an audio-visual pair and feeds each modality into its own independent encoder. After a linear projection into a shared space, a contrastive loss is minimized, which pulls matching audio and visual embeddings together and pushes mismatched pairs apart. We’ll go into more detail on how CAV is implemented in the model in a later section.
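Below is a minimal NumPy sketch of a symmetric InfoNCE loss, a common choice for this kind of audio-visual contrastive objective. The exact form used in CAV-MAE (for example, whether both directions are averaged) may differ; the temperature `tau=0.05`, the identity projections, and the function names are assumptions for illustration.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(logits, axis):
    z = logits - logits.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))


def contrastive_loss(audio_emb, visual_emb, w_a, w_v, tau=0.05):
    """Symmetric InfoNCE over N audio-visual pairs; row i of each input is the same clip.

    audio_emb: (N, D_a) pooled audio-encoder outputs
    visual_emb: (N, D_v) pooled visual-encoder outputs
    w_a, w_v: linear projections into a shared D-dimensional space
    tau: temperature (assumed value)
    """
    a = l2_normalize(audio_emb @ w_a)
    v = l2_normalize(visual_emb @ w_v)
    sim = (a @ v.T) / tau  # (N, N); the diagonal holds the matching pairs
    diag = np.arange(sim.shape[0])
    audio_to_visual = -log_softmax(sim, axis=1)[diag, diag].mean()
    visual_to_audio = -log_softmax(sim, axis=0)[diag, diag].mean()
    return 0.5 * (audio_to_visual + visual_to_audio)


rng = np.random.default_rng(0)
n, d = 8, 32
audio = rng.standard_normal((n, d))
visual = audio + 0.01 * rng.standard_normal((n, d))  # well-aligned pairs
w_a = w_v = np.eye(d)                                 # identity projections for the demo

aligned = contrastive_loss(audio, visual, w_a, w_v)
shuffled = contrastive_loss(audio, np.roll(visual, 1, axis=0), w_a, w_v)
```

With correctly paired clips the loss is close to zero, while shifting the visual batch by one row (so every pair is mismatched) makes it much larger.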

Masking has been a popular method in self-supervised learning for creating a supervisory signal, and in supervised learning for data augmentation. Masked data modeling is a major self-supervised framework, and the masked autoencoder is one approach to it. For an input sample, an MAE masks a portion of the input and feeds only the unmasked portion into a Transformer, which is trained to “reconstruct” the masked tokens by minimizing an MSE loss. This forces the Transformer to learn a meaningful representation of the input data.
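The masking and loss steps can be sketched in a few lines of NumPy. This is a simplified illustration, not MAE’s released code: the function names are hypothetical, the encoder and decoder are omitted, and the “prediction” simply copies the visible tokens so that all of the error comes from the masked positions.

```python
import numpy as np


def random_masking(tokens, mask_ratio, rng):
    """Randomly hide a fraction of tokens; return visible tokens, their indices, and the mask."""
    n = tokens.shape[0]
    n_keep = int(round(n * (1.0 - mask_ratio)))
    keep = np.sort(rng.permutation(n)[:n_keep])  # indices of visible tokens
    mask = np.ones(n, dtype=bool)                # True = masked (to be reconstructed)
    mask[keep] = False
    return tokens[keep], keep, mask


def masked_mse(pred, target, mask):
    """Mean squared error computed only over the masked tokens."""
    per_token = ((pred - target) ** 2).mean(axis=-1)
    return per_token[mask].mean()


rng = np.random.default_rng(0)
tokens = rng.standard_normal((196, 768))  # e.g. 196 image patches of 16x16x3
visible, keep, mask = random_masking(tokens, mask_ratio=0.75, rng=rng)

# Only `visible` would go through the encoder; a decoder would then predict all 196 tokens.
pred = np.zeros_like(tokens)
pred[keep] = tokens[keep]               # pretend the visible tokens are copied perfectly
loss = masked_mse(pred, tokens, mask)   # error comes only from the masked positions
```

With a 75% mask ratio, the encoder sees only 49 of the 196 tokens, which is also why MAE-style pre-training is cheap relative to processing every token.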

Next, the authors combine contrastive learning with an audio-visual masked autoencoder. They tokenize the audio and visual inputs, add modality-specific positional embeddings, and then mask 75% of the tokens. As before, the visible tokens are passed through independent audio and visual encoders. The outputs are then passed through a shared audio-visual joint encoder three times (audio tokens alone, visual tokens alone, and both concatenated), with each pass using its own normalization layers. The single-modality audio and visual streams are used for contrastive learning, and the output of the audio-visual multimodal stream is used for reconstruction. After modality-specific embeddings are added again, the tokens are passed through the decoder to reconstruct the input audio and image, and an MSE loss is minimized. Finally, the contrastive loss and the reconstruction loss are summed to form the CAV-MAE loss.
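The “three passes with different normalization layers” idea is easiest to see in a toy model. The NumPy sketch below is purely illustrative and makes several assumptions: the joint encoder is replaced by a single per-token layer with no self-attention, single-modality outputs are mean-pooled before the contrastive loss, and the contrastive term is scaled by a weight `lambda_c` whose value (0.01) is assumed. All class and function names are hypothetical.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-6):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mu) / np.sqrt(var + eps) + beta


class ToyJointLayer:
    """Stand-in for the shared audio-visual encoder: one weight matrix, three LayerNorms.

    A real joint layer is a Transformer block with self-attention; this toy layer
    only mixes features per token, to show the weight sharing and separate norms.
    """

    def __init__(self, dim, rng):
        self.w = rng.standard_normal((dim, dim)) / np.sqrt(dim)  # shared by all three passes
        # Separate LayerNorm parameters per stream (identical at init, diverge in training).
        self.norms = {s: (np.ones(dim), np.zeros(dim)) for s in ("audio", "visual", "joint")}

    def __call__(self, x, stream):
        gamma, beta = self.norms[stream]
        return layer_norm(x + np.tanh(x @ self.w), gamma, beta)


def three_pass_forward(audio_tokens, visual_tokens, layer):
    """Run the shared layer on audio alone, visual alone, and the concatenation."""
    a_out = layer(audio_tokens, "audio")
    v_out = layer(visual_tokens, "visual")
    av_out = layer(np.concatenate([audio_tokens, visual_tokens], axis=1), "joint")
    # Pooled single-modality outputs feed the contrastive loss (assumed mean pooling);
    # the multimodal tokens go to the decoder for reconstruction.
    return a_out.mean(axis=1), v_out.mean(axis=1), av_out


def cav_mae_loss(recon_loss, contrastive_loss, lambda_c=0.01):
    """Combined objective; the weight lambda_c is an assumed value."""
    return recon_loss + lambda_c * contrastive_loss


rng = np.random.default_rng(0)
batch, dim = 2, 16
audio_vis = rng.standard_normal((batch, 128, dim))  # 25% of 512 audio patches kept
visual_vis = rng.standard_normal((batch, 49, dim))  # 25% of 196 visual patches kept
layer = ToyJointLayer(dim, rng)
a_pooled, v_pooled, av_tokens = three_pass_forward(audio_vis, visual_vis, layer)
```

Sharing the weights while keeping separate normalization lets one encoder handle three differently distributed inputs without tripling the parameter count.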

Overall, the authors drew several main conclusions. First, contrastive learning and masking are complementary and perform better together than separately. Second, multimodal pre-training lets the model perform better on single-modality tasks, since each modality provides more information to the other than a binary label would. Third, when retrieving audio given a video, CAV-MAE outperformed both MAE and CAV alone. Finally, self-supervised pre-training of CAV-MAE is far more computationally efficient than that of the other SOTA models.