Getting back to where it all started, I shall discuss the main idea of "Attention Is All You Need", but before that we need to backtrack to the earliest ideas behind language models.

- Language Models

- Word Representations

- Transformer Model

Language models are considered here with respect to the actual language tasks they were built for, such as classification, sentiment analysis, part-of-speech tagging, text summarisation, question answering, etc.

- Neural Network model (preceded by the Perceptron model) | Back-propagation algorithm

- Recurrent Neural Network model

- Long Short-Term Memory

- Sequence to Sequence

Word representations concern the representation of text itself. Since we cannot feed "text" to a model as raw strings, we needed to resolve the dilemma of expressing natural language in a way computers can understand and interpret.

- TF-IDF

- Bag of Words

- Word2Vec: continuous bag-of-words and continuous skip-gram models

Note: this writing goes over the neural-network-based language models only. Even before neural networks, we had other forms of language models such as probabilistic models (N-gram, Hidden Markov Models, Bayesian language models), dictionary-based models (finite state automata, grammar-based parsers, lexicon engines) and hybrid models.

Starting from Neural Network models: as we know, they take in data at the input layer, pass it through hidden layers and give an output. We compare that output with the true label and perform the back-propagation algorithm to update the internal weights so as to minimise the error between the output label and the true label.
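To make the forward pass and weight update concrete, here is a minimal sketch with a single sigmoid neuron. The squared-error loss, the learning rate, the toy data and the function names are all assumptions chosen for illustration; real networks have many neurons and layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron (weight w, bias b) by gradient descent.

    Assumes a squared-error loss 0.5 * (y_pred - y_true) ** 2.
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y_true in samples:
            y_pred = sigmoid(w * x + b)              # forward pass
            error = y_pred - y_true                  # compare with true label
            grad_z = error * y_pred * (1 - y_pred)   # chain rule through sigmoid
            w -= lr * grad_z * x                     # back-propagated weight update
            b -= lr * grad_z
    return w, b

# Toy data (hypothetical): negative inputs are class 0, positive inputs class 1.
samples = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
w, b = train_neuron(samples)
predictions = [sigmoid(w * x + b) for x, _ in samples]
```

After training, the predictions for the negative inputs fall below 0.5 and those for the positive inputs rise above it.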

However, a key issue with this model is that it is not able to understand the nature of natural language. Human language is sequential by nature, and the simple neural network model above fails to capture that sequential nature, hence it does not provide a good understanding of language itself.

To overcome this issue, Recurrent Neural Networks came into play. These models work similarly to a neural network in terms of forward and backward propagation. The key element here, however, is that we now send the input with respect to time: we feed the model one word at a time, and at each time step we receive an output from the model.

Each X_i is the input at time step i, and each O_i is the output from the model at time step i.
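The time-step behaviour can be sketched with a toy recurrent cell that keeps a single scalar hidden state. The weights `w_x`, `w_h`, `w_o` and the `tanh` activation are assumptions for illustration, not values from any particular model.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, w_o=1.0):
    """Run a scalar RNN over a sequence, returning one output O_i per input X_i."""
    h = 0.0                       # hidden state carried between time steps
    outputs = []
    for x_i in inputs:            # one input per time step
        h = math.tanh(w_x * x_i + w_h * h)   # new state depends on input and old state
        outputs.append(w_o * h)              # output at this time step
    return outputs

first = rnn_forward([1.0, 0.0])
second = rnn_forward([0.0, 1.0])
```

The same two inputs in a different order give different outputs, which is the sequential behaviour a plain feed-forward network lacks.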

Training a Recurrent Neural Network works from the final output Y, which is compared with the true label from the training data. Again we perform the back-propagation algorithm, which first updates A_l (the last weights in the model) with respect to the error gradient, then A_{l-1}, A_{l-2}, and so on until A_1.

As we can see, there is another issue with this model: the vanishing gradient problem. The error gradient is passed backwards step by step, and at every step it is multiplied by another factor. When these factors are small, the gradient that is still usable at the last steps has become very small by the time it reaches the initial steps, hence their updates are insignificant. RNNs struggle with this problem, where the information in the error gradient gets lost during training of the model.
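A small numerical sketch shows how quickly the gradient shrinks. It reuses a scalar `tanh` recurrence (an assumption, as are the weights `w_x` and `w_h`) and computes how strongly the last hidden state depends on each earlier hidden state.

```python
import math

def gradient_to_earlier_steps(inputs, w_x=0.5, w_h=0.8):
    """Return grads where grads[t] = d(h_last) / d(h_t) for a scalar tanh RNN."""
    hidden = []
    h = 0.0
    for x in inputs:                          # forward pass, storing every state
        h = math.tanh(w_x * x + w_h * h)
        hidden.append(h)
    grads = [1.0]                             # gradient of the last state w.r.t. itself
    for h_k in reversed(hidden[1:]):          # walk backwards through time
        # each step multiplies by w_h * tanh'(.) = w_h * (1 - h_k^2)
        grads.append(grads[-1] * w_h * (1 - h_k ** 2))
    grads.reverse()
    return grads

grads = gradient_to_earlier_steps([1.0] * 30)
```

Every backward step multiplies by a factor below 1 here, so the influence of the first step on the last output is orders of magnitude smaller than that of the final steps.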

To overcome this further problem, Long Short-Term Memory models were developed. LSTMs introduced different gates to a node in the neural network: a forget gate, an input gate and an output gate. In summary, the forget gate decides which part of the memory in the node to forget, the input gate decides which part of the new information should be written to memory, and the output gate decides which part of the memory is output from the cell. This intuitive model implements the following idea: where an RNN completely updates the memory at every step during training, an LSTM keeps a piece of memory and updates it only as required. With that part of the memory retained, we suffer far less from the vanishing gradient problem.
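The three gates can be sketched for a single scalar cell. This is a simplified illustration: the parameter names in the dictionary `p` are hypothetical, and real LSTMs use weight matrices and vectors rather than scalars.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p holds the (hypothetical) parameters."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate memory
    c = f * c_prev + i * g        # keep part of the old memory, add part of the new
    h = o * math.tanh(c)          # expose part of the memory as output
    return h, c

# With the forget gate almost fully open and the input gate almost closed,
# the cell state is carried over nearly unchanged.
keep = {"wf": 0.0, "uf": 0.0, "bf": 10.0,
        "wi": 0.0, "ui": 0.0, "bi": -10.0,
        "wo": 0.0, "uo": 0.0, "bo": 0.0,
        "wg": 0.0, "ug": 0.0, "bg": 0.0}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, p=keep)
```

Because the cell state is updated additively (`f * c_prev + i * g`) rather than being overwritten, memory can survive many steps when the forget gate stays close to 1.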

In the domain of LSTMs, an important model called Sequence to Sequence came into play. The idea was to use one LSTM to read the input sequence, one time step at a time, to obtain a large fixed-dimensional vector representation, and then to use another LSTM to extract the output sequence from that vector. The second LSTM is essentially an RNN language model, except that it is conditioned on the input sequence.

However, the thing to notice is that even with the introduction of gates, the vanishing gradient problem was not completely eliminated, for the following reasons:

- Sigmoid non-linearity: when the inputs to the gates are very large in magnitude (strongly negative or strongly positive), the sigmoid saturates and its gradient vanishes.

- Multiplicative shrinking: in the LSTM, as we saw, the previous cell state is multiplied by the forget gate. If these values are very small, the cell state shrinks over time.

- In practice, we truncate back-propagation through time, i.e. the error gradients do not reach the initial cells, which limits long-term memory.

The Seq2Seq model laid the foundation for the encoder-decoder architecture of language models: an "encoder" encodes the input data into a certain form and passes it through the model, and a "decoder" decodes the processed vector into human natural language.

One of the earliest word representation methods was TF-IDF (Term Frequency-Inverse Document Frequency). It helps us compute the relevance of a word in a text, or how important a word is. It was used in tasks such as information retrieval, basic text analysis and text summarization. However, it does not capture the semantic meaning of words effectively.
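As a rough sketch of how such a relevance score can be computed, the snippet below uses one common unsmoothed variant, tf = count / document length and idf = log(N / document frequency). Other variants exist, and the toy documents are made up for illustration.

```python
import math

def tf_idf(docs):
    """Return one {term: score} dict per tokenised document."""
    n_docs = len(docs)
    doc_freq = {}
    for doc in docs:
        for term in set(doc):                 # count each term once per document
            doc_freq[term] = doc_freq.get(term, 0) + 1
    scores = []
    for doc in docs:
        doc_scores = {}
        for term in set(doc):
            tf = doc.count(term) / len(doc)               # term frequency
            idf = math.log(n_docs / doc_freq[term])       # inverse document frequency
            doc_scores[term] = tf * idf
        scores.append(doc_scores)
    return scores

docs = [["the", "cat", "sat"],
        ["the", "dog", "barked"],
        ["the", "cat", "purred"]]
scores = tf_idf(docs)
```

A word such as "the", which appears in every document, gets a score of zero, while a word unique to one document ("sat") scores higher than one shared by two documents ("cat").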

Bag of Words is another technique, in which each document is represented by a vector whose entries are the counts of the words in that document.
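A minimal sketch of the counting step, assuming the documents are already tokenised into lists of words (the helper name `bag_of_words` and the example sentences are illustrative):

```python
def bag_of_words(docs):
    """Return the sorted vocabulary and one count vector per document."""
    vocab = sorted({word for doc in docs for word in doc})
    index = {word: i for i, word in enumerate(vocab)}
    vectors = []
    for doc in docs:
        counts = [0] * len(vocab)
        for word in doc:
            counts[index[word]] += 1      # word order is discarded, only counts remain
        vectors.append(counts)
    return vocab, vectors

vocab, vectors = bag_of_words([
    ["the", "cat", "saw", "the", "dog"],
    ["the", "dog", "barked"],
])
# vocab   -> ['barked', 'cat', 'dog', 'saw', 'the']
# vectors -> [[0, 1, 1, 1, 2], [1, 0, 1, 0, 1]]
```

Note that "the cat saw the dog" and "the dog saw the cat" would produce the same vector, which is one reason this representation misses meaning.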

Still, the ability to capture the semantic meaning of words was missing. In 2013, Word2Vec was developed by Google. It implemented one major idea that filled this gap in word representation: words that appear in similar contexts should be closer in vector representation. This meant that words like "school", "teacher" and "student" should be semantically close in vector representation, while words like "war" and "pizza" should be far apart.

It uses the cosine similarity metric to measure semantic similarity. Cosine similarity is equal to cos θ, where θ is the angle between the vector representations of the two words.

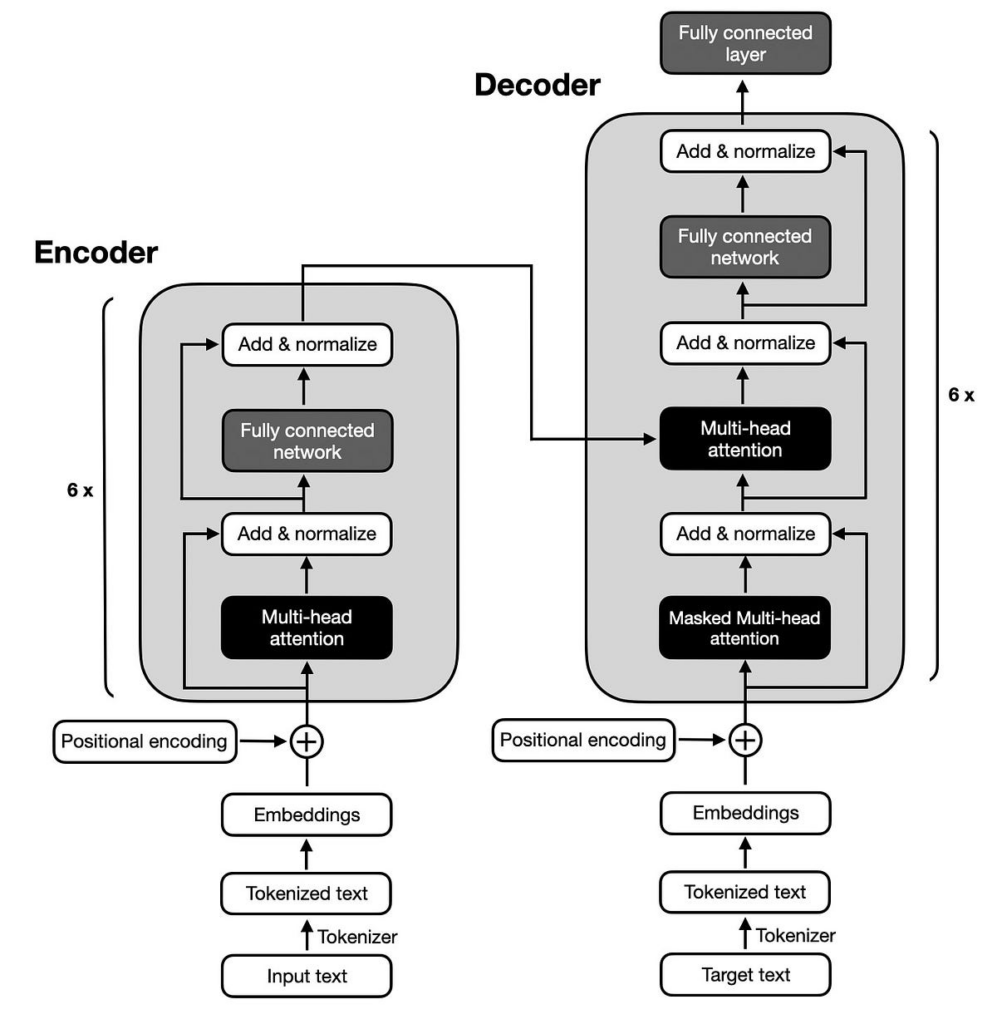
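Cosine similarity is short enough to write out directly. The three-dimensional word vectors below are made-up toy values, not real Word2Vec embeddings, which typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """cos(theta) between vectors u and v: dot product divided by the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings for illustration only.
school = [0.9, 0.8, 0.1]
teacher = [0.8, 0.9, 0.2]
pizza = [0.1, 0.2, 0.9]

close = cosine_similarity(school, teacher)
far = cosine_similarity(school, pizza)
```

With these toy vectors, `close` is near 1 and `far` is much smaller, matching the intuition that related words point in similar directions.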

Now, building upon these foundations, we can discuss the core idea of the Transformer model. The two major breakthroughs of the Transformer are:

- Parallelization: in all the previous models we have seen, we input text with respect to time step, one word at a time. Transformers, on the contrary, take the input text in parallel. Since there is then no notion of "which word comes where in the sequence?", we use positional embeddings to know the order of the words.

- Attention Mechanism: the "attention" for an output word t depends on the attention given to all the input words before t. For every output word, the model asks "which words in the sentence should I pay attention to when making sense of the current word?"

The core attention mechanism is built on three components:

- Query (Q): what is this word asking for?

- Key (K): what does each word offer?

- Value (V): the actual information each word provides.

The attention score gives us the context of each word, and how much attention we have to pay to every other word depending on the sentence.
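The "Attention Is All You Need" paper defines scaled dot-product attention as softmax(QKᵀ / √d_k) V. Below is a small pure-Python sketch of that formula. The tiny matrices are made-up values, and a real implementation would use a tensor library and learned projections to produce Q, K and V.

```python
import math

def softmax(xs):
    m = max(xs)                                # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)              # attention weights sum to 1
        # weighted sum of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = attention(Q, K, V)
```

The query matches the first key more closely, so the first value vector receives the larger weight and dominates the output.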

In transformers we use Multi-Head Self-Attention, which allows us to look at the sentence from different perspectives (grammar, meaning).

The transformer uses multi-head attention in three ways:

- In "encoder-decoder attention" layers, the queries come from the previous decoder layer, and the keys and values come from the output of the encoder. This allows every position in the decoder to attend over all positions in the input sequence (similar to the seq2seq model).

- Each position in the encoder can attend to all positions in the previous layer of the encoder (self-attention over the whole input).

- Similarly, self-attention in the decoder allows each position in the decoder to attend to all positions in the decoder up to and including the current position.
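The "up to and including the current position" restriction in the decoder is usually implemented as a causal mask. A hedged sketch, assuming the raw attention scores are already computed as a square list of lists (`scores[i][j]` is how strongly position i attends to position j):

```python
import math

def causal_attention_weights(scores):
    """Softmax each row over positions 0..i only; later positions get weight 0."""
    n = len(scores)
    weights = []
    for i in range(n):
        allowed = scores[i][: i + 1]           # position i may only see 0..i
        m = max(allowed)
        exps = [math.exp(s - m) for s in allowed]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
    return weights

# With equal raw scores, each position spreads attention evenly over what it can see.
weights = causal_attention_weights([[0.0] * 3 for _ in range(3)])
```

The first position can only attend to itself, the second splits its attention over two positions, and no position ever puts weight on a later one.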

Word Representation in Transformers

It has two major components:

- Token Embeddings: what a word means

  - each token ("hello", "un-", "##ys") is mapped to a fixed-size vector.

  - these vectors are learned during training, similar to word2vec, but with context and task-specific supervision. After the attention layers, the same word gets different vectors depending on its context.

- Positional Embeddings: where the word is in the sentence.

Together, the token and positional embeddings are the input to the encoder of the Transformer model.
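One way to build positional information is the sinusoidal encoding from the original paper, which is added element-wise to the token embeddings. The sketch below assumes that scheme; many later models learn positional embeddings instead. The token vectors are placeholder values.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(token_embeddings):
    """Add the positional encoding to each token embedding, element-wise."""
    d_model = len(token_embeddings[0])
    table = positional_encoding(len(token_embeddings), d_model)
    return [[t + p for t, p in zip(tok, pos)]
            for tok, pos in zip(token_embeddings, table)]

# The same (placeholder) token embedding at two different positions.
same_word = [0.5, 0.5, 0.5, 0.5]
encoder_input = add_positions([same_word, same_word])
```

Although both tokens start with the same embedding, the encoder input differs at the two positions, which is how the model recovers word order despite processing all tokens in parallel.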

Why did the idea of self-attention become so revolutionary?

- The total computational complexity per layer is O(n²) in the sequence length n (an increase compared to the O(n) of RNN/LSTM), but in return each token gets a global view of the whole sequence and not just a window.

- The amount of computation that can be parallelized, as measured by the minimum number of sequential operations required, is far larger, so transformers can be trained much faster using GPU parallelism.

- The path length between long-range dependencies was a big issue in RNN/LSTMs: the information passed step by step, which resulted in the vanishing gradient problem. In transformers, each token directly attends to every other token, which makes long-range dependencies much easier to learn and avoids the gradient having to travel through every intermediate time step.

One interesting thing to note is that the original Transformer architecture was designed with both an encoder and a decoder. But today's most popular language models often use only one part of that architecture: either just the encoder, or just the decoder, depending on the task.

Full architecture: used primarily for machine translation and other sequence-to-sequence tasks.

Encoder only: bidirectional (sees both left and right context). Examples are BERT, RoBERTa and DistilBERT, used for text classification, named entity recognition, question answering, etc.

Decoder only: autoregressive, generating one token at a time using the previous tokens as context. Examples are the GPT models, LLaMA and Claude, used for language generation, summarisation and code generation.

- Attention Is All You Need: https://arxiv.org/pdf/1706.03762

- LSTM: https://dl.acm.org/doi/10.5555/2998981.2999048

- Sequence to Sequence: https://arxiv.org/abs/1409.3215

- Word2Vec: https://arxiv.org/abs/1301.3781