In earlier articles, we focused on descriptive statistics and summarizing data. Now we dive into probability, the glue that holds inferential statistics together. We'll also explore common distributions (with a spotlight on the normal distribution) and wrap up with practical data preprocessing techniques, showing how theory meets real-world tasks like handling missing values, detecting outliers, and scaling features for modeling.

Probability helps us quantify uncertainty: the likelihood that an event will occur. In data science, it underpins tasks like predictive modeling, risk assessment, and statistical inference.

1.1 Frequentist vs. Bayesian Interpretation

- Frequentist: Views probability as a long-run frequency. For example, if a coin is fair, the probability of heads is 0.5 because, over many trials, heads will appear about 50% of the time.

- Bayesian: Interprets probability as a degree of belief that you update as new information arrives. For example, if the first 10 coin flips all come up heads, a Bayesian might recalculate the odds that the coin is biased, updating their prior probability with the new evidence.
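Here is a minimal sketch of both views in plain Python. The frequentist half simulates many fair-coin flips and shows the observed frequency settling near 0.5. The Bayesian half assumes a uniform Beta(1, 1) prior on P(heads). That prior is our assumption, since the text does not fix one. It then applies the standard Beta-Binomial conjugate update after 10 heads in a row.

```python
import random

# Frequentist view: probability is the long-run frequency of heads.
random.seed(42)
n_flips = 100_000
heads = sum(random.random() < 0.5 for _ in range(n_flips))
long_run_freq = heads / n_flips
print(f"Observed frequency of heads: {long_run_freq:.3f}")  # close to 0.5

# Bayesian view: a Beta(alpha, beta) prior over p = P(heads).
# Assumption: a uniform Beta(1, 1) prior. With a binomial likelihood the
# posterior is Beta(alpha + heads, beta + tails) (conjugate update).
alpha_prior, beta_prior = 1, 1
observed_heads, observed_tails = 10, 0
alpha_post = alpha_prior + observed_heads
beta_post = beta_prior + observed_tails
posterior_mean = alpha_post / (alpha_post + beta_post)
print(f"Posterior mean of P(heads): {posterior_mean:.3f}")  # 11/12 ≈ 0.917
```

A more skeptical prior, such as Beta(50, 50), would move the posterior far less after the same 10 heads.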

1.2 Simple Probability Examples

Dice: Probability of rolling a 4 with a fair six-sided die: P(4) = 1/6 ≈ 0.167

Cards: Probability of drawing an Ace from a standard 52-card deck: P(Ace) = 4/52 = 1/13 ≈ 0.077

Coin Tosses: Probability of getting two heads in two independent flips: P(HH) = 1/2 × 1/2 = 1/4
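These three results can be checked by brute-force enumeration: list every equally likely outcome and count the favorable ones. The helper name `probability` is hypothetical and used only in this sketch. Exact fractions avoid floating-point rounding.

```python
from fractions import Fraction
from itertools import product

def probability(outcomes, event):
    """Classical probability: favorable outcomes / total equally likely outcomes."""
    outcomes = list(outcomes)
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))

# Fair six-sided die.
die = range(1, 7)
p_four = probability(die, lambda face: face == 4)

# Standard 52-card deck: 13 ranks x 4 suits.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = list(product(ranks, suits))
p_ace = probability(deck, lambda card: card[0] == "A")

# Two fair coin flips: HH, HT, TH, TT.
flips = product("HT", repeat=2)
p_two_heads = probability(flips, lambda pair: pair == ("H", "H"))

print(p_four, p_ace, p_two_heads)  # 1/6 1/13 1/4
```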

1.3 Probability Distributions (Overview)

A probability distribution describes how probabilities are distributed over all possible outcomes of a random variable. Examples include:

- Discrete: Binomial, Poisson, Geometric

- Continuous: Normal (Gaussian), Exponential, etc.
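As a rough sketch, here are a few of these distributions written with only the standard library (`math` and `statistics.NormalDist`). The function names are our own. In practice, `scipy.stats` offers ready-made `binom`, `poisson`, `geom`, `norm`, and `expon` objects. The checks show two things: a discrete PMF sums to 1, while a continuous density only yields probabilities through areas.

```python
import math
from statistics import NormalDist

# Discrete distributions: probability mass functions (PMFs).
def binomial_pmf(k, n, p):
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    return lam**k * math.exp(-lam) / math.factorial(k)

def geometric_pmf(k, p):
    # Number of trials up to and including the first success (k >= 1).
    return (1 - p) ** (k - 1) * p

# Continuous distributions: probability density functions (PDFs).
def exponential_pdf(x, rate):
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

normal = NormalDist(mu=0, sigma=1)

# A discrete PMF sums to 1 over its support.
binom_total = sum(binomial_pmf(k, 10, 0.3) for k in range(11))
poisson_total = sum(poisson_pmf(k, 4.0) for k in range(100))  # tail beyond 99 is negligible
print(round(binom_total, 6), round(poisson_total, 6))  # 1.0 1.0

# A density value is not a probability; probabilities are areas (CDF differences).
print(normal.pdf(0))                   # peak height of the standard normal, ≈ 0.3989
print(normal.cdf(1) - normal.cdf(-1))  # P(-1 < Z < 1) ≈ 0.6827
```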

Although many distributions exist, the normal (Gaussian) distribution is paramount in statistics.

2.1 Normal (Gaussian) Distribution

- Bell Curve: Symmetric around the mean.

- Parameters: Fully described by its mean μ and standard deviation σ.

- Why central? The Central Limit Theorem states that sums (or averages) of many independent, identically distributed random variables with finite variance tend to be approximately normally distributed, even when the original variables are not normal.

It is commonly used in hypothesis testing, confidence intervals, and regression analysis.
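A quick simulation can make the Central Limit Theorem concrete. The sketch below is an illustration, not a proof. It averages 30 draws from a flat uniform distribution and repeats that 10,000 times. It then compares the spread of those averages with the CLT prediction σ/√n.

```python
import random
import statistics

random.seed(0)

# Individual values come from a uniform distribution on [0, 1] (flat, not bell-shaped).
# Its mean is 0.5 and its variance is 1/12.
n = 30                # values averaged per sample
num_samples = 10_000  # number of sample means to collect

sample_means = [
    statistics.fmean(random.random() for _ in range(n))
    for _ in range(num_samples)
]

# CLT prediction: sample means are approximately normal with
# mean 0.5 and standard deviation sqrt(1/12) / sqrt(n).
predicted_sd = (1 / 12) ** 0.5 / n**0.5
print(f"mean of sample means: {statistics.fmean(sample_means):.4f}  (predicted 0.5)")
print(f"sd of sample means:   {statistics.stdev(sample_means):.4f}  (predicted {predicted_sd:.4f})")

# Share of sample means within 1 predicted sd of 0.5; about 68% if roughly normal.
within_1sd = sum(abs(m - 0.5) < predicted_sd for m in sample_means) / num_samples
print(f"within ±1 sd: {within_1sd:.3f}")
```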

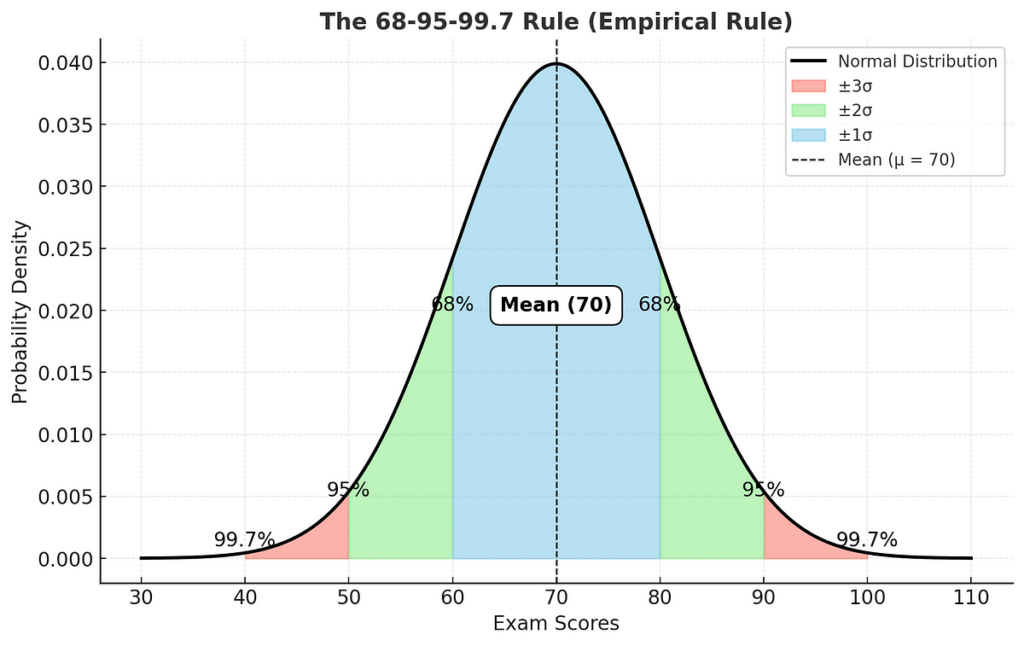

2.2 The 68–95–99.7 Rule (Empirical Rule)

For a normal distribution:

- ~68% of data falls within ±1σ of the mean.

- ~95% within ±2σ.

- ~99.7% within ±3σ.
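The rounded percentages come straight from the normal CDF. Here is a short check with the standard library's `statistics.NormalDist`:

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0, sigma=1)

# Probability mass within k standard deviations of the mean: P(-k < Z < k).
shares = {k: std_normal.cdf(k) - std_normal.cdf(-k) for k in (1, 2, 3)}
for k, share in shares.items():
    print(f"within ±{k}σ: {share:.4f}")
# within ±1σ: 0.6827
# within ±2σ: 0.9545
# within ±3σ: 0.9973
```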

Example: If exam scores in a class are normally distributed with a mean of 70 and a standard deviation of 10:

- About 68% of students scored between 60 and 80.

- About 95% scored between 50 and 90.

- Almost all (99.7%) scored between 40 and 100.
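To see the rule on the exam example, the sketch below simulates a hypothetical class of 10,000 scores from Normal(70, 10). It then compares the simulated shares with the exact probabilities. A normal model puts a small amount of probability above 100, which real exams cap, so it is only an approximation here.

```python
from statistics import NormalDist

# Hypothetical class: exam scores modeled as Normal(mean=70, sd=10).
scores_dist = NormalDist(mu=70, sigma=10)
scores = scores_dist.samples(10_000, seed=1)  # simulated scores, not real data

def share_between(values, low, high):
    """Fraction of values in the closed interval [low, high]."""
    return sum(low <= v <= high for v in values) / len(values)

for low, high in [(60, 80), (50, 90), (40, 100)]:
    simulated = share_between(scores, low, high)
    exact = scores_dist.cdf(high) - scores_dist.cdf(low)
    print(f"{low}-{high}: simulated {simulated:.3f}, exact {exact:.3f}")
```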

Real-world data is rarely “clean.” Issues like missing values, outliers, and inconsistent scales can derail your models. Preprocessing tackles these problems so you can reliably analyze data and build models.

3.1 Standardization (Z-score)

Transform each feature so it has a mean of 0 and a standard deviation of 1: z = (x − μ) / σ.

- When to Use: Many machine learning algorithms assume data is centered around 0.

- Helpful for methods sensitive to scale (e.g., SVM, KNN, and linear regression trained with regularization or gradient descent).

- Outlier Sensitivity: Standardization can still be influenced by outliers, since the mean and standard deviation can shift considerably when outliers are present.
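Here is a minimal NumPy sketch of z-score standardization on a few hypothetical values. It then repeats the calculation with one extreme value appended, to show the outlier sensitivity described above. It uses the population standard deviation (ddof=0), which is also what scikit-learn's `StandardScaler` uses.

```python
import numpy as np

def standardize(x):
    """Z-score: (x - mean) / std, giving mean 0 and standard deviation 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()  # population std (ddof=0)

incomes = np.array([42, 48, 51, 55, 60], dtype=float)  # hypothetical values
z = standardize(incomes)
print(z.round(2))

# One extreme value shifts the mean and inflates the std,
# squeezing the typical points into a narrow band of z-scores.
z_with_outlier = standardize(np.append(incomes, 400))
print(z_with_outlier.round(2))
```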

3.2 Normalization (Min-Max Scaling)

Scales each feature to a fixed range, usually [0, 1]: x′ = (x − min(x)) / (max(x) − min(x)).

- When to Use: Useful in image processing or scenarios where you need values bounded within [0, 1].

- Makes features that span vastly different ranges directly comparable.

- Outlier Sensitivity: Very sensitive to outliers, since max(x) or min(x) might be an extreme value.
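Here is a minimal min-max scaling sketch on hypothetical data. The guard for a constant feature is a design choice of this sketch, since (max − min) would otherwise be zero. The second example shows how a single extreme age compresses all the typical values into a narrow band.

```python
import numpy as np

def min_max_scale(x):
    """Rescale to [0, 1]: (x - min) / (max - min)."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    if span == 0:
        return np.zeros_like(x)  # constant feature: nothing to rescale
    return (x - x.min()) / span

pixel_values = np.array([0, 64, 128, 255])  # hypothetical 8-bit intensities
print(min_max_scale(pixel_values))          # 0 maps to 0.0 and 255 maps to 1.0

ages = np.array([22, 25, 30, 35, 40, 95])   # hypothetical; 95 is an outlier
print(min_max_scale(ages).round(3))         # the five typical ages land in roughly [0, 0.25]
```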

Tip: Robust scalers (e.g., centering on the median and dividing by the IQR) are often used when outliers are a serious concern.
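Here is a sketch of a robust scaler: center on the median and divide by the IQR. scikit-learn's `RobustScaler` does the same by default. Below it is spelled out in NumPy on hypothetical ages that include one outlier.

```python
import numpy as np

def robust_scale(x):
    """Center on the median and divide by the IQR (Q3 - Q1)."""
    x = np.asarray(x, dtype=float)
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    return (x - median) / (q3 - q1)

ages = np.array([22, 25, 30, 35, 40, 95])  # hypothetical; 95 is an outlier
print(robust_scale(ages).round(2))
```

The typical ages now spread over roughly −0.84 to 0.6, while the outlier stands clearly apart at 5.0. The outlier no longer dictates the scale of everything else.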

4.1 Strategies for Addressing NAs (Not Available / Null / NaN)

Dropping:

- Row-wise: Remove rows that contain missing data.

- Column-wise: Remove entire columns if the missing rate is too high.

- Downside: Potentially losing a lot of data, which can bias results.
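Here is a small pandas sketch of both dropping strategies on hypothetical data. The 50% cut-off for columns is illustrative only.

```python
import numpy as np
import pandas as pd

# Hypothetical data with gaps.
df = pd.DataFrame({
    "age":    [25, np.nan, 31, 47, 52],
    "salary": [50_000, 62_000, np.nan, 81_000, 90_000],
    "bonus":  [np.nan, np.nan, np.nan, 5_000, np.nan],  # 80% missing
})

missing_rate = df.isna().mean()  # share of missing values per column
print(missing_rate)

# Row-wise: keep only complete rows.
rows_dropped = df.dropna()
print(len(rows_dropped))  # only 1 of 5 rows survives, which illustrates the downside

# Column-wise: drop columns whose missing rate exceeds a chosen cut-off.
max_missing = 0.5  # illustrative cut-off, not a standard value
cols_dropped = df.loc[:, missing_rate <= max_missing]
print(list(cols_dropped.columns))  # ['age', 'salary']
```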

Filling (Imputation):

- Mean/Median Imputation: Replace missing values with the feature's mean or median.

- Mode Imputation (for categorical data): Replace missing values with the most frequent category.

- Interpolation (time series): Estimate missing points from the trend in neighboring points.

- Advanced Methods: KNN imputation, MICE (Multiple Imputation by Chained Equations), model-based imputation.
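Here is a pandas sketch of the simpler imputation methods on hypothetical data. Note how the single very high salary pulls the mean far above the median.

```python
import numpy as np
import pandas as pd

# Hypothetical data.
df = pd.DataFrame({
    "salary": [50_000, np.nan, 58_000, 61_000, 250_000],  # one very high earner
    "city": ["Lyon", "Paris", None, "Paris", "Nice"],
})
daily_sales = pd.Series([100.0, np.nan, np.nan, 130.0],
                        index=pd.date_range("2024-01-01", periods=4, freq="D"))

mean_filled = df["salary"].fillna(df["salary"].mean())      # 104,750: pulled up by the outlier
median_filled = df["salary"].fillna(df["salary"].median())  # 59,500: robust to the outlier
mode_filled = df["city"].fillna(df["city"].mode()[0])       # most frequent category: "Paris"
interpolated = daily_sales.interpolate()                    # linear between neighboring points

print(mean_filled[1], median_filled[1], mode_filled[2])
print(interpolated.round(6).tolist())  # [100.0, 110.0, 120.0, 130.0]
```

For the advanced methods, scikit-learn provides `sklearn.impute.KNNImputer` and `IterativeImputer`. `IterativeImputer` is inspired by MICE and is still experimental: it must be enabled with `from sklearn.experimental import enable_iterative_imputer`.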

Leave as a Separate Category:

- For categorical variables, you can sometimes treat “missing” as its own category (e.g., “unknown”) if the missingness itself carries meaning.
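In pandas, keeping missingness as its own category takes one line. The label "Unknown" is an arbitrary choice in this sketch, and the data are hypothetical.

```python
import pandas as pd

# Hypothetical categorical column with gaps.
employment = pd.Series(["full-time", None, "part-time", None, "contract"])

# Treat "missing" as an explicit category instead of guessing a value.
employment_filled = employment.fillna("Unknown")
print(employment_filled.value_counts())
```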

Instance

- If your dataset has 2% missing “salary” values, you might choose mean imputation (or median, if salaries are skewed). If 50% are missing, consider dropping the column or using advanced imputation, depending on the context of the problem.
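One way to encode this kind of judgment is a small triage helper. Both the function `suggest_missing_strategy` and its 5% / 40% cut-offs are hypothetical choices for illustration; the right thresholds depend on the problem.

```python
import numpy as np
import pandas as pd

def suggest_missing_strategy(series, low=0.05, high=0.40):
    """Rough triage by missing rate. The cut-offs are illustrative assumptions."""
    rate = series.isna().mean()
    if rate == 0:
        return "nothing to do"
    if rate <= low:
        return "simple imputation (mean/median)"
    if rate <= high:
        return "advanced imputation (e.g., KNN, MICE)"
    return "consider dropping the column or advanced imputation"

salary_2pct = pd.Series([np.nan] * 2 + [50_000.0] * 98)    # 2% missing
salary_50pct = pd.Series([np.nan] * 50 + [50_000.0] * 50)  # 50% missing
print(suggest_missing_strategy(salary_2pct))
print(suggest_missing_strategy(salary_50pct))
```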

Outliers are data points that differ significantly from the rest. They can skew summary statistics and degrade model performance.

5.1 IQR Method

Recall: IQR = Q3 − Q1, the spread of the middle 50% of the data.

Points are outliers if they lie below Q1 − 1.5×IQR or above Q3 + 1.5×IQR.

- Pros: Simple and widely used (it is the rule behind box plots).

- Cons: A rigid rule of thumb that might be too strict or too lenient for certain distributions.
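Here is a NumPy sketch of the IQR rule on hypothetical data. Quartile conventions differ slightly between tools (this uses NumPy's default linear interpolation), so fence values can vary a little.

```python
import numpy as np

def iqr_outliers(x, k=1.5):
    """Boolean mask of points outside [Q1 - k*IQR, Q3 + k*IQR]."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (x < lower) | (x > upper)

data = np.array([12, 14, 14, 15, 16, 17, 18, 19, 45])  # hypothetical; 45 looks suspicious
mask = iqr_outliers(data)
print(data[mask])  # [45]
```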

5.2 Z-score Method

- Threshold: Data points with |z| > 2 or |z| > 3 are often considered outliers.

- Pros: Good for (near-)normal distributions.

- Cons: Less robust if the distribution is heavily skewed, and extreme values inflate the very mean and standard deviation used to compute z.
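Here is the same idea with z-scores, on simulated data with one injected extreme value. One caveat: with the population standard deviation used here, no point can have |z| greater than √(n − 1). So in a sample of 10 or fewer values, a |z| > 3 rule can never flag anything.

```python
import numpy as np

def zscore_outliers(x, threshold=3.0):
    """Boolean mask of points whose |z| exceeds the threshold."""
    x = np.asarray(x, dtype=float)
    z = (x - x.mean()) / x.std()  # population std (ddof=0)
    return np.abs(z) > threshold

rng = np.random.default_rng(0)
values = np.append(rng.normal(loc=50, scale=5, size=200), 95.0)  # simulated; last value injected
flagged = np.flatnonzero(zscore_outliers(values))
print(flagged)  # indices of flagged points; includes 200, the injected value
```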

5.3 Visual Methods

Box Plot

Outliers appear as points beyond the whiskers.

Scatter Plot

Outliers appear as points far from the main cluster.
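Here is a matplotlib sketch of both plots on a small hypothetical dataset. The `Agg` backend renders off-screen; drop that line in a notebook. The `fliers` returned by the box plot are exactly the points drawn beyond the whiskers.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; remove in a notebook
import matplotlib.pyplot as plt
import numpy as np

data = np.array([12, 14, 14, 15, 16, 17, 18, 19, 45])  # hypothetical

fig, (ax_box, ax_scatter) = plt.subplots(1, 2, figsize=(8, 3))

# Box plot: points beyond the whiskers (1.5 x IQR by default) are drawn as "fliers".
bp = ax_box.boxplot(data)
ax_box.set_title("Box plot")

# Scatter plot: the outlier sits far from the main cluster.
ax_scatter.scatter(range(len(data)), data)
ax_scatter.set_title("Scatter plot")
fig.tight_layout()

fliers = bp["fliers"][0].get_ydata()
print(fliers)  # [45]
```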

5.4 Advanced Outlier Detection Methods

For complex, high-dimensional, or non-normal data, more advanced models can improve detection:

Isolation Forest (IF)

Detects anomalies by randomly partitioning the data and measuring how quickly a point becomes isolated: anomalies need fewer random splits.

- Pros: Works well in high dimensions and is efficient for large datasets.

- Cons: Requires hyperparameter tuning to control sensitivity.
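Here is a minimal scikit-learn sketch on simulated 5-dimensional data with one injected anomaly. `contamination`, the assumed share of anomalies, is the main knob controlling sensitivity. The value 0.01 here is an arbitrary choice.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(42)
X = rng.normal(0, 1, size=(300, 5))       # simulated "normal" points in 5 dimensions
X = np.vstack([X, np.full((1, 5), 6.0)])  # one injected anomaly, far from the rest

# contamination = assumed share of anomalies; it sets the decision threshold.
iso = IsolationForest(n_estimators=200, contamination=0.01, random_state=0)
labels = iso.fit_predict(X)               # -1 = anomaly, 1 = normal
scores = iso.score_samples(X)             # lower = more anomalous (isolated in fewer splits)

print(np.flatnonzero(labels == -1))
print(np.argmin(scores))                  # index of the most anomalous point
```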

Local Outlier Factor (LOF)

Compares the local density of a data point to the densities of its neighbors.

- Pros: Effective for density-based anomalies (e.g., fraud detection).

- Cons: Sensitive to parameter settings.
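This scikit-learn sketch shows why LOF is called local. The test point, like all the data here, is hypothetical. It is not far from a tight cluster in absolute terms, but it sits in a much sparser region than that cluster's members, so its LOF score is high. `n_neighbors=20` is the library default and can change results noticeably.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(1)
dense = rng.normal(0, 0.3, size=(100, 2))   # tight cluster
sparse = rng.normal(5, 2.0, size=(100, 2))  # loose cluster
point = np.array([[1.5, 1.5]])              # near the dense cluster, but in a low-density gap
X = np.vstack([dense, sparse, point])

# n_neighbors defines what "local" means.
lof = LocalOutlierFactor(n_neighbors=20)
labels = lof.fit_predict(X)                 # -1 = outlier, 1 = inlier
lof_scores = -lof.negative_outlier_factor_  # about 1 = typical density; much larger = outlier

print(lof_scores[-1].round(2), labels[-1])
```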

One-Class SVM

Uses Support Vector Machines (SVMs) to learn a decision boundary around “normal” data.

- Pros: Works well on non-linear, structured datasets.

- Cons: Computationally expensive; needs careful tuning.
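Here is a sketch with scikit-learn's `OneClassSVM` on simulated ring-shaped data. A ring is a non-linear shape where a single straight boundary would fail. `gamma` and `nu` are illustrative values. `nu` is an upper bound on the share of training points treated as outliers.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(7)

# Simulated "normal" training data: evenly spaced points on a ring of radius 3.
angles = np.linspace(0, 2 * np.pi, 300, endpoint=False)
radii = 3.0 + rng.normal(0, 0.1, size=300)
X_train = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])

# RBF kernel for a curved boundary; nu and gamma usually need tuning.
ocsvm = OneClassSVM(kernel="rbf", gamma=1.0, nu=0.05)
ocsvm.fit(X_train)

X_new = np.array([[3.0, 0.0],   # on the ring
                  [0.0, 0.0]])  # centre of the ring, far from every training point
print(ocsvm.predict(X_new))     # 1 = inlier, -1 = outlier
```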

Autoencoders (Neural Networks)

Train a neural network to reconstruct normal data; a high reconstruction error indicates an anomaly.

- Pros: Great for complex, non-linear outliers (e.g., image or time-series anomalies).

- Cons: Requires large amounts of training data; computationally expensive.
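Real autoencoders are usually deeper networks built with a framework such as PyTorch or Keras. As a small stand-in, this sketch uses scikit-learn's `MLPRegressor` trained to reproduce its own input through a 1-unit bottleneck with identity activation. That makes it a linear autoencoder, close in spirit to PCA. The data and the 99th-percentile error threshold are assumptions for illustration.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(3)

# Simulated "normal" data: 2-D points lying close to the line y = 2x.
t = rng.uniform(-1, 1, size=500)
X_normal = np.column_stack([t, 2 * t + rng.normal(0, 0.05, size=500)])

# 2 inputs -> 1-unit bottleneck -> 2 outputs, trained to reproduce its input.
# A 1-unit bottleneck can only capture the dominant line in the data.
autoencoder = MLPRegressor(hidden_layer_sizes=(1,), activation="identity",
                           solver="lbfgs", max_iter=3000, random_state=0)
autoencoder.fit(X_normal, X_normal)

def reconstruction_error(model, X):
    """Mean squared reconstruction error per row."""
    return np.mean((model.predict(X) - X) ** 2, axis=1)

X_test = np.array([[0.5, 1.0],    # on the line: reconstructed well
                   [0.5, -1.0]])  # off the line: large error, flagged as anomaly
errors = reconstruction_error(autoencoder, X_test)
threshold = np.percentile(reconstruction_error(autoencoder, X_normal), 99)
print(errors > threshold)
```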

Let's combine these concepts into a mini workflow:

6.1 Load Your Data

- Check for missing values, inconsistent data types, and unusual patterns.

- Use summary statistics (`df.describe()`) and visualizations (histograms, pair plots, etc.) to understand distributions and relationships.
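Here is a sketch of a first look at a dataset with pandas. The inline CSV is hypothetical; swap `io.StringIO(csv_text)` for a real file path. It is built to show a common problem: a numeric column read as text because of one bad entry.

```python
import io
import pandas as pd

# Hypothetical CSV contents.
csv_text = """month,region,sales
2024-01,North,9200
2024-02,North,
2024-03,South,11800
2024-04,South,abc
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.dtypes)        # "sales" is read as object (text) because of the stray "abc"
print(df.isna().sum())  # missing values per column

# Coerce to numeric: unparseable entries become NaN so they can be handled explicitly.
df["sales"] = pd.to_numeric(df["sales"], errors="coerce")
print(df.describe())    # count, mean, std, min, quartiles, max for numeric columns
```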

6.2 Handle Missing Values

- If only a small amount of data is missing, consider dropping it. Otherwise, impute with the mean/median or advanced methods.

6.3 Detect Outliers

- Use box plots to visualize outliers.

- Apply the IQR or z-score method to identify them.

- Decide whether to remove, cap, or transform them based on domain knowledge.
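Capping is often gentler than removal. This pandas sketch clips values to the IQR fences. `cap_iqr` is a hypothetical helper name, and the data are made up.

```python
import pandas as pd

def cap_iqr(series, k=1.5):
    """Cap values at the IQR fences instead of removing them."""
    q1, q3 = series.quantile([0.25, 0.75])
    iqr = q3 - q1
    return series.clip(lower=q1 - k * iqr, upper=q3 + k * iqr)

s = pd.Series([12, 14, 14, 15, 16, 17, 18, 19, 45], dtype=float)  # hypothetical
print(cap_iqr(s).tolist())  # 45 is pulled down to the upper fence, 24.0
```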

6.4 Scale Your Features (if needed)

- Standardize if you want a mean of 0 and a standard deviation of 1.

- Normalize if you need [0, 1] scaling and outliers aren't extreme.

- Consider robust scalers if outliers remain a problem.
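In scikit-learn, the three scalers share one interface. Fit the scaler on the training split only, then reuse its parameters on the test split, so test data do not leak into preprocessing. The data in this sketch are simulated.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

rng = np.random.default_rng(0)
X = rng.lognormal(mean=3, sigma=0.5, size=(100, 2))  # simulated, skewed features
X_train, X_test = train_test_split(X, test_size=0.2, random_state=0)

for scaler in (StandardScaler(), MinMaxScaler(), RobustScaler()):
    # Learn the scaling parameters from the training split only.
    X_train_scaled = scaler.fit_transform(X_train)
    # Apply those same parameters to the test split, without refitting.
    X_test_scaled = scaler.transform(X_test)
    print(type(scaler).__name__, X_train_scaled.mean(axis=0).round(2))
```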

6.5 Check the Distribution

- If features approximate a normal distribution, you can use the 68–95–99.7 rule for quick insights.

- For heavily skewed features, transformations (e.g., log) might help.
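Here is a sketch of a log transform on a simulated right-skewed feature. `np.log1p` computes log(1 + x), which stays defined at zero. pandas' `.skew()` reports the sample skewness before and after.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
revenue = pd.Series(rng.lognormal(mean=8, sigma=1, size=1_000))  # simulated right-skewed feature

log_revenue = np.log1p(revenue)  # log(1 + x): defined at 0, requires x > -1
print(f"skew before: {revenue.skew():.2f}, after: {log_revenue.skew():.2f}")
```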

6.6 Feature Engineering and Selection

- Create meaningful features (e.g., Date → extract Year, Month).

- Drop redundant features (e.g., highly correlated ones).

- Use domain knowledge to refine and select the best predictors.
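Here is a pandas sketch of both ideas on hypothetical data. The 0.9 exchange rate and the 0.95 correlation cut-off are illustrative assumptions. The filter keeps the first column of each highly correlated pair and drops the other.

```python
import numpy as np
import pandas as pd

# Hypothetical data.
df = pd.DataFrame({
    "date": pd.to_datetime(["2024-01-15", "2024-02-20", "2024-03-10", "2024-04-05"]),
    "price_usd": [10.0, 12.0, 15.0, 11.0],
    "units": [100, 60, 90, 80],
})
df["price_eur"] = df["price_usd"] * 0.9  # perfectly correlated with price_usd (illustrative rate)

# Create features: extract calendar parts from the date.
df["year"] = df["date"].dt.year
df["month"] = df["date"].dt.month

# Drop one feature from each highly correlated pair (|r| > 0.95, an illustrative cut-off).
numeric = df[["price_usd", "price_eur", "units"]]
corr = numeric.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))  # each pair counted once
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
df = df.drop(columns=to_drop)
print(to_drop, list(df.columns))
```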

Imagine you have monthly sales data (in $) for a small business:

Probability Fundamentals

- You might estimate the probability of exceeding $10,000 in a given month, either from how often that happened historically (Frequentist) or by combining the data with expert forecasts (Bayesian).
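To make the two approaches concrete, here is a sketch on twelve made-up monthly totals (not data from the article). The Beta(2, 6) prior, standing in for an expert forecast of roughly 25%, is also an assumption.

```python
# Hypothetical monthly sales (in $); not real data.
sales = [8200, 9100, 10400, 9700, 11200, 8800, 9500, 10100, 9900, 12500, 13800, 15200]
target = 10_000

# Frequentist: share of past months that exceeded the target.
exceed = sum(s > target for s in sales)
p_frequentist = exceed / len(sales)

# Bayesian: encode the expert forecast as a Beta prior, then update with the data.
# Assumption: Beta(2, 6), i.e. the expert expects about a 25% chance (2 / (2 + 6)).
alpha_prior, beta_prior = 2, 6
alpha_post = alpha_prior + exceed
beta_post = beta_prior + (len(sales) - exceed)
p_bayesian = alpha_post / (alpha_post + beta_post)

print(p_frequentist, p_bayesian)  # 0.5 0.4
```

The Bayesian estimate lands between the expert's 25% and the observed 50%, weighted by how much data each side contributes.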

Distribution Check

- If your sales appear roughly bell-shaped, you can apply the 68–95–99.7 rule to gauge typical ranges.

Preprocessing

- Some months have missing data, so you impute them using the median (robust if outliers exist).

- You notice a few extremely high sales months (holiday boosts) that appear as outliers: the z-score places them beyond 3 standard deviations. You decide whether to keep them or treat them separately.

Scaling

- If you plan to run a machine learning model that is sensitive to scale (like an SVM), you standardize the sales feature before training.
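Finally, here are the preprocessing steps of this scenario in one pandas sketch. The 24 months of sales are invented for illustration, with one missing month and two equal holiday spikes. With this little data, the spikes' z-scores only just clear 3 (about 3.24). pandas' `.std()` is the sample standard deviation.

```python
import numpy as np
import pandas as pd

# Hypothetical 24 months of sales (in $): one missing month, two holiday spikes.
sales = pd.Series([8000.0, 9000.0] * 11 + [30000.0, 30000.0],
                  index=pd.period_range("2023-01", periods=24, freq="M"))
sales.iloc[3] = np.nan  # pretend one month's figure was never recorded

# 1. Impute with the median (robust to the holiday spikes).
sales_filled = sales.fillna(sales.median())

# 2. Standardize the sales feature: z = (x - mean) / std.
sales_std = (sales_filled - sales_filled.mean()) / sales_filled.std()

# 3. The same z-scores flag months beyond 3 standard deviations.
holiday_outliers = sales_filled[sales_std.abs() > 3]
print(holiday_outliers)  # the two holiday months
```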