The Holiday scatter plot tells us that when there is a holiday, transaction density is high, but high sales value transactions can be found during working days.

The same goes for Discount: discount days encourage people to spend more compared with regular transaction days.

Hypothesis testing gives us statistical evidence, at a chosen confidence level, about our assumptions on the data.

χ² test:

The following code performs a χ² test for the given category combinations and reports whether the two categories depend on each other.

Null Hypothesis H0 = Categories are independent of each other

Alternate Hypothesis H1 = Categories are dependent
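To see what `chi2_contingency` computes under H0, here is a minimal worked sketch on a hypothetical 2x2 table (the counts are made up for illustration). Under independence the expected count of cell (i, j) is row total × column total / grand total; the statistic sums (O − E)²/E and is compared with a χ² distribution with (rows − 1)(columns − 1) degrees of freedom. One caveat worth knowing: for a table with one degree of freedom, SciPy applies Yates' continuity correction by default, so `correction=False` is passed here to match the plain formula.

```python
import numpy as np
from scipy.stats import chi2, chi2_contingency

# Hypothetical 2x2 contingency table: rows and columns are two categorical variables
observed = np.array([[120.0, 80.0],
                     [30.0, 70.0]])

# Expected counts under H0 (independence): row total * column total / grand total
row_totals = observed.sum(axis=1, keepdims=True)   # shape (2, 1)
col_totals = observed.sum(axis=0, keepdims=True)   # shape (1, 2)
expected = row_totals @ col_totals / observed.sum()

# Pearson statistic and its upper-tail p-value
stat = ((observed - expected) ** 2 / expected).sum()
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
p_value = chi2.sf(stat, dof)

# SciPy returns the same numbers once Yates' correction is switched off
stat_scipy, p_scipy, dof_scipy, expected_scipy = chi2_contingency(observed, correction=False)
print(stat, p_value)  # stat is 24.0 for this table
```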

```python
import pandas as pd
from itertools import permutations
from scipy.stats import chi2_contingency

def chi2test(data, category1, category2, alpha=0.05):
    # Total Order and Sales for every (category1, category2) combination
    data = data.groupby(by=[category1, category2]).agg({'Order': 'sum', 'Sales': 'sum'}).reset_index()

    # Contingency table of Order totals: category1 values as rows, category2 values as columns
    test = chi2_contingency(data.pivot(index=category1, columns=category2, values='Order').fillna(0))
    order_dependency = test.pvalue < alpha
    if order_dependency:
        print(f'Reject the Null Hypothesis. For Order quantity, {category1} and {category2} are dependent', end=" | ")
    else:
        print(f'Fail to reject the Null Hypothesis. For Order quantity, {category1} and {category2} are independent', end=" | ")
    print(f'Test statistic: {test.statistic},\tp-value: {test.pvalue}')

    # Same test on the Sales totals
    test = chi2_contingency(data.pivot(index=category1, columns=category2, values='Sales').fillna(0))
    sales_dependency = test.pvalue < alpha
    if sales_dependency:
        print(f'Reject the Null Hypothesis. For Sales quantity, {category1} and {category2} are dependent', end=" | ")
    else:
        print(f'Fail to reject the Null Hypothesis. For Sales quantity, {category1} and {category2} are independent', end=" | ")
    print(f'Test statistic: {test.statistic},\tp-value: {test.pvalue}')

    return {'C1': category1, 'C2': category2, 'Order': order_dependency, 'Sales': sales_dependency}

# Perform the Chi-Squared test for every ordered pair of categorical columns
columns = ['Store_Type', 'Location_Type', 'Region_Code', 'Holiday', 'Discount', 'MonthName', 'DayName']
dependancy_summary = pd.DataFrame([chi2test(train, c1, c2) for c1, c2 in permutations(columns, 2)])
```

The result of the χ² test is transformed into a dependency table, where True indicates that the category pair is dependent.
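One way to turn the long summary (one row per ordered pair, with `C1`, `C2` and a boolean per measure) into a square dependency table is `DataFrame.pivot`. This is a sketch under the assumption that the summary has the column layout returned by `chi2test`; the categories `A`, `B`, `C` and their True/False flags are placeholders, not results. Self-pairs are never tested, so the diagonal comes out as NaN.

```python
from itertools import permutations

import pandas as pd

# Placeholder flags for three hypothetical categories (not real test results)
flags = {('A', 'B'): True, ('A', 'C'): False, ('B', 'C'): True}

rows = []
for c1, c2 in permutations(['A', 'B', 'C'], 2):
    # The chi-squared test is symmetric, so (c2, c1) shares the flag of (c1, c2)
    dependent = flags.get((c1, c2), flags.get((c2, c1)))
    rows.append({'C1': c1, 'C2': c2, 'Order': dependent})
summary = pd.DataFrame(rows)

# Square table: rows = C1, columns = C2, cells = dependency flag for Order
order_table = summary.pivot(index='C1', columns='C2', values='Order')
print(order_table)
```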

Mean Similarity Test:

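The following code performs a mean similarity test. It runs a one-way ANOVA if the data satisfy its assumptions (every group normally distributed and equal variances across groups), otherwise the Kruskal-Wallis test (which, strictly, compares distributions rather than means), to check whether all categories have the same mean. If the null hypothesis is rejected, it runs Tukey's HSD and lists the pairs having the same mean and the pairs having different means.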

Null Hypothesis H0 = All categories have the same mean

Alternate Hypothesis H1 = At least one category has a different mean
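For intuition about what `f_oneway` computes when the ANOVA branch is taken, here is a small worked sketch on three hypothetical groups. The F statistic is the between-group mean square over the within-group mean square, F = [SSB / (k − 1)] / [SSW / (N − k)], where k is the number of groups and N the total number of observations; a large F means the group means are far apart relative to the spread inside each group.

```python
import numpy as np
from scipy.stats import f, f_oneway

# Three hypothetical groups (e.g. the target values of three categories)
groups = [np.array([1.0, 2.0, 3.0]), np.array([2.0, 3.0, 4.0]), np.array([6.0, 7.0, 8.0])]
all_values = np.concatenate(groups)
grand_mean = all_values.mean()                      # 4.0
k, n = len(groups), len(all_values)

# Between-group and within-group sums of squares
ssb = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)   # 42.0
ssw = sum(((g - g.mean()) ** 2).sum() for g in groups)              # 6.0

f_stat = (ssb / (k - 1)) / (ssw / (n - k))          # 21.0 / 1.0 = 21.0
p_value = f.sf(f_stat, k - 1, n - k)                 # upper tail of F(k-1, n-k)

# SciPy's one-way ANOVA gives the same statistic and p-value
stat_scipy, p_scipy = f_oneway(*groups)
print(f_stat, p_value)
```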

```python
import functools

import pandas as pd
from scipy.stats import f_oneway, kruskal, anderson, levene
from statsmodels.stats.multicomp import pairwise_tukeyhsd

def decorator(func):
    # Frame the printed report of the wrapped function with separator lines
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        print('~' * 100)
        print('~' * 100)
        result = func(*args, **kwargs)
        print('~' * 100)
        print('~' * 100)
        return result
    return wrapper

@decorator
def variance_test(data, category, target, alpha=0.05):
    d = data.groupby(by=category).agg(Mean=(target, 'mean'), Count=(target, 'size')).reset_index()
    print(f'Hypothesis test whether mean {target} is the same for all {category} or not.\n')
    print(d)
    print('=' * 53)

    # One sample of the target per category value
    cats = sorted(data[category].unique())
    groups = {}
    for cat in cats:
        groups[cat] = data[data[category] == cat][target]

    # Normality check of every group (Anderson-Darling at the 5% level)
    normality_test = True
    print('Criteria check for ANOVA')
    for cat, group in groups.items():
        res = anderson(group, dist='norm')
        # fit_result.success only says the distribution fit converged,
        # so compare the statistic with the 5% critical value instead
        crit_5pct = res.critical_values[list(res.significance_level).index(5.0)]
        if res.statistic > crit_5pct:
            normality_test = False
            print(f'\033[31m \u274C Group {cat} is not normally distributed.\033[0m')
            break
    if normality_test:
        print('\033[32m \u2705 All groups are normally distributed.\033[0m')

    # Levene test for equal variances
    levene_test = True
    _, p_levene = levene(*groups.values())
    if p_levene < alpha:
        levene_test = False
        print('\033[31m \u274C Variances of all groups are not the same.\033[0m')
    else:
        print('\033[32m \u2705 Variances of all groups are the same.\033[0m')

    # Perform one-way ANOVA if the criteria are met, otherwise Kruskal-Wallis
    if normality_test and levene_test:
        print('One-Way ANOVA will be performed.')
        _, p_value = f_oneway(*groups.values())
    else:
        print('Criteria for ANOVA not met. Kruskal test will be performed.')
        _, p_value = kruskal(*groups.values())

    # Proceed to Tukey HSD only if at least one group has a different mean
    if p_value > alpha:
        print(f'p-Value is {p_value} > {alpha} Significance level.\nWe do not have enough evidence to reject the Null Hypothesis. All means are same.')
        print('=' * 53)
        return None

    print(f'p-Value is {p_value} < {alpha} Significance level.\nWe reject the Null Hypothesis. At least one category has a different mean.')
    print('=' * 53)

    tukey = pairwise_tukeyhsd(endog=data[target], groups=data[category], alpha=alpha)
    print(tukey)

    # Extract group1 and group2 using the Tukey object attributes
    group1 = tukey.groupsunique[tukey._multicomp.pairindices[0]]
    group2 = tukey.groupsunique[tukey._multicomp.pairindices[1]]
    pair = [f'{a}-{b}' for a, b in zip(group1, group2)]

    # Combine the pairs and their reject flags into a DataFrame
    group_pairs = pd.DataFrame({'pair': pair, 'reject': tukey.reject})
    same_mean_pairs = group_pairs[~group_pairs['reject']]['pair']
    different_mean_pairs = group_pairs[group_pairs['reject']]['pair']

    print(f'\033[34mPairs having different {target} mean are: {",".join(different_mean_pairs.values)}')
    print(f'\033[35mPairs having same {target} mean are: {",".join(same_mean_pairs.values)}\033[0m')
    return None
```

Two such examples are shown below.

To prepare the data for model building, we aggregate and reshape the data with the standard pandas pd.crosstab() method, then join all the tables on the date index to get one single table that can be used to train the models. We use the SARIMAX family of algorithms from the statsmodels package to train our forecasting models.
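A toy illustration of the reshaping step, assuming long-format data with one row per store per day (the frame and its values are hypothetical, and the date is kept as a column here rather than as the index). `pd.crosstab` with `values` and `aggfunc='sum'` spreads one category into columns, and `pd.concat(axis=1)` aligns the pieces on the date.

```python
import pandas as pd

# Hypothetical long-format transactions: one row per store type per day
toy = pd.DataFrame({
    'Date': pd.to_datetime(['2021-01-01', '2021-01-01', '2021-01-02', '2021-01-02']),
    'Store_Type': ['S1', 'S2', 'S1', 'S2'],
    'Sales': [100.0, 50.0, 120.0, 60.0],
})

# Overall daily total, and one column per store type with its daily total
overall = toy.groupby('Date').agg({'Sales': 'sum'})
by_type = pd.crosstab(index=toy.Date, columns=toy.Store_Type, values=toy.Sales, aggfunc='sum')

# Join everything on the date so each column becomes its own time series
wide = pd.concat([overall, by_type], axis=1)
print(wide)
```

Combinations that never occur in the data come out of `crosstab` as NaN, which is worth checking before fitting a model per column.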

```python
import pandas as pd

# Data preparation for modeling

# Data for Sales forecasting model training
overall_sales = train.groupby(level=0).agg({'Sales': 'sum'})
id_wise_sales = pd.crosstab(index=train.index, columns=train.Store_id, values=train.Sales, aggfunc='sum')
store_type_wise_sales = pd.crosstab(index=train.index, columns=train.Store_Type, values=train.Sales, aggfunc='sum')
location_wise_sales = pd.crosstab(index=train.index, columns=train.Location_Type, values=train.Sales, aggfunc='sum')
region_wise_sales = pd.crosstab(index=train.index, columns=train.Region_Code, values=train.Sales, aggfunc='sum')

# Data for Order forecasting model training
overall_order = train.groupby(level=0).agg({'Order': 'sum'})
id_wise_order = pd.crosstab(index=train.index, columns=train.Store_id, values=train.Order, aggfunc='sum')
store_type_wise_order = pd.crosstab(index=train.index, columns=train.Store_Type, values=train.Order, aggfunc='sum')
location_wise_order = pd.crosstab(index=train.index, columns=train.Location_Type, values=train.Order, aggfunc='sum')
region_wise_order = pd.crosstab(index=train.index, columns=train.Region_Code, values=train.Order, aggfunc='sum')

# Create a single DataFrame for Sales and one for Order
train_sales = pd.concat([overall_sales, id_wise_sales, store_type_wise_sales, location_wise_sales, region_wise_sales], axis=1)
train_order = pd.concat([overall_order, id_wise_order, store_type_wise_order, location_wise_order, region_wise_order], axis=1)

# Daily Holiday flag (mean of the per-store flag), used as the exogenous variable
exog_train_holiday = train.groupby(train.index)['Holiday'].mean()
exog_test_holiday = test.groupby(test.index)['Holiday'].mean()
```

The final table looks like this, and each column will be trained separately.

Before proceeding to model building, understanding the stationarity of the data and reading the ACF/PACF charts gives us a good idea about hyperparameter selection.

Dickey-Fuller Test:

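The Dickey-Fuller test is a statistical method to check whether data is stationary. Its null hypothesis is that the series has a unit root (is non-stationary), so a p-value below the significance level lets us call the series stationary. The following code snippet performs the augmented Dickey-Fuller test and prints whether each time series is stationary.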

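```python
from statsmodels.tsa.stattools import adfuller

def adf_test(dataset, alpha=0.05):
    # alpha: significance level for the ADF test (0.05 assumed)
    print('Results of Dickey-Fuller Test:')
    for column in dataset.columns:
        # adfuller returns a tuple; index 1 is the p-value (missing dates dropped first)
        pvalue = adfuller(dataset[column].dropna())[1]
        if pvalue < alpha:
            print(f'\033[32mTimeseries for "{column}" is stationary', end='.\t')
        else:
            print(f'\033[31mTimeseries for "{column}" is not stationary', end='.')
        print(f'\tp-value is {pvalue}\033[0m')
```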

ACF/PACF Chart:

We can find probable values of the hyperparameters “p” and “q” from the ACF and PACF charts. The cut-off point in the PACF gives a basic idea of the autoregressive order p, whereas the cut-off point in the ACF indicates the moving-average order q. Looking at the ACF/PACF charts for all the proposed time series, we conclude that “p” is 1 and “q” can be either 1 or 2.

```python
import matplotlib.pyplot as plt
import pandas as pd
from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

def acf_pacf_plot(series_sales: pd.Series, series_order: pd.Series) -> None:
    fig, (ax1, ax2, ax3, ax4) = plt.subplots(1, 4, figsize=(14, 5))

    # Plot Sales ACF in the first cell
    plot_acf(series_sales, ax=ax1)
    ax1.set_title(f'ACF for {series_sales.name} Sales')

    # Plot Sales PACF in the second cell
    plot_pacf(series_sales, ax=ax2)
    ax2.set_title(f'PACF for {series_sales.name} Sales')

    # Plot Order ACF in the third cell
    plot_acf(series_order, ax=ax3)
    ax3.set_title(f'ACF for {series_order.name} Order')

    # Plot Order PACF in the fourth cell
    plot_pacf(series_order, ax=ax4)
    ax4.set_title(f'PACF for {series_order.name} Order')

    # Adjust layout
    plt.tight_layout()
    plt.show()

acf_pacf_plot(train_sales["R1"], train_order["R1"])
```

Seasonality:

The seasonality chart can be generated with the following code after decomposing the series. It gives us an idea about the seasonal parameter “S” for model training.

```python
import matplotlib.pyplot as plt
from statsmodels.tsa.seasonal import seasonal_decompose

def seasonal_chart(series_sales, series_order):
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14, 5))

    # Plot Sales seasonality in the first cell
    # period=None: statsmodels infers the period from the index frequency
    result = seasonal_decompose(series_sales, model='additive', period=None)
    result.seasonal.plot(ax=ax1)
    ax1.set_title(f'Seasonality for {series_sales.name} Sales')

    # Plot Order seasonality in the second cell
    result = seasonal_decompose(series_order, model='additive', period=None)
    result.seasonal.plot(ax=ax2)
    ax2.set_title(f'Seasonality for {series_order.name} Order')

    # Adjust layout
    plt.tight_layout()
    plt.show()

seasonal_chart(train_sales["L3"], train_order["L3"])
```

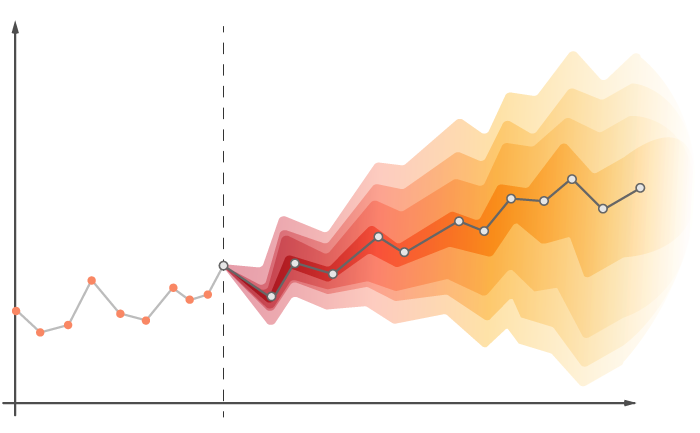

Train-test split:

In time series we cannot split data randomly into train and test sets. Out of all the rows sorted by date, we take the first 80% (or whatever proportion we decide) of the data as train and the trailing 20% as test. Instead of doing this manually every time, we can define a class that splits the data into the required proportion with a single-line command.

```python
from sklearn.base import BaseEstimator, TransformerMixin

class TimeSeriesSplitter(BaseEstimator, TransformerMixin):
    def __init__(self, test_size=0.2):
        self.test_size = test_size
        self.train_data, self.test_data = None, None

    def fit(self, X, y=None):
        """No fitting required; only for compatibility."""
        return self

    def transform(self, X, y=None):
        """Split the single-column time series into train and test sets."""
        n_rows = len(X)
        split_index = int(n_rows * (1 - self.test_size))
        # Leading rows go to train, trailing rows to test; asfreq('D') sets a daily frequency
        self.train_data = X.iloc[:split_index].asfreq('D')
        self.test_data = X.iloc[split_index:].asfreq('D')
        return self.train_data, self.test_data
```
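A side effect of `asfreq('D')` worth knowing: it re-indexes each part onto a regular daily calendar, so any date missing from the data reappears as a NaN row. The function `chronological_split` below is a hypothetical stand-alone sketch of the same split arithmetic, run on ten made-up daily values with one date deliberately missing.

```python
import numpy as np
import pandas as pd

def chronological_split(series: pd.Series, test_size: float = 0.2):
    """Leading (1 - test_size) share of rows as train, trailing rows as test."""
    series = series.sort_index()                       # make sure rows are in date order
    split_index = int(len(series) * (1 - test_size))
    train_part = series.iloc[:split_index].asfreq('D')  # regular daily calendar, gaps become NaN
    test_part = series.iloc[split_index:].asfreq('D')
    return train_part, test_part

# Ten hypothetical daily observations with 2021-01-03 missing on purpose
dates = pd.date_range('2021-01-01', periods=11, freq='D').delete(2)
sales = pd.Series(np.arange(10, dtype=float), index=dates)

train_part, test_part = chronological_split(sales)
print(train_part)
```

Here eight observed days go to train, but the train part has nine rows because the gap on 2021-01-03 is filled with NaN.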

Model Training:

Model training is the most important stage in time series forecasting. As SARIMAX is not a standard scikit-learn algorithm, we have developed a child class of scikit-learn's BaseEstimator that performs operations like fit, predict and score.

```python
import numpy as np
import pandas as pd
from statsmodels.tsa.statespace.sarimax import SARIMAX
from sklearn.base import BaseEstimator
from sklearn.metrics import (make_scorer, mean_squared_error as mse,
                             mean_absolute_error as mae,
                             mean_absolute_percentage_error as mape)

class SARIMAXEstimator(BaseEstimator):
    def __init__(self, order=(1, 0, 1), seasonal_order=(1, 0, 1, 12)):
        self.order = order
        self.seasonal_order = seasonal_order
        self.model_ = None

    def fit(self, X, exog=None):
        self.exog_train = exog
        try:
            if isinstance(self.exog_train, pd.Series):
                # Train with the exogenous regressor (the Holiday flag)
                self.model_ = SARIMAX(X, exog=self.exog_train, order=self.order,
                                      seasonal_order=self.seasonal_order).fit(disp=False)
            else:
                self.model_ = SARIMAX(X, order=self.order,
                                      seasonal_order=self.seasonal_order).fit(disp=False)
        except Exception as e:
            # Some (order, seasonal_order) combinations fail to fit; skip them
            print(f"Skipping: order={self.order}, seasonal_order={self.seasonal_order}. Error: {e}")
            self.model_ = None
        return self

    def predict(self, n_steps, exog=None):
        # Placeholder forecast when fitting failed
        if self.model_ is None:
            return np.full(n_steps, 1E-10)
        try:
            if not isinstance(self.exog_train, pd.Series):
                return self.model_.forecast(steps=n_steps)
            elif isinstance(exog, pd.Series):
                # A model trained with exog needs future exog values for every forecast step
                return self.model_.forecast(steps=n_steps, exog=exog.iloc[:n_steps])
            else:
                raise ValueError('No exog data provided')
        except Exception as e:
            print(e)
            return None

    def score(self, X, exog=None):
        # MAPE of the forecast over the test horizon (lower is better)
        n_steps = len(X)
        predictions = self.predict(n_steps, exog)
        return mape(X, predictions)
```

Multiple training runs with MLflow and model selection:

With the help of the MLflow package we can track experiments, which saves time and avoids repeating the same job.
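The training loop iterates over `param_grid`, which is not defined in this section. One way to build such a grid is `itertools.product`; the value ranges below are a hypothetical search space loosely following the ACF/PACF reading earlier (p = 1, q in {1, 2}) with an assumed weekly season length of 7, not the author's actual grid.

```python
from itertools import product

# Hypothetical search space (assumed values, adjust to your own ACF/PACF and seasonality reading)
p_values, d_values, q_values = [1], [0], [1, 2]
P_values, D_values, Q_values = [0, 1], [0], [0, 1]
season_length = 7   # assumed weekly seasonality for daily data

# Each entry is an (order, seasonal_order) pair, as unpacked by the training loop
param_grid = [
    ((p, d, q), (P, D, Q, season_length))
    for p, d, q, P, D, Q in product(p_values, d_values, q_values, P_values, D_values, Q_values)
]
print(len(param_grid), param_grid[0])
```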

```python
import mlflow
import mlflow.sklearn

mlflow.set_experiment("Store ID Sales Forecasting-1.1.0")

for column in train_sales.columns:
    splitter = TimeSeriesSplitter()
    # Chronological split of the target series and of the Holiday regressor
    X_train, X_test = splitter.fit_transform(train_sales[column])
    X_train_exog, X_test_exog = splitter.fit_transform(exog_train_holiday)

    # param_grid: iterable of (order, seasonal_order) pairs to try
    for order, seasonal in param_grid:
        with mlflow.start_run():
            sarimax_estimator = SARIMAXEstimator(order=order, seasonal_order=seasonal)
            sarimax_estimator.fit(X=X_train, exog=X_train_exog)
            model_score = sarimax_estimator.score(X=X_test, exog=X_test_exog)

            # Log the series name, hyperparameters, metric and fitted model
            mlflow.set_tag('data', column)
            mlflow.log_params({'order': order, 'seasonal_order': seasonal})
            mlflow.log_metric('mape', model_score)
            mlflow.sklearn.log_model(sarimax_estimator.model_, 'model')
```

This code trains a model on each given set of hyperparameters and saves it on the MLflow UI server, where we can view and compare multiple models at a glance for different combinations of inputs.
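Model selection can also be done in code: `mlflow.search_runs` returns the logged runs as a pandas DataFrame with columns such as `metrics.mape`, `params.order` and `tags.<key>`. The sketch below assumes runs are tagged with the series name under a `data` key and picks the lowest-MAPE run per series; `best_run_per_series` is a hypothetical helper, and the frame in the usage is hand-made in that layout rather than real output.

```python
import pandas as pd

def best_run_per_series(runs: pd.DataFrame, tag_col: str = 'tags.data',
                        metric_col: str = 'metrics.mape') -> pd.DataFrame:
    """Return the lowest-MAPE run for every series tag."""
    runs = runs.dropna(subset=[metric_col])                  # skip runs without the metric
    best_idx = runs.groupby(tag_col)[metric_col].idxmin()    # row label of the best run per tag
    return runs.loc[best_idx].reset_index(drop=True)

# In practice: runs = mlflow.search_runs(experiment_names=["<experiment name>"])
# Hand-made frame in the same column layout (hypothetical values):
runs = pd.DataFrame({
    'tags.data': ['R1', 'R1', 'L3', 'L3'],
    'params.order': ['(1, 0, 1)', '(1, 0, 2)', '(1, 0, 1)', '(1, 0, 2)'],
    'metrics.mape': [0.12, 0.09, 0.20, 0.25],
})
best = best_run_per_series(runs)
print(best)
```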