Hello, I’m Bram. I’ve loved programming and electronics ever since I was a child. Now, as an adult, I work as a business and data analyst at a manufacturing company, where I visualize data using Power BI and create smart automation scripts with Python.

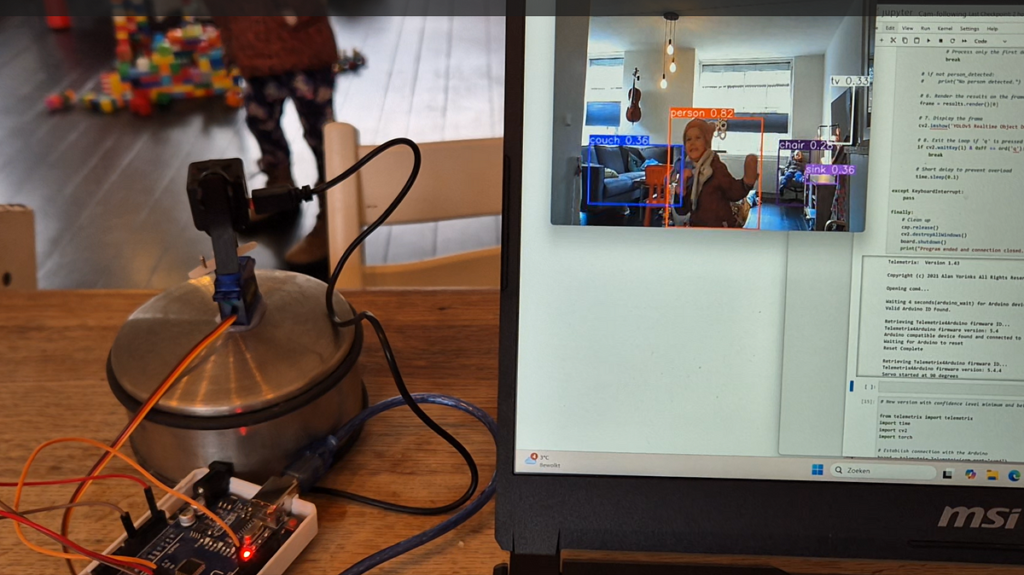

Technologies like machine learning and AI fascinate me. This project brings together all these aspects: programming with Python, using the latest techniques in object recognition, and interfacing with electronics, specifically a servo that moves the camera.

In this project, we’ll explore how to use AI tools like YOLOv5 with Python and how to interface with an Arduino using Telemetrix. We’ll demonstrate how to recognize and track objects, such as a person or pet, in real-time video footage from a webcam, and adjust the camera’s position accordingly.

The script lets the webcam detect and track objects such as a person or pet. When the object moves away from the center of the frame, a servo adjusts the position of the camera to keep the object centered.

- Webcam: Captures real-time video.

- Arduino: Interfaces with the servo motor.

- Servo Motor: Adjusts the camera position based on object detection.

- Python Libraries: YOLOv5 for object recognition and Telemetrix for Arduino management.
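
The centering behaviour described above can be sketched as a small pure function that needs no hardware to try out. This is only an illustration: the function name `next_servo_angle` is hypothetical, the defaults mirror the values used in the full script later in this post, and the direction convention (object on the left means a larger angle) is an assumption that depends on how your servo is mounted.

```python
def next_servo_angle(angle, offset_px, tolerance_px=50, step_deg=5,
                     min_angle=15, max_angle=200):
    """Return the new servo angle for a horizontal offset in pixels.

    offset_px is the object centre minus the frame centre, so a negative
    value means the object is left of centre. The sign convention
    (left -> larger angle) is an assumption tied to the servo mounting.
    """
    if abs(offset_px) <= tolerance_px:
        return angle  # close enough to the centre: leave the servo alone
    if offset_px < 0:
        return min(angle + step_deg, max_angle)
    return max(angle - step_deg, min_angle)


if __name__ == "__main__":
    print(next_servo_angle(90, -120))  # object far to the left
    print(next_servo_angle(90, 30))    # inside the tolerance band
    print(next_servo_angle(17, 200))   # would go below min_angle, so clamped
```

Keeping this decision separate from the camera and the board makes it easy to experiment with the tolerance and step size before any hardware is connected.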

First, establish a connection between your Arduino and computer using the Telemetrix library.

Install the Telemetrix4Arduino sketch on your Arduino board with the Arduino IDE. I followed the original instructions at:

https://mryslab.github.io/telemetrix/telemetrix4arduino/

Connect the servo to the Arduino as follows:

- Signal (yellow/white): to a digital pin (e.g., pin 9)

- Power (red): to the 5V pin

- Ground (black/brown): to the GND pin

Install Telemetrix in Python with: pip install telemetrix

Check whether Python can connect to your Arduino and control the servo:

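    import time

    from telemetrix import telemetrix

    SERVO_PIN = 9  # digital pin connected to the servo signal wire

    # Connect to the Arduino (COM4 on Windows; adjust for your machine)
    board = telemetrix.Telemetrix(com_port="com4")

    # Attach the servo with minimum and maximum pulse widths
    board.set_pin_mode_servo(SERVO_PIN, 100, 3000)
    time.sleep(.2)

    # Move the servo to 90 degrees, then close the connection
    board.servo_write(SERVO_PIN, 90)
    board.shutdown()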

This script runs on Windows and assumes your Arduino is connected to COM port 4. (Check Device Manager on your computer for the right port.)
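
If you would rather look up the port from Python than from Device Manager, the following is a minimal sketch. It assumes pyserial is available (I believe Telemetrix pulls it in as a dependency, but verify this on your system). The helper names `pick_port` and `list_serial_ports` and the keyword list are hypothetical; the description text of your board may differ.

```python
def pick_port(ports, keywords=("arduino", "ch340", "usb serial")):
    """Return the device name of the first port whose description matches.

    ports is an iterable of (device, description) pairs, for example
    ("COM4", "Arduino Uno (COM4)"). The keywords are illustrative guesses.
    """
    for device, description in ports:
        text = description.lower()
        if any(keyword in text for keyword in keywords):
            return device
    return None


def list_serial_ports():
    """Collect (device, description) pairs for all serial ports via pyserial."""
    from serial.tools import list_ports  # pyserial, imported only when needed
    return [(p.device, p.description) for p in list_ports.comports()]


if __name__ == "__main__":
    found = list_serial_ports()
    for device, description in found:
        print(device, "-", description)
    print("Best guess:", pick_port(found))
```

The value returned by `pick_port` could then be passed as `com_port` when creating the Telemetrix board object.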

The servo pin is the number of the pin that the servo's signal wire (yellow, orange, or white) is connected to.

The servo moves to position 90, and then the connection with the board is closed.
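
To get a feel for what "position 90" means electrically, here is a rough sketch. It assumes that the 100 and 3000 passed to `set_pin_mode_servo` are the minimum and maximum pulse widths in microseconds and that angles from 0 to 180 are mapped linearly between them. The real servo library may round or clamp differently, and `angle_to_pulse_us` is a hypothetical helper, not part of Telemetrix.

```python
def angle_to_pulse_us(angle_deg, min_pulse_us=100, max_pulse_us=3000):
    """Linearly map a 0-180 degree angle onto a pulse width in microseconds."""
    angle_deg = max(0, min(180, angle_deg))  # clamp to the usual servo range
    span = max_pulse_us - min_pulse_us
    return min_pulse_us + span * angle_deg / 180


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, "deg ->", angle_to_pulse_us(angle), "us")
```

Under these assumptions, 90 degrees sits halfway between the two limits, at 1550 microseconds.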

Congratulations, the first part, controlling an Arduino servo with a Python script, is done!

We will use the YOLOv5 model for object recognition. This model can identify various objects in real time.

Install the libraries: pip install torch opencv-python yolov5

The following script shows real-time video from the computer's camera with the detected objects until you press the ‘Q’ key.

    import cv2
    import torch

    # 1. Load the YOLOv5 model
    model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

    # 2. Start the webcam
    camera_dev_nr = 1
    cap = cv2.VideoCapture(camera_dev_nr)

    while True:
        # 3. Read a frame from the webcam
        ret, frame = cap.read()
        if not ret:
            break

        # 4. Perform object detection
        results = model(frame)

        # 5. Render the results on the frame
        frame = results.render()[0]

        # 6. Display the frame
        cv2.imshow('YOLOv5 Realtime Object Detection', frame)

        # 7. Break the loop if the user presses 'q'
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # 8. Clean up
    cap.release()
    cv2.destroyAllWindows()
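
Besides the rendered image, the YOLOv5 results object exposes the raw detections: `results.xyxy[0]` holds one row per detection in the order x_min, y_min, x_max, y_max, confidence, class id, and `results.names` maps class ids to names. The sketch below shows one way to pick a single target from such rows using plain Python lists. The function name `best_target` and the sample rows are made up for illustration, and choosing the most confident detection is my own suggestion rather than something the model requires.

```python
TRACKED = ("person", "cat", "dog")


def best_target(rows, names, min_confidence=0.6, tracked=TRACKED):
    """Return (class_name, confidence, centre_x) of the most confident
    tracked detection, or None when nothing qualifies.

    rows: iterable of [x_min, y_min, x_max, y_max, confidence, class_id]
    names: mapping from class id to class name
    """
    best = None
    for x_min, _y_min, x_max, _y_max, confidence, class_id in rows:
        name = names[int(class_id)]
        if name not in tracked or confidence < min_confidence:
            continue  # wrong class or not confident enough
        if best is None or confidence > best[1]:
            centre_x = (int(x_min) + int(x_max)) // 2
            best = (name, float(confidence), centre_x)
    return best


if __name__ == "__main__":
    # Made-up sample data in the same layout as results.xyxy[0].tolist()
    names = {0: "person", 15: "cat", 16: "dog", 56: "chair"}
    rows = [
        [100, 50, 300, 400, 0.72, 15],   # cat
        [320, 40, 600, 470, 0.91, 0],    # person
        [10, 10, 60, 60, 0.95, 56],      # chair: not tracked
        [200, 200, 260, 260, 0.40, 16],  # dog: below the threshold
    ]
    print(best_target(rows, names))
```

With a real model you would pass `results.xyxy[0].tolist()` and `results.names` instead of the sample data.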

The next script puts it all together: it combines the object recognition with the servo to adjust the camera position so that the object stays in the center. The objects to follow are set to ‘person’, ‘cat’ and ‘dog’. Although I only have cats, I found that a cat was sometimes identified as a dog.

    from telemetrix import telemetrix
    import time
    import cv2
    import torch

    # Establish connection with the Arduino
    board = telemetrix.Telemetrix(com_port="com4")

    # Define the servo pin
    servo_pin = 9  # Adjust this to the pin you are using

    # Initialize the servo on the specified pin
    board.set_pin_mode_servo(servo_pin, 100, 3000)

    # Starting position of the servo
    angle = 90
    board.servo_write(servo_pin, angle)
    print(f"Servo started at {angle} degrees")

    min_angle = 15
    max_angle = 200

    # Minimum confidence threshold for detection
    MIN_CONFIDENCE = 0.6

    # 1. Load the YOLOv5 model
    model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

    # 2. Start the webcam
    camera_dev_nr = 1
    cap = cv2.VideoCapture(camera_dev_nr)
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_center_x = frame_width // 2

    # Tolerance for centering
    CENTER_TOLERANCE = 50  # pixels

    try:
        while True:
            # 3. Read a frame from the webcam
            ret, frame = cap.read()
            if not ret:
                break

            # 4. Perform object detection
            results = model(frame)

            # 5. Extract detections
            detections = results.xyxy[0]  # tensor with detections
            object_detected = False

            for *box, confidence, cls in detections:
                class_name = results.names[int(cls)]
                if class_name in ['person', 'cat', 'dog'] and confidence >= MIN_CONFIDENCE:
                    object_detected = True
                    x_min, y_min, x_max, y_max = map(int, box)

                    # Calculate the midpoint of the bounding box
                    object_center_x = (x_min + x_max) // 2

                    # Calculate offset from the center
                    offset = object_center_x - frame_center_x
                    # print(f"Offset: {offset} pixels")

                    # Check if the object is centered
                    if abs(offset) > CENTER_TOLERANCE:
                        # Determine direction to rotate servo
                        if offset < 0:
                            # Object is to the left of the center, rotate servo left
                            angle = min(angle + 5, max_angle)
                        else:
                            # Object is to the right of the center, rotate servo right
                            angle = max(angle - 5, min_angle)

                        # Update servo position
                        board.servo_write(servo_pin, angle)
                        # print(f"Servo moved to {angle} degrees")
                    # else:
                    #     print("Object is centered.")

                    # Process only the first detected object
                    break

            # if not object_detected:
            #     print("No object detected.")

            # 6. Render the results on the frame
            frame = results.render()[0]

            # 7. Display the frame
            cv2.imshow('YOLOv5 Realtime Object Detection', frame)

            # 8. Exit the loop if 'q' is pressed
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break

            # Short delay to prevent overload
            time.sleep(0.1)

    except KeyboardInterrupt:
        pass

    finally:
        # Clean up
        cap.release()
        cv2.destroyAllWindows()
        board.shutdown()
        print("Program ended and connection closed.")
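
The script above always moves the servo in fixed 5-degree steps. As a possible refinement (not part of my original script), the step could grow with the distance from the center, so the camera catches up quickly when the object is far off and moves gently when it is nearly centered. The sketch below is a hedged illustration: `proportional_step` and `clamp` are hypothetical helpers, the step limits are arbitrary, and the direction convention follows the script above.

```python
def proportional_step(offset_px, frame_width_px, tolerance_px=50,
                      max_step_deg=10, min_step_deg=1):
    """Return a signed angle change that grows with the pixel offset.

    Negative offsets (object left of centre) give a positive change,
    following the direction convention of the script above.
    """
    if abs(offset_px) <= tolerance_px:
        return 0  # inside the dead band: no movement
    half_width = frame_width_px / 2
    fraction = min(abs(offset_px) / half_width, 1.0)
    step = max(min_step_deg, round(fraction * max_step_deg))
    return step if offset_px < 0 else -step


def clamp(value, low, high):
    """Keep value within the inclusive range [low, high]."""
    return max(low, min(high, value))


if __name__ == "__main__":
    angle = 90
    # Simulated offsets for a 640-pixel-wide frame
    for offset in (-320, -100, 20, 160):
        angle = clamp(angle + proportional_step(offset, 640), 15, 200)
        print(offset, "->", angle)
```

In the main loop, the two fixed-step lines would be replaced by a single update of the form `angle = clamp(angle + proportional_step(offset, frame_width), min_angle, max_angle)`.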

This project combines the power of AI with practical electronics to create a responsive system that can recognize and track objects. By leveraging Python, YOLOv5, and Arduino, we can build intelligent systems that interact with the physical world.

Happy coding, and enjoy bringing your projects to life!