Injecting LTL and Automata into Neural Policy Networks

Most reinforcement learning (RL) agents are creatures of habit: they learn by reward, not by reason. They explore blindly, optimize over time, and often forget that actions have consequences… sometimes irreversible ones. In high-stakes environments like autonomous driving, surgical robotics, or mission planning, that’s unacceptable.

What if we could inject rules into an agent’s very neural architecture: not post hoc as constraints, but inside the policy itself?

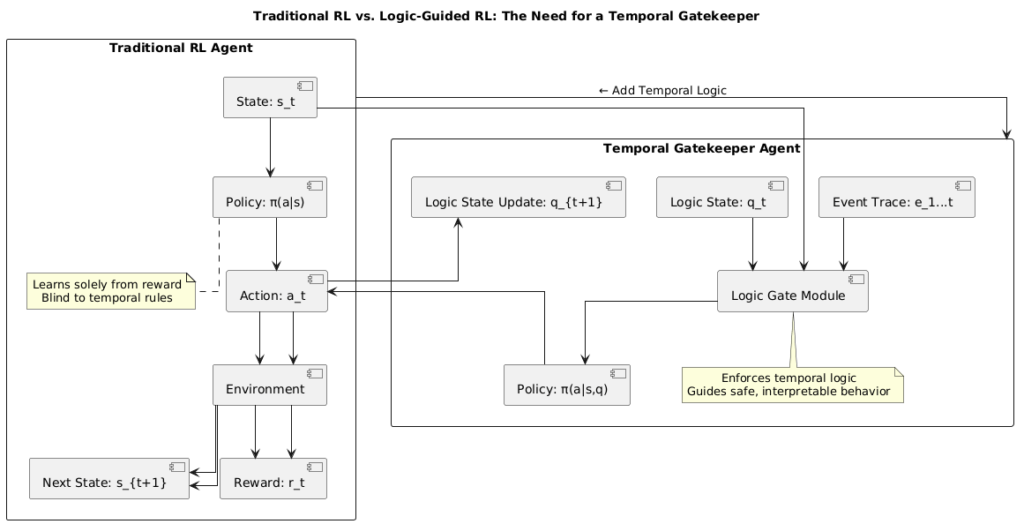

Today we introduce a concept we call the Temporal Gatekeeper: a reinforcement learning architecture embedded with temporal logic gates, enabling it to reason over time, about time, and with rules. These rules aren’t hand-wavy heuristics; they’re formal logic: Linear Temporal Logic (LTL), finite automata, and Boolean constraints.

This post shows how symbolic reasoning and neural optimization can co-exist inside a single policy model. It’s not just “Reinforcement Learning + Logic”: it’s learning structured behavior from structured rules.
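
To make "a rule inside the policy" a little more concrete, here is a minimal sketch of one way a temporal rule could gate a policy's output. It is an illustration under stated assumptions, not the Temporal Gatekeeper architecture itself: the action names, the rule `(!enter) W unlock` ("do not enter until you have unlocked"), the hand-written three-state automaton, and the helper names `step` and `gated_policy` are all hypothetical. The rule is tracked by a small finite automaton, and any action that would drive the automaton into its violation state gets probability zero before sampling.

```python
import math

# Hypothetical action set for illustration only.
ACTIONS = ["wait", "unlock", "enter"]

# Hand-written automaton for the LTL rule (!enter) W unlock:
# "enter" is forbidden until "unlock" has been taken.
# States: "locked" (initial), "open" (rule discharged), "violated" (sink).
TRANSITIONS = {
    ("locked", "wait"): "locked",
    ("locked", "unlock"): "open",
    ("locked", "enter"): "violated",
}


def step(state, action):
    """Advance the automaton by one action; unlisted pairs keep the state."""
    return TRANSITIONS.get((state, action), state)


def gated_policy(logits, state):
    """Softmax over logits with rule-violating actions masked to probability 0."""
    allowed = [step(state, a) != "violated" for a in ACTIONS]
    if not any(allowed):
        raise ValueError("no action satisfies the rule in state %r" % state)
    # Subtract the largest allowed logit for numerical stability.
    top = max(l for l, ok in zip(logits, allowed) if ok)
    exps = [math.exp(l - top) if ok else 0.0 for l, ok in zip(logits, allowed)]
    total = sum(exps)
    return [e / total for e in exps]
```

In the `locked` state, logits of `[0.0, 0.0, 5.0]` would normally favor `enter` heavily, but the gate assigns it zero probability and splits the mass evenly between `wait` and `unlock`. Once `unlock` moves the automaton to `open`, the mask lifts and the raw policy takes over. A real system would likely compile the automaton from an LTL formula with a dedicated tool rather than write it by hand.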