MLflow is an open-source platform designed to streamline the machine learning (ML) lifecycle. Among its core functionalities, MLflow Projects enable users to package ML code in a reproducible format, making it easy to share and deploy models across various environments.

Azure Databricks, a managed Apache Spark platform, provides scalable computing power to run MLflow Projects efficiently. This guide explores how to execute MLflow Projects on Azure Databricks, highlighting new features introduced in MLflow 2.14.

An MLflow Project is a structured format that packages machine learning code, ensuring reproducibility and ease of execution. It typically includes:

- MLproject file: A YAML file defining entry points and dependencies.

- Environment specification: Either conda.yaml, python_env.yaml, or a Docker configuration.

- Entry points: Scripts (.py or .sh) executed with specified parameters.

Example MLproject file:

    name: MyProject
    entry_points:
      main:
        parameters:
          data_file: path
          regularization: {type: float, default: 0.1}
        command: "python train.py --data_file {data_file} --regularization {regularization}"
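To make the role of the parameters section concrete, here is a minimal Python sketch of how an entry point's command template could be filled in from user-supplied values and declared defaults. The `ENTRY_POINT` dictionary and the `render_command` helper are hypothetical names introduced for illustration; MLflow's own substitution logic does more (for example, resolving path parameters), so treat this as a simplified model rather than the library's implementation.

```python
import shlex

# Hypothetical, simplified model of one MLproject entry point (not MLflow's internal classes).
ENTRY_POINT = {
    "parameters": {
        "data_file": {"type": "path"},
        "regularization": {"type": "float", "default": 0.1},
    },
    "command": "python train.py --data_file {data_file} --regularization {regularization}",
}


def render_command(entry_point, user_params):
    """Fill the command template, falling back to declared defaults."""
    values = {}
    for name, spec in entry_point["parameters"].items():
        if name in user_params:
            value = user_params[name]
        elif "default" in spec:
            value = spec["default"]
        else:
            raise ValueError(f"Missing required parameter: {name}")
        # Quote each value so it is safe to pass through a shell.
        values[name] = shlex.quote(str(value))
    return entry_point["command"].format(**values)


if __name__ == "__main__":
    # data_file is supplied by the caller; regularization uses its default.
    print(render_command(ENTRY_POINT, {"data_file": "data/wine.csv"}))
```

A parameter without a default, such as data_file here, must be supplied at run time; the sketch raises an error when it is missing.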

MLflow integrates seamlessly with Azure Databricks, enabling scalable execution of ML workflows. With Databricks Runtime 13.0 ML and later, MLflow Projects now run solely as Databricks Spark jobs, using a new structured format.

This format is required when running MLflow Projects on Databricks job clusters.

Key considerations:

- The MLproject file must define either databricks_spark_job.python_file or entry_points.

- If both or neither are defined, MLflow raises an error.
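The either/or rule above can be sketched as a small pre-flight check. The function below is a hypothetical helper, not part of MLflow; it assumes the MLproject file has already been parsed into a plain dictionary and mirrors the rule that exactly one of the two settings must be present.

```python
def validate_databricks_project(mlproject):
    """Return which launch style is configured, or raise ValueError.

    `mlproject` is the parsed MLproject file as a plain dict.
    This is an illustrative check, not MLflow's own validation code.
    """
    spark_job = mlproject.get("databricks_spark_job") or {}
    has_python_file = bool(spark_job.get("python_file"))
    has_entry_points = bool(mlproject.get("entry_points"))

    if has_python_file and has_entry_points:
        raise ValueError(
            "Set either databricks_spark_job.python_file or entry_points, not both."
        )
    if not has_python_file and not has_entry_points:
        raise ValueError(
            "Set one of databricks_spark_job.python_file or entry_points."
        )
    return "python_file" if has_python_file else "entry_points"


if __name__ == "__main__":
    project = {
        "name": "MyDatabricksProject",
        "databricks_spark_job": {"python_file": "train.py"},
    }
    print(validate_databricks_project(project))  # prints: python_file
```

Running a check like this locally can surface a misconfigured MLproject file before a job cluster is started.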

Example using databricks_spark_job.python_file:

    name: MyDatabricksProject
    databricks_spark_job:
      python_file: "train.py"
      parameters: ["--param1", "value1"]
      python_libraries:
        - mlflow==2.14
        - scikit-learn

Example using entry_points:

    name: MyDatabricksSparkProject
    databricks_spark_job:
      python_libraries:
        - mlflow==2.14
        - numpy
    entry_points:
      main:
        parameters:
          script_name: {type: string, default: train.py}
          model_name: {type: string, default: my_model}
        command: "python {script_name} {model_name}"

To run the project, execute:

    mlflow run . -b databricks --backend-config cluster-spec.json \
      -P script_name=train.py -P model_name=model123 \
      --experiment-id <experiment-id>
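The same launch can also be driven from Python through `mlflow.projects.run`, which accepts `backend` and `backend_config` arguments. The sketch below is one possible wrapper: `build_run_kwargs` and `launch` are hypothetical helper names, the experiment ID is whatever your workspace reports, and the cluster specification file is assumed to exist in the working directory.

```python
def build_run_kwargs(experiment_id, cluster_spec="cluster-spec.json"):
    """Collect the keyword arguments for mlflow.projects.run (illustrative helper)."""
    return {
        "uri": ".",                      # project in the current directory
        "backend": "databricks",         # same as -b databricks
        "backend_config": cluster_spec,  # same as --backend-config
        "parameters": {"script_name": "train.py", "model_name": "model123"},
        "experiment_id": experiment_id,  # same as --experiment-id
    }


def launch(experiment_id):
    """Submit the project; requires MLflow and Databricks authentication."""
    # Imported lazily so build_run_kwargs can be used without MLflow installed.
    import mlflow.projects

    return mlflow.projects.run(**build_run_kwargs(experiment_id))


if __name__ == "__main__":
    # Inspect the arguments without contacting a workspace.
    print(build_run_kwargs("<experiment-id>"))
```

Keeping the argument construction separate from the call makes it possible to review or log the settings before a cluster is started.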

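To execute an MLflow Project on Azure Databricks, use the command:

    mlflow run <uri> -b databricks --backend-config <json-new-cluster-spec>

Here, the first placeholder is the Git repository URI or folder containing the MLflow Project, and the second is a JSON document or file describing the new cluster.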

Example cluster specification:

    {
      "spark_version": "13.0.x-scala2.12",
      "num_workers": 2,
      "node_type_id": "Standard_DS3_v2"
    }
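If you prefer to generate the specification file rather than write it by hand, a short Python sketch such as the following could produce cluster-spec.json. The `write_cluster_spec` helper is hypothetical, and its check covers only the three keys used in the example above; it is not a substitute for the validation Databricks performs.

```python
import json
from pathlib import Path

# Values copied from the example cluster specification.
CLUSTER_SPEC = {
    "spark_version": "13.0.x-scala2.12",
    "num_workers": 2,
    "node_type_id": "Standard_DS3_v2",
}


def write_cluster_spec(path, spec=CLUSTER_SPEC):
    """Write the spec as JSON after a basic sanity check (illustrative only)."""
    expected = {"spark_version", "num_workers", "node_type_id"}
    missing = expected - spec.keys()
    if missing:
        raise ValueError(f"Cluster spec is missing keys: {sorted(missing)}")
    target = Path(path)
    target.write_text(json.dumps(spec, indent=2))
    return target


if __name__ == "__main__":
    print(write_cluster_spec("cluster-spec.json"))
```

The resulting file can then be passed to the --backend-config option.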

To install dependencies on worker nodes:

    {
      "new_cluster": {
        "spark_version": "13.0.x-scala2.12",
        "num_workers": 2,
        "node_type_id": "Standard_DS3_v2"
      },
      "libraries": [
        { "pypi": { "package": "tensorflow" } },
        { "pypi": { "package": "mlflow" } }
      ]
    }
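The structure above nests the cluster definition under new_cluster and lists each PyPI package as its own entry. A small Python sketch can build that shape from a list of package names; `with_pypi_libraries` is a hypothetical helper introduced here for illustration.

```python
import json


def with_pypi_libraries(new_cluster, packages):
    """Wrap a cluster definition and attach PyPI libraries (illustrative helper)."""
    return {
        "new_cluster": dict(new_cluster),
        "libraries": [{"pypi": {"package": name}} for name in packages],
    }


if __name__ == "__main__":
    cluster = {
        "spark_version": "13.0.x-scala2.12",
        "num_workers": 2,
        "node_type_id": "Standard_DS3_v2",
    }
    spec = with_pypi_libraries(cluster, ["tensorflow", "mlflow"])
    print(json.dumps(spec, indent=2))
```

Generating the list this way keeps the package names in one place when several projects share the same cluster definition.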

To enable SparkR, install and import it inside your MLflow Project:

    if (file.exists("/databricks/spark/R/pkg")) {
      install.packages("/databricks/spark/R/pkg", repos = NULL)
    } else {
      install.packages("SparkR")
    }
    library(SparkR)
    sparkR.session()

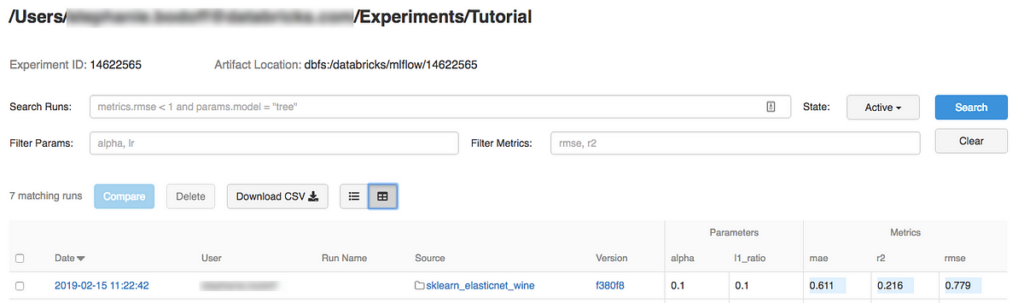

This example shows how to create an experiment, run the MLflow tutorial project on an Azure Databricks cluster, view the job run output, and view the run in the experiment.

- Install MLflow using pip install mlflow.

- Install and configure the Databricks CLI. The Databricks CLI authentication mechanism is required to run jobs on an Azure Databricks cluster.

- In the workspace, select Create > MLflow Experiment.

- In the Name field, enter Tutorial.

- Click Create. Note the Experiment ID. In this example, it is 14622565.

The following steps set up the MLFLOW_TRACKING_URI environment variable and run the project, recording the training parameters, metrics, and the trained model to the experiment noted in the preceding step:

1. Set the MLFLOW_TRACKING_URI environment variable to the Azure Databricks workspace.

       export MLFLOW_TRACKING_URI=databricks

2. Run the MLflow tutorial project, training a wine model. Replace the experiment ID placeholder in the command with the Experiment ID you noted in the preceding step.

       mlflow run https://github.com/mlflow/mlflow#examples/sklearn_elasticnet_wine -b databricks --backend-config cluster-spec.json --experiment-id <experiment-id>

       === Fetching project from https://github.com/mlflow/mlflow#examples/sklearn_elasticnet_wine into /var/folders/kc/l20y4txd5w3_xrdhw6cnz1080000gp/T/tmpbct_5g8u ===
       === Uploading project to DBFS path /dbfs/mlflow-experiments//projects-code/16e66ccbff0a4e22278e4d73ec733e2c9a33efbd1e6f70e3c7b47b8b5f1e4fa3.tar.gz ===
       === Finished uploading project to /dbfs/mlflow-experiments//projects-code/16e66ccbff0a4e22278e4d73ec733e2c9a33efbd1e6f70e3c7b47b8b5f1e4fa3.tar.gz ===
       === Running entry point main of project https://github.com/mlflow/mlflow#examples/sklearn_elasticnet_wine on Databricks ===
       === Launched MLflow run as Databricks job run with ID 8651121. Getting run status page URL... ===
       === Check the run's status at https://#job//run/1 ===

3. Copy the URL https:// in the last line of the MLflow run output.
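When the run is launched from a script, the job run ID can be pulled out of the captured output instead of being copied by hand. The following Python sketch assumes the output contains a line worded like the "Launched MLflow run as Databricks job run with ID" message shown above; the `extract_job_run_id` helper is hypothetical, and the message format may differ between MLflow versions.

```python
import re

# Matches the launch message from the sample output; the wording is an assumption
# based on that sample and is not guaranteed to be stable.
RUN_ID_PATTERN = re.compile(r"Launched MLflow run as Databricks job run with ID (\d+)")


def extract_job_run_id(output):
    """Return the Databricks job run ID found in the output, or None."""
    match = RUN_ID_PATTERN.search(output)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    sample = (
        "=== Launched MLflow run as Databricks job run with ID 8651121. "
        "Getting run status page URL... ==="
    )
    print(extract_job_run_id(sample))  # prints: 8651121
```

Returning None rather than raising keeps the helper usable on partial output while a run is still starting.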

Open the URL you copied in the preceding step in a browser to view the Azure Databricks job run output.

1. Navigate to the experiment in your Azure Databricks workspace.

2. Click the experiment.

3. To display run details, click a link in the Date column.

You can view logs from your run by clicking the Logs link in the Job Output field.

For some example MLflow projects, see the MLflow App Library, which contains a repository of ready-to-run projects aimed at making it easy to include ML functionality into your code.