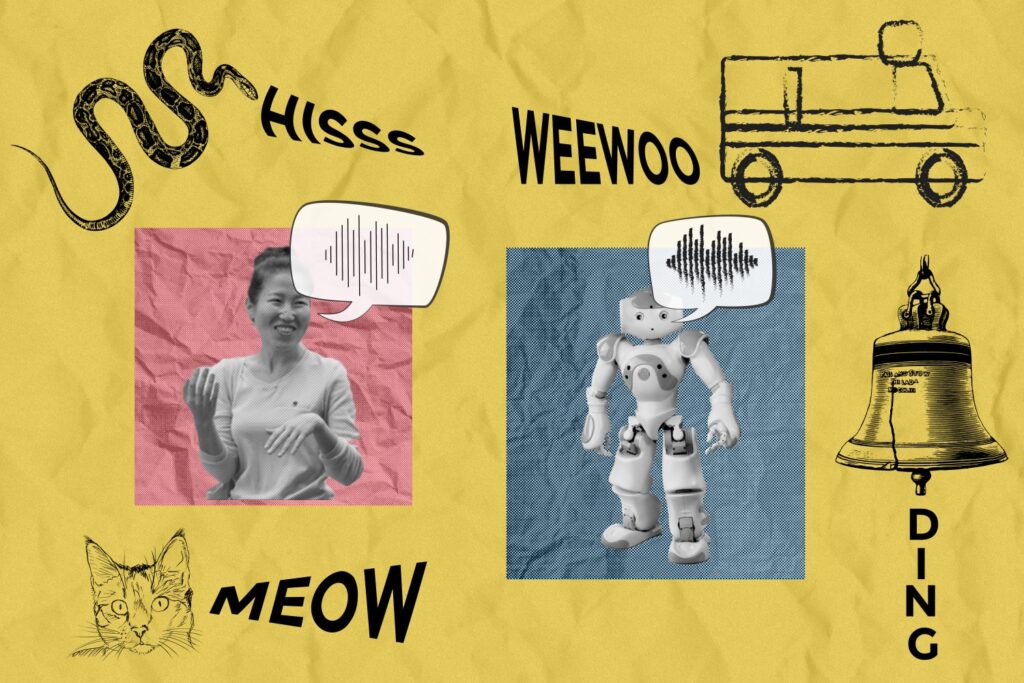

Whether you’re describing the sound of your faulty car engine or meowing like your neighbor’s cat, imitating sounds with your voice can be a helpful way to relay a concept when words don’t do the trick.

Vocal imitation is the sonic equivalent of doodling a quick picture to communicate something you saw, except that instead of using a pencil to illustrate an image, you use your vocal tract to express a sound. This might seem difficult, but it’s something we all do intuitively: To experience it for yourself, try using your voice to mirror the sound of an ambulance siren, a crow, or a bell being struck.

Inspired by the cognitive science of how we communicate, MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers have developed an AI system that can produce human-like vocal imitations with no training, and without ever having “heard” a human vocal impression before.

To achieve this, the researchers engineered their system to produce and interpret sounds much like we do. They started by building a model of the human vocal tract that simulates how vibrations from the voice box are shaped by the throat, tongue, and lips. Then, they used a cognitively inspired AI algorithm to control this vocal tract model and make it produce imitations, taking into account the context-specific ways that humans choose to communicate sound.
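The article does not describe the internals of the researchers' vocal tract simulator. As a rough illustration of the general idea only, the sketch below uses the classic source-filter approach as a stand-in: an impulse train plays the role of the voice box, and a chain of resonators plays the role of the throat, tongue, and lips. Every function name, the sample rate, and the formant values are assumptions made for this example.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed for this sketch)

def glottal_source(pitch_hz, duration_s):
    """Impulse train standing in for vibrations from the voice box."""
    period = round(SAMPLE_RATE / pitch_hz)
    n_samples = round(duration_s * SAMPLE_RATE)
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]

def resonator(signal, center_hz, bandwidth_hz):
    """Two-pole filter that boosts frequencies near center_hz (one formant)."""
    r = math.exp(-math.pi * bandwidth_hz / SAMPLE_RATE)
    theta = 2 * math.pi * center_hz / SAMPLE_RATE
    a1, a2 = 2 * r * math.cos(theta), -r * r
    out, y1, y2 = [], 0.0, 0.0
    for x in signal:
        y = x + a1 * y1 + a2 * y2  # y[n] = x[n] + a1*y[n-1] + a2*y[n-2]
        out.append(y)
        y1, y2 = y, y1
    return out

def synthesize(pitch_hz, formants, duration_s=0.1):
    """Pass the source through one resonator per (center_hz, bandwidth_hz) pair."""
    signal = glottal_source(pitch_hz, duration_s)
    for center_hz, bandwidth_hz in formants:
        signal = resonator(signal, center_hz, bandwidth_hz)
    return signal

def magnitude_at(signal, freq_hz):
    """Magnitude of a single DFT bin, used only to inspect the result."""
    step = 2 * math.pi * freq_hz / SAMPLE_RATE
    re = sum(x * math.cos(step * i) for i, x in enumerate(signal))
    im = sum(x * math.sin(step * i) for i, x in enumerate(signal))
    return math.hypot(re, im)

# Hypothetical vowel-like setting: 100 Hz pitch, formants near 700 and 1200 Hz.
vowel = synthesize(pitch_hz=100, formants=[(700, 100), (1200, 120)])
```

With these toy settings, `magnitude_at(vowel, 700)` comes out far larger than `magnitude_at(vowel, 2500)`, which shows the filter reshaping the same source. A controller in the spirit of the article would search over the pitch and formant parameters rather than fix them by hand.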

The model can effectively take many sounds from the world and generate a human-like imitation of them, including noises like leaves rustling, a snake’s hiss, and an approaching ambulance siren. The model can also be run in reverse to guess real-world sounds from human vocal imitations, similar to how some computer vision systems can retrieve high-quality images based on sketches. For instance, the model can correctly distinguish the sound of a human imitating a cat’s “meow” versus its “hiss.”
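One simple way to picture the reverse direction is as a listener who compares an imitation against a set of known sounds and weighs each by how close it is. The sketch below is a toy illustration under that assumption, not the researchers' method: the two-number feature vectors (pitch glide, noisiness), the `infer_sound` function, and all values are hypothetical.

```python
import math

def infer_sound(imitation, known_sounds):
    """Return a probability for each known sound, given an imitation's features."""
    # Similarity is the negative Euclidean distance between feature vectors.
    sims = {name: -math.dist(imitation, feats) for name, feats in known_sounds.items()}
    peak = max(sims.values())
    # Softmax over similarities, shifted by the peak for numerical stability.
    weights = {name: math.exp(s - peak) for name, s in sims.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

# Toy features: (pitch glide, noisiness). Values are made up for illustration.
CAT_SOUNDS = {"meow": (0.9, 0.1), "hiss": (0.1, 0.9)}
human_meow = (0.7, 0.2)

posterior = infer_sound(human_meow, CAT_SOUNDS)
guess = max(posterior, key=posterior.get)
print(guess)
```

Here the imitation sits much closer to the toy "meow" features than to "hiss," so the listener's guess is "meow."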

In the future, this model could potentially lead to more intuitive “imitation-based” interfaces for sound designers, more human-like AI characters in virtual reality, and even methods to help students learn new languages.

The co-lead authors (MIT CSAIL PhD students Kartik Chandra SM ’23 and Karima Ma, and undergraduate researcher Matthew Caren) note that computer graphics researchers have long recognized that realism isn’t the ultimate goal of visual expression. For example, an abstract painting or a child’s crayon doodle can be just as expressive as a photograph.

“Over the past few decades, advances in sketching algorithms have led to new tools for artists, advances in AI and computer vision, and even a deeper understanding of human cognition,” notes Chandra. “In the same way that a sketch is an abstract, non-photorealistic representation of an image, our method captures the abstract, non-phono-realistic ways humans express the sounds they hear. This teaches us about the process of auditory abstraction.”

“The goal of this project has been to understand and computationally model vocal imitation, which we take to be the sort of auditory equivalent of sketching in the visual domain,” says Caren.

The art of imitation, in three parts

The team developed three increasingly nuanced versions of the model to compare to human vocal imitations. First, they created a baseline model that simply aimed to generate imitations that were as similar to real-world sounds as possible, but this model didn’t match human behavior very well.

The researchers then designed a second “communicative” model. According to Caren, this model considers what’s distinctive about a sound to a listener. For instance, you’d likely imitate the sound of a motorboat by mimicking the rumble of its engine, since that’s its most distinctive auditory feature, even if it’s not the loudest aspect of the sound (compared to, say, the water splashing). This second model created imitations that were better than the baseline, but the team wanted to improve it even more.

To take their method a step further, the researchers added a final layer of reasoning to the model. “Vocal imitations can sound different based on the amount of effort you put into them. It costs time and energy to produce sounds that are perfectly accurate,” says Chandra. The researchers’ full model accounts for this by trying to avoid utterances that are very rapid, loud, or high- or low-pitched, which people are less likely to use in a conversation. The result: more human-like imitations that closely match many of the decisions that humans make when imitating the same sounds.
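The three versions can be read as three scoring rules applied to the same candidate imitations. The sketch below is a toy rendering of that progression, not the researchers' implementation: the two-number features (engine rumble, water splash), the stored `effort` values, the softmax listener, and every name are assumptions chosen so the motorboat example plays out on a small scale.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Imitation:
    name: str
    features: tuple  # (engine rumble, water splash), toy values
    effort: float    # hypothetical cost of producing the sound

def similarity(a, b):
    """Negative Euclidean distance: higher means more alike."""
    return -math.dist(a, b)

def baseline_score(im, target):
    # Version 1: sound as much like the target as possible.
    return similarity(im.features, target)

def communicative_score(im, target, distractors):
    # Version 2: log-probability that a listener picks the target
    # over other sounds (softmax over similarities).
    sims = [similarity(im.features, s) for s in (target, *distractors)]
    peak = max(sims)
    log_total = peak + math.log(sum(math.exp(s - peak) for s in sims))
    return sims[0] - log_total

def full_score(im, target, distractors, effort_weight=1.0):
    # Version 3: communicative score minus a penalty for effort.
    return communicative_score(im, target, distractors) - effort_weight * im.effort

MOTORBOAT = (0.6, 0.9)  # some rumble, a lot of splashing
WATERFALL = (0.0, 1.0)  # a competing sound that is all splashing
CANDIDATES = [
    Imitation("splash", (0.0, 1.0), effort=0.1),
    Imitation("rumble", (1.0, 0.0), effort=0.2),
    Imitation("exaggerated rumble", (1.5, 0.0), effort=0.9),
]

def best(score):
    return max(CANDIDATES, key=score).name

baseline_pick = best(lambda im: baseline_score(im, MOTORBOAT))
communicative_pick = best(lambda im: communicative_score(im, MOTORBOAT, [WATERFALL]))
full_pick = best(lambda im: full_score(im, MOTORBOAT, [WATERFALL]))
```

With these made-up numbers, the baseline rule picks "splash" because it is acoustically closest, the communicative rule picks "exaggerated rumble" because it best separates the motorboat from the waterfall, and the full rule settles on the plain "rumble" once effort is charged.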

After building this model, the team conducted a behavioral experiment to see whether the AI- or human-generated vocal imitations were perceived as better by human judges. Notably, participants in the experiment favored the AI model 25 percent of the time in general, and as much as 75 percent for an imitation of a motorboat and 50 percent for an imitation of a gunshot.

Toward more expressive sound technology

Passionate about technology for music and art, Caren envisions that this model could help artists better communicate sounds to computational systems and assist filmmakers and other content creators with generating AI sounds that are more nuanced to a specific context. It could also enable a musician to rapidly search a sound database by imitating a noise that is difficult to describe in, say, a text prompt.

In the meantime, Caren, Chandra, and Ma are looking at the implications of their model in other domains, including the development of language, how infants learn to talk, and even imitation behaviors in birds like parrots and songbirds.

The team still has work to do with the current iteration of their model: It struggles with some consonants, like “z,” which led to inaccurate impressions of some sounds, like bees buzzing. The model also can’t yet replicate how humans imitate speech, music, or sounds that are imitated differently across different languages, like a heartbeat.

Stanford University linguistics professor Robert Hawkins says that language is full of onomatopoeia and words that mimic but don’t fully replicate the things they describe, like the “meow” sound that very inexactly approximates the sound that cats make. “The processes that get us from the sound of a real cat to a word like ‘meow’ reveal a lot about the intricate interplay between physiology, social reasoning, and communication in the evolution of language,” says Hawkins, who wasn’t involved in the CSAIL research. “This model presents an exciting step toward formalizing and testing theories of those processes, demonstrating that both physical constraints from the human vocal tract and social pressures from communication are needed to explain the distribution of vocal imitations.”

Caren, Chandra, and Ma wrote the paper with two other CSAIL affiliates: Jonathan Ragan-Kelley, MIT Department of Electrical Engineering and Computer Science associate professor, and Joshua Tenenbaum, MIT Brain and Cognitive Sciences professor and Center for Brains, Minds, and Machines member. Their work was supported, in part, by the Hertz Foundation and the National Science Foundation. It was presented at SIGGRAPH Asia in early December.