My Deep Learning journey began during the summer of 2024, and since then I’ve been searching for a proper YouTube video or blog that describes the complete Math behind back-propagation. There are many resources that beautifully describe the intuition behind back-propagation but never go into the Math completely. There are quite a few resources that do touch on the Math of back-propagation, but none of them were able to satisfy me.

So this summer I decided to create my own blog discussing the Math behind Back-propagation. Hopefully this blog fills the small gaps in the already vast amount of information about Back-propagation that is available on the Internet. Before reading this blog, I’d highly recommend that you watch the first 4 videos of this YouTube playlist. This playlist of videos from 3Blue1Brown beautifully explains the intuition behind back-propagation.

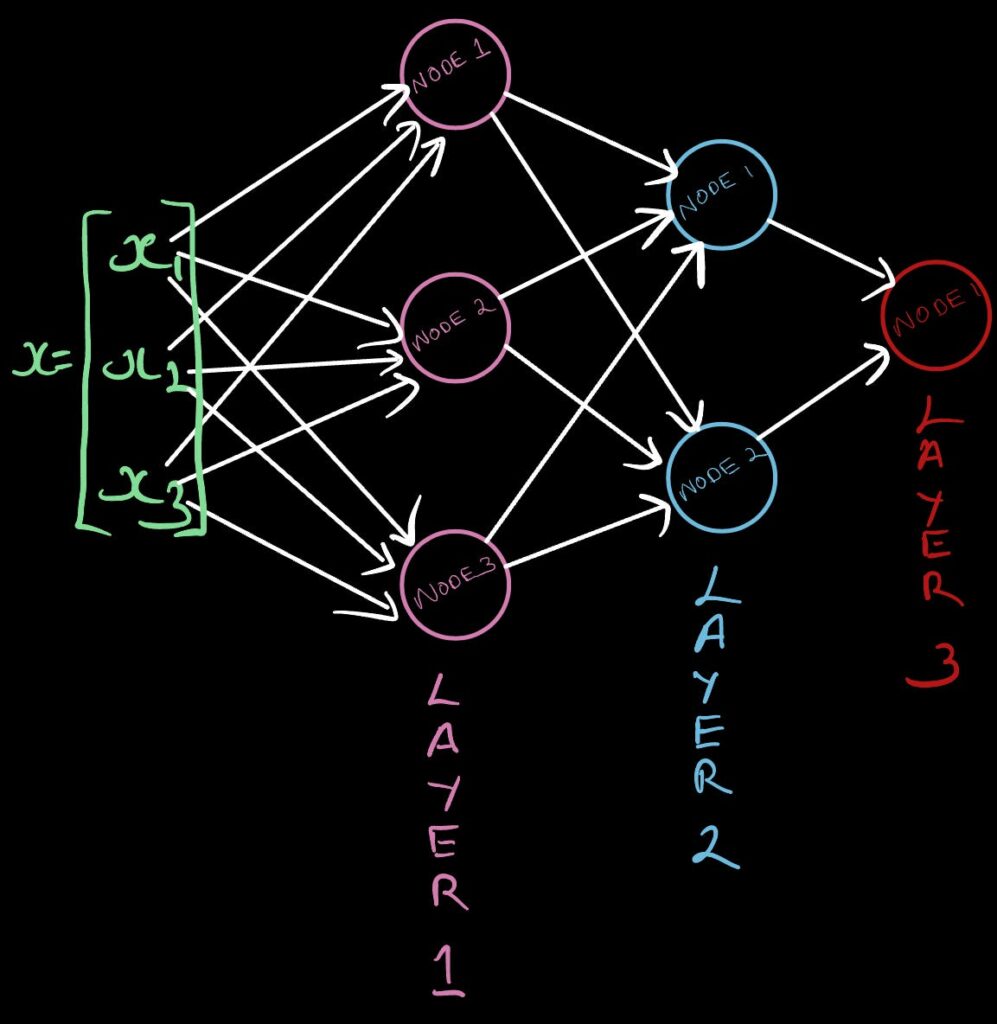

Get a pen and a notebook so that you can work along with me. The best way to understand any Math is to do it… Now let’s set up the neural network that we’ll be using to understand the Math. We will be using a simple 3 layer neural network.

Here is our 3 layer binary classification neural network. We’re using tanh activation functions for layers 1 and 2. For the final layer we will be using the sigmoid activation function.

For the sake of simplicity, we will assume that training is done using a batch size of 1, meaning every training step uses just one training example rather than a more typical batch size of 16 or 32.

Notations:

Here are all the parameters and outputs in every layer:

Input Layer:

Layer 1:

Layer 2:

Layer 3:
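Concrete shapes make the per-layer notation easier to hold onto. Below is a minimal sketch that assumes hypothetical layer sizes (3 input features, 4 units in layer 1, 3 in layer 2, 1 output) and the common convention that $W^{[l]}$ has shape $(n^{[l]}, n^{[l-1]})$ and $b^{[l]}$ has shape $(n^{[l]}, 1)$. The network in the diagram may use different sizes, and some texts store the weights transposed. The helper names `init_params` and `shape` are illustrative only.

```python
import random

# Hypothetical layer sizes, for illustration only:
# 3 input features -> 4 units (layer 1) -> 3 units (layer 2) -> 1 output (layer 3)
LAYER_SIZES = [3, 4, 3, 1]

def init_params(sizes, seed=0):
    """Create W{l} with shape (n[l], n[l-1]) and b{l} with shape (n[l], 1)."""
    rng = random.Random(seed)
    params = {}
    for l in range(1, len(sizes)):
        n_out, n_in = sizes[l], sizes[l - 1]
        # Small random weights so that units in the same layer start out different
        params[f"W{l}"] = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                           for _ in range(n_out)]
        # Bias as a column vector: n_out rows, 1 column
        params[f"b{l}"] = [[0.0] for _ in range(n_out)]
    return params

def shape(matrix):
    """(rows, columns) of a matrix stored as a list of rows."""
    return (len(matrix), len(matrix[0]))

params = init_params(LAYER_SIZES)
for name in ("W1", "b1", "W2", "b2", "W3", "b3"):
    print(name, shape(params[name]))
# W1 (4, 3)
# b1 (4, 1)
# W2 (3, 4)
# b2 (3, 1)
# W3 (1, 3)
# b3 (1, 1)

# With a batch size of 1, the input x is a single (3, 1) column vector
x = [[0.5], [-1.2], [2.0]]
print("x", shape(x))   # x (3, 1)
```

Reading the shapes in order shows why the layers chain together: $W^{[2]}$ needs 4 columns because layer 1 produces 4 outputs.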

Before moving on to forward and backward propagation, there are a few Mathematical concepts that you need to be familiar with. Feel free to come back to this section of the blog whenever you need to.

Transpose

The transpose of a matrix is another matrix in which the rows of the new matrix are the columns of the old matrix and vice versa, so an m × n matrix becomes an n × m matrix.
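Here is a tiny sketch in plain Python. It stores a matrix as a list of rows, a choice made only to keep the example dependency-free. A 2 × 3 matrix becomes 3 × 2, and transposing twice gives back the original.

```python
def transpose(matrix):
    """Return the transpose of a matrix stored as a list of rows."""
    # zip(*matrix) collects the i-th entry of every row, i.e. the i-th column
    return [list(column) for column in zip(*matrix)]

A = [[1, 2, 3],
     [4, 5, 6]]                       # 2 x 3

print(transpose(A))                   # [[1, 4], [2, 5], [3, 6]]  (3 x 2)
print(transpose(transpose(A)) == A)   # True
```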

Matrix Multiplication

The golden rule for multiplying two matrices A and B (A × B) is that the number of columns in matrix A must equal the number of rows in matrix B. The resulting matrix has the same number of rows as matrix A and the same number of columns as matrix B; for example, a 2 × 3 matrix times a 3 × 4 matrix gives a 2 × 4 matrix. Here is how you actually perform matrix multiplication: the entry in row i and column j of the result is the dot product of row i of A with column j of B.
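The same rule in code, again storing matrices as lists of rows. This is an illustrative sketch, not how a numerical library implements it. The function refuses mismatched shapes and otherwise fills each entry with a row-by-column dot product.

```python
def matmul(A, B):
    """Multiply an (m x n) matrix A by an (n x p) matrix B; the result is (m x p)."""
    n = len(A[0])
    if n != len(B):
        # The golden rule: columns of A must equal rows of B
        raise ValueError(f"columns of A ({n}) != rows of B ({len(B)})")
    # Entry (i, j) is the dot product of row i of A with column j of B
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[1, 2, 3],
     [4, 5, 6]]      # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]       # 3 x 2

print(matmul(A, B))  # [[58, 64], [139, 154]]  (2 x 2)

try:
    matmul(A, A)     # 2 x 3 times 2 x 3: 3 columns vs 2 rows
except ValueError as err:
    print(err)       # columns of A (3) != rows of B (2)
```

Swapping the order gives a 3 × 3 result, because `matmul(B, A)` multiplies a 3 × 2 matrix by a 2 × 3 one. Matrix multiplication is not commutative.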

Differentiation

Differentiation enables us to find the rate of change of a function with respect to the variable that the function depends on. Here are a few important differentiation equations that one must know:
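This network relies on three derivatives in particular: $\frac{d}{dx}\tanh x = 1 - \tanh^2 x$ (layers 1 and 2), $\frac{d}{dx}\sigma(x) = \sigma(x)\left(1 - \sigma(x)\right)$ (layer 3), and $\frac{d}{dx}\ln x = \frac{1}{x}$ (from the loss). You can check each one against a central-difference estimate. The sketch below does that; `numerical_derivative` is a hypothetical helper, not a library function.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Closed-form derivatives that show up in back-propagation
def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2      # d/dx tanh(x) = 1 - tanh(x)^2

def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)                # d/dx sigma(x) = sigma(x) * (1 - sigma(x))

def d_ln(x):
    return 1.0 / x                      # d/dx ln(x) = 1 / x, valid for x > 0

def numerical_derivative(f, x, h=1e-6):
    """Central-difference estimate of f'(x): (f(x + h) - f(x - h)) / 2h."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (-1.5, 0.3, 2.0):
    print(f"x={x}: tanh' {d_tanh(x):.6f} vs {numerical_derivative(math.tanh, x):.6f}, "
          f"sigmoid' {d_sigmoid(x):.6f} vs {numerical_derivative(sigmoid, x):.6f}")
print(f"ln'(2.0) {d_ln(2.0):.6f} vs {numerical_derivative(math.log, 2.0):.6f}")
```

Both the tanh and sigmoid derivatives can be written in terms of the function's own output. Back-propagation exploits this by reusing the activations saved during forward propagation.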

Partial differentiation

Partial differentiation enables us to find the rate of change of a function with respect to one of the many variables it depends on. Whenever we partially differentiate with respect to a particular variable, we treat all the other variables as constants and apply the rules of normal differentiation. Watch this video for examples. For our particular scenario, we must first understand how to partially differentiate a scalar with respect to a vector and a vector with respect to a vector:
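Concretely, the derivative of a scalar with respect to an $n$-vector is an $n$-vector of partial derivatives (the gradient). The derivative of an $m$-vector $g$ with respect to an $n$-vector $v$ is an $m \times n$ matrix (the Jacobian) whose entry $(i, j)$ is $\partial g_i / \partial v_j$. The sketch below estimates both numerically by nudging one component at a time while holding the rest constant, which is exactly the "treat the other variables as constants" rule. The helper names are hypothetical, and some texts lay the gradient out as a row instead of a column; the entries are the same either way.

```python
import math

def gradient(f, v, h=1e-6):
    """Numerical gradient of a scalar function f with respect to the vector v."""
    grad = []
    for i in range(len(v)):
        up, down = list(v), list(v)
        up[i] += h      # nudge only component i ...
        down[i] -= h    # ... while every other component is held constant
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def jacobian(g, v, h=1e-6):
    """Numerical Jacobian of a vector function g: entry [i][j] is d g_i / d v_j."""
    columns = []
    for j in range(len(v)):
        up, down = list(v), list(v)
        up[j] += h
        down[j] -= h
        # Column j: how every output changes when only v_j moves
        columns.append([(a - b) / (2 * h) for a, b in zip(g(up), g(down))])
    return [list(row) for row in zip(*columns)]   # columns -> rows

# Scalar w.r.t. vector: f(w) = w . x, whose gradient is x itself
x = [1.0, -2.0, 0.5]

def f(w):
    return sum(wi * xi for wi, xi in zip(w, x))

print(gradient(f, [0.2, 0.4, -0.1]))   # approximately [1.0, -2.0, 0.5]

# Vector w.r.t. vector: element-wise tanh has a diagonal Jacobian
def g(z):
    return [math.tanh(zi) for zi in z]

z = [0.1, -0.3, 0.8]
for row in jacobian(g, z):
    print([round(entry, 4) for entry in row])
```

Because an element-wise activation such as $\tanh$ has a diagonal Jacobian, back-propagation can replace the full matrix product with an element-wise multiplication.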

Forward propagation refers to the process of sending our inputs through the neural network to get a prediction. Before performing back-propagation, we must first perform forward propagation, because back-propagation reuses its outputs (the intermediate values computed at every layer).

You might notice the use of transpose when multiplying two vectors. The transpose ensures that the shapes of the matrices being multiplied comply with the rules of matrix multiplication. If you need a refresher on matrix multiplication rules, check out this YouTube video.
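The whole forward pass fits in a few lines of plain Python. This sketch assumes the common convention $z^{[l]} = W^{[l]}a^{[l-1]} + b^{[l]}$ with $a^{[0]} = x$, hypothetical layer sizes (3 → 4 → 3 → 1), and random weights. Vectors are flat lists here, so `matvec` handles implicitly the row-versus-column bookkeeping that transposes handle on paper. Every intermediate $z$ and $a$ is cached because back-propagation reuses them.

```python
import math
import random

def matvec(W, v):
    """(n_out x n_in) matrix times an n_in vector gives an n_out vector."""
    return [sum(w * vi for w, vi in zip(row, v)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(W, b, a_prev, activation):
    """One layer: z = W a_prev + b, then a = activation(z) element-wise."""
    z = [s + bi for s, bi in zip(matvec(W, a_prev), b)]
    return z, [activation(zi) for zi in z]

def forward(params, x):
    z1, a1 = dense(params["W1"], params["b1"], x, math.tanh)    # layer 1: tanh
    z2, a2 = dense(params["W2"], params["b2"], a1, math.tanh)   # layer 2: tanh
    z3, a3 = dense(params["W3"], params["b3"], a2, sigmoid)     # layer 3: sigmoid
    # Cache every intermediate value; back-propagation reuses them
    return {"z1": z1, "a1": a1, "z2": z2, "a2": a2, "z3": z3, "a3": a3}

# Hypothetical sizes: 3 inputs -> 4 -> 3 -> 1 output, small random weights
rng = random.Random(0)
sizes = [3, 4, 3, 1]
params = {}
for l in range(1, 4):
    params[f"W{l}"] = [[rng.uniform(-0.5, 0.5) for _ in range(sizes[l - 1])]
                       for _ in range(sizes[l])]
    params[f"b{l}"] = [0.0] * sizes[l]

cache = forward(params, [0.5, -1.2, 2.0])
print(cache["a3"])   # a one-element list holding a probability in (0, 1)
```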

Layer 1 outputs from forward propagation:
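This block assumes the common convention in which $W^{[1]}$ has shape $(n^{[1]}, n_x)$ and both $x$ and $b^{[1]}$ are column vectors. The diagram may arrange things differently, for example as $W^{[1]T}x$. Under that convention, layer 1 computes:

$$
z^{[1]} = W^{[1]}x + b^{[1]}, \qquad a^{[1]} = \tanh\left(z^{[1]}\right)
$$

Both $z^{[1]}$ and $a^{[1]}$ have shape $(n^{[1]}, 1)$, and $\tanh$ is applied element-wise.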

Layer 2 outputs from forward propagation:

Now the output from layer 1 becomes the input for layer 2.
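This block assumes the common convention $z^{[l]} = W^{[l]}a^{[l-1]} + b^{[l]}$ with column vectors. Layer 2 then computes:

$$
z^{[2]} = W^{[2]}a^{[1]} + b^{[2]}, \qquad a^{[2]} = \tanh\left(z^{[2]}\right)
$$

For the product to be defined, $W^{[2]}$ must have shape $(n^{[2]}, n^{[1]})$, which gives $z^{[2]}$ and $a^{[2]}$ the shape $(n^{[2]}, 1)$.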

Layer 3 outputs from forward propagation:

Now the output from layer 2 becomes the input for layer 3.
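This block assumes the common convention $z^{[l]} = W^{[l]}a^{[l-1]} + b^{[l]}$ with column vectors. The single output unit uses the sigmoid, so the prediction $\hat{y}$ is a probability:

$$
z^{[3]} = W^{[3]}a^{[2]} + b^{[3]}, \qquad \hat{y} = a^{[3]} = \sigma\left(z^{[3]}\right) = \frac{1}{1 + e^{-z^{[3]}}}
$$

Here $W^{[3]}$ has shape $(1, n^{[2]})$, so $z^{[3]}$ and $a^{[3]}$ are scalars.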

Now that we have all the results from forward propagation, let’s move on to back-propagation.

Back-propagation refers to propagating the error from the output layer back to the earlier layers of the network, computing the gradient of the loss with respect to every parameter along the way. Gradient descent then uses those gradients to correct the parameters, reducing the loss and improving the overall accuracy and precision of the model. The loss function that we’ll be using in our case is Binary Cross Entropy. In real-world binary classification, the loss function used during training is usually Binary Cross Entropy Loss with logits, which takes the raw pre-sigmoid output and applies the sigmoid inside the loss for numerical stability. But for the sake of simplicity we will be using the plain Binary Cross Entropy Loss.
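Here is the "with logits" distinction in concrete form. The with-logits form takes the raw output $z$ instead of $\sigma(z)$ and folds the sigmoid into the loss. That combined expression can be rearranged as $\max(z, 0) - zy + \ln\left(1 + e^{-|z|}\right)$, which is algebraically equal to plain Binary Cross Entropy but never takes $\ln 0$ when the sigmoid saturates. The function names below are hypothetical. Frameworks ship their own version (PyTorch, for example, has `torch.nn.BCEWithLogitsLoss`).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(y_hat, y):
    """Plain Binary Cross Entropy on a probability y_hat in (0, 1)."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

def bce_with_logits(z, y):
    """The same loss computed from the logit z (the value before the sigmoid).

    Rearranged as max(z, 0) - z*y + log(1 + exp(-|z|)), so exp never
    receives a large positive argument and log never receives 0.
    """
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))

# For moderate logits both forms agree
print(bce(sigmoid(1.3), 1), bce_with_logits(1.3, 1))

# For a confident wrong prediction, sigmoid(40) rounds to exactly 1.0 in
# double precision, so plain BCE tries to take log(0) and fails ...
try:
    bce(sigmoid(40.0), 0)
except ValueError as err:
    print("plain BCE failed:", err)

# ... while the logits form still returns a finite loss of about 40
print(bce_with_logits(40.0, 0))
```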

Here is the equation that describes the Binary Cross Entropy Loss:
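In its standard form, with $y \in \{0, 1\}$ the true label and $\hat{y} = a^{[3]}$ the predicted probability:

$$
L(\hat{y}, y) = -\left[\, y \ln \hat{y} + (1 - y) \ln\left(1 - \hat{y}\right) \right]
$$

When $y = 1$ only the first term survives and the loss is $-\ln \hat{y}$. When $y = 0$ it is $-\ln\left(1 - \hat{y}\right)$. In both cases the loss grows without bound as the prediction approaches the wrong label.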

Something I struggled with during my first couple of weeks was mixing up the loss and cost functions. The loss function (represented using ‘L’) measures the error of our model on a single training example, while the cost function (represented using ‘J’) measures the average error of our model over a batch of training examples.

Since we assumed a batch size of 1 for the sake of simplicity, the loss and cost functions are the same.
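In symbols, for a batch of $m$ examples, $J = \frac{1}{m}\sum_{i=1}^{m} L\left(\hat{y}^{(i)}, y^{(i)}\right)$, and with $m = 1$ the sum has a single term. The sketch below uses made-up predictions and labels to show that relationship; the function names are hypothetical.

```python
import math

def bce_loss(y_hat, y):
    """Loss L: Binary Cross Entropy on a single training example."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

def cost(y_hats, ys):
    """Cost J: the average loss over a batch of examples."""
    return sum(bce_loss(p, t) for p, t in zip(y_hats, ys)) / len(ys)

# Batch of 1: J is exactly L for that single example
print(cost([0.8], [1]), bce_loss(0.8, 1))   # both about 0.2231

# Batch of 3 (made-up values): J is the mean of the three individual losses
batch_preds, batch_labels = [0.8, 0.3, 0.6], [1, 0, 1]
print(cost(batch_preds, batch_labels))      # about 0.3635
```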

Finding partial derivatives for back-propagation updates at layer 3
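This block assumes the forward equations $z^{[3]} = W^{[3]}a^{[2]} + b^{[3]}$ and $a^{[3]} = \sigma\left(z^{[3]}\right)$ with the Binary Cross Entropy loss. The chain rule then gives the following, written so that each gradient has the same shape as the quantity it is taken with respect to:

$$
\begin{aligned}
\frac{\partial L}{\partial a^{[3]}} &= -\frac{y}{a^{[3]}} + \frac{1 - y}{1 - a^{[3]}} \\
\frac{\partial a^{[3]}}{\partial z^{[3]}} &= a^{[3]}\left(1 - a^{[3]}\right) \\
\frac{\partial L}{\partial z^{[3]}} &= \frac{\partial L}{\partial a^{[3]}} \cdot \frac{\partial a^{[3]}}{\partial z^{[3]}} = -y\left(1 - a^{[3]}\right) + (1 - y)\,a^{[3]} = a^{[3]} - y \\
\frac{\partial L}{\partial W^{[3]}} &= \frac{\partial L}{\partial z^{[3]}} \left(a^{[2]}\right)^{T} \\
\frac{\partial L}{\partial b^{[3]}} &= \frac{\partial L}{\partial z^{[3]}}
\end{aligned}
$$

The cancellation in the third line is why the sigmoid and Binary Cross Entropy pair so well. The factor $a^{[3]}\left(1 - a^{[3]}\right)$ from the sigmoid's derivative cancels the denominators from the loss's derivative and leaves just $a^{[3]} - y$.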

Finding partial derivatives for back-propagation updates at layer 2
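This block assumes $z^{[2]} = W^{[2]}a^{[1]} + b^{[2]}$, $a^{[2]} = \tanh\left(z^{[2]}\right)$, and $z^{[3]} = W^{[3]}a^{[2]} + b^{[3]}$. The error flows back through $W^{[3]}$ and then through the $\tanh$ derivative, where $\odot$ denotes element-wise multiplication:

$$
\begin{aligned}
\frac{\partial L}{\partial a^{[2]}} &= \left(W^{[3]}\right)^{T} \frac{\partial L}{\partial z^{[3]}} \\
\frac{\partial L}{\partial z^{[2]}} &= \frac{\partial L}{\partial a^{[2]}} \odot \left(1 - a^{[2]} \odot a^{[2]}\right) \\
\frac{\partial L}{\partial W^{[2]}} &= \frac{\partial L}{\partial z^{[2]}} \left(a^{[1]}\right)^{T} \\
\frac{\partial L}{\partial b^{[2]}} &= \frac{\partial L}{\partial z^{[2]}}
\end{aligned}
$$

The element-wise product appears because $\tanh$ acts on each component separately, so its Jacobian is diagonal.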

Finding partial derivatives for back-propagation updates at layer 1
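This block assumes $z^{[1]} = W^{[1]}x + b^{[1]}$, $a^{[1]} = \tanh\left(z^{[1]}\right)$, and $z^{[2]} = W^{[2]}a^{[1]} + b^{[2]}$. Layer 1 follows the same pattern as layer 2, with the input $x$ in place of the previous layer's activation:

$$
\begin{aligned}
\frac{\partial L}{\partial a^{[1]}} &= \left(W^{[2]}\right)^{T} \frac{\partial L}{\partial z^{[2]}} \\
\frac{\partial L}{\partial z^{[1]}} &= \frac{\partial L}{\partial a^{[1]}} \odot \left(1 - a^{[1]} \odot a^{[1]}\right) \\
\frac{\partial L}{\partial W^{[1]}} &= \frac{\partial L}{\partial z^{[1]}}\, x^{T} \\
\frac{\partial L}{\partial b^{[1]}} &= \frac{\partial L}{\partial z^{[1]}}
\end{aligned}
$$

As a shape check, $\frac{\partial L}{\partial z^{[1]}}$ is $(n^{[1]}, 1)$ and $x^{T}$ is $(1, n_x)$, so $\frac{\partial L}{\partial W^{[1]}}$ is $(n^{[1]}, n_x)$, the same shape as $W^{[1]}$.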

Updates to the parameters

Now we have found all the partial derivatives required for updating the parameters across all layers. Using equation 4 in figure 4.3.6, 6 in figure 4.3.9, 4 in figure 4.4.12, 6 in figure 4.4.17, 4 in figure 4.5.11 and 6 in figure 4.5.15, we can perform parameter updates using the following equations:
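The update step itself is plain gradient descent with learning rate $\alpha$: $W^{[l]} := W^{[l]} - \alpha\,\frac{\partial L}{\partial W^{[l]}}$ and $b^{[l]} := b^{[l]} - \alpha\,\frac{\partial L}{\partial b^{[l]}}$ for $l = 1, 2, 3$. The hand-rolled sketch below ties everything together. It assumes $z^{[l]} = W^{[l]}a^{[l-1]} + b^{[l]}$, hypothetical sizes 3 → 4 → 3 → 1, a made-up training example, and a learning rate of 0.1. It runs the backward pass, checks one analytic gradient against a finite difference, and then takes a few update steps on that single example (batch size 1). This is an illustration, not how a framework would implement it.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    """Matrix (list of rows) times vector (flat list)."""
    return [sum(w * vi for w, vi in zip(row, v)) for row in W]

def forward(p, x):
    """z[l] = W[l] a[l-1] + b[l]; tanh in layers 1 and 2, sigmoid in layer 3."""
    cache = {"a0": x}
    for l, act in ((1, math.tanh), (2, math.tanh), (3, sigmoid)):
        z = [s + b for s, b in zip(matvec(p[f"W{l}"], cache[f"a{l-1}"]), p[f"b{l}"])]
        cache[f"z{l}"], cache[f"a{l}"] = z, [act(zi) for zi in z]
    return cache

def loss(p, x, y):
    """Binary Cross Entropy for a single example."""
    a3 = forward(p, x)["a3"][0]
    return -(y * math.log(a3) + (1 - y) * math.log(1 - a3))

def backward(p, cache, y):
    """Chain-rule gradients, each with the same shape as its parameter."""
    grads = {}
    dz = [cache["a3"][0] - y]                                   # dL/dz3 = a3 - y
    for l in (3, 2, 1):
        a_prev = cache[f"a{l-1}"]
        grads[f"W{l}"] = [[d * a for a in a_prev] for d in dz]  # dz (a_prev)^T
        grads[f"b{l}"] = list(dz)                               # dL/db = dz
        if l > 1:
            W = p[f"W{l}"]
            # dL/da_prev = W^T dz, then multiply element-wise by tanh' = 1 - a^2
            da = [sum(W[i][j] * dz[i] for i in range(len(dz))) for j in range(len(a_prev))]
            dz = [d * (1 - a * a) for d, a in zip(da, a_prev)]
    return grads

def update(p, grads, lr):
    """Gradient descent: parameter := parameter - lr * gradient."""
    for name in list(p):
        if name.startswith("W"):
            p[name] = [[w - lr * gw for w, gw in zip(row, grow)]
                       for row, grow in zip(p[name], grads[name])]
        else:
            p[name] = [b - lr * gb for b, gb in zip(p[name], grads[name])]

# Hypothetical setup: sizes 3 -> 4 -> 3 -> 1, one made-up example with label 1
rng = random.Random(0)
sizes = [3, 4, 3, 1]
p = {}
for l in range(1, 4):
    p[f"W{l}"] = [[rng.uniform(-0.5, 0.5) for _ in range(sizes[l - 1])]
                  for _ in range(sizes[l])]
    p[f"b{l}"] = [0.0] * sizes[l]
x, y = [0.5, -1.2, 2.0], 1

# Gradient check on one entry of dL/dW1 using a central difference
grads = backward(p, forward(p, x), y)
h, original = 1e-6, p["W1"][0][1]
p["W1"][0][1] = original + h
up = loss(p, x, y)
p["W1"][0][1] = original - h
down = loss(p, x, y)
p["W1"][0][1] = original
print(grads["W1"][0][1], (up - down) / (2 * h))   # the two should agree closely

# Fifty gradient-descent steps on the single example
before = loss(p, x, y)
for _ in range(50):
    update(p, backward(p, forward(p, x), y), lr=0.1)
print(before, loss(p, x, y))   # the loss should go down
```

If the two numbers from the gradient check disagree beyond roughly the sixth decimal place, something in the derivation or its implementation is off. This makes it a cheap way to catch a wrong sign or a missing transpose in a hand derivation.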

Phewww!🤯 That was one hell of a journey. Hopefully you didn’t jump straight to the conclusion… And if you did, I really don’t blame you 😜. This stuff is really hard, and you’ll never be asked to do such derivations in a job interview. So this is just to train and flex your Math muscle. But if you actually went through every step one by one, hats off buddy!🥳