This post reimagines the mechanics of Support Vector Machines (SVMs) through a story-driven metaphor of a village divided. Beyond the narrative, we dive into the intuition behind linear SVMs: how they construct a hyperplane that maximizes the geometric margin between two classes. We clarify the roles of support vectors, the functional margin, and the way the direction vector w governs the orientation and classification confidence. Whether you're a beginner or brushing up on fundamentals, this piece provides a conceptual bridge between storytelling and math.

Once upon a time, there was a village called Ascots, a peaceful place where two groups, the Orange Ascots and the Blue Ascots, lived happily. But one tragic day a fight broke out, and the disagreement sparked a tension that could not be resolved. The villagers could not relocate to a new place, bloodshed had to be avoided, and above all, peace had to be restored.

Thus, a decision was made by the village's elder, Ascot:

A boundary must be drawn: a fence that would separate the two groups as much as possible to avoid any future conflicts.

Not Just Distance, But Confidence

One question now troubled the elder:

How should this fence be designed, and where exactly should it be built?

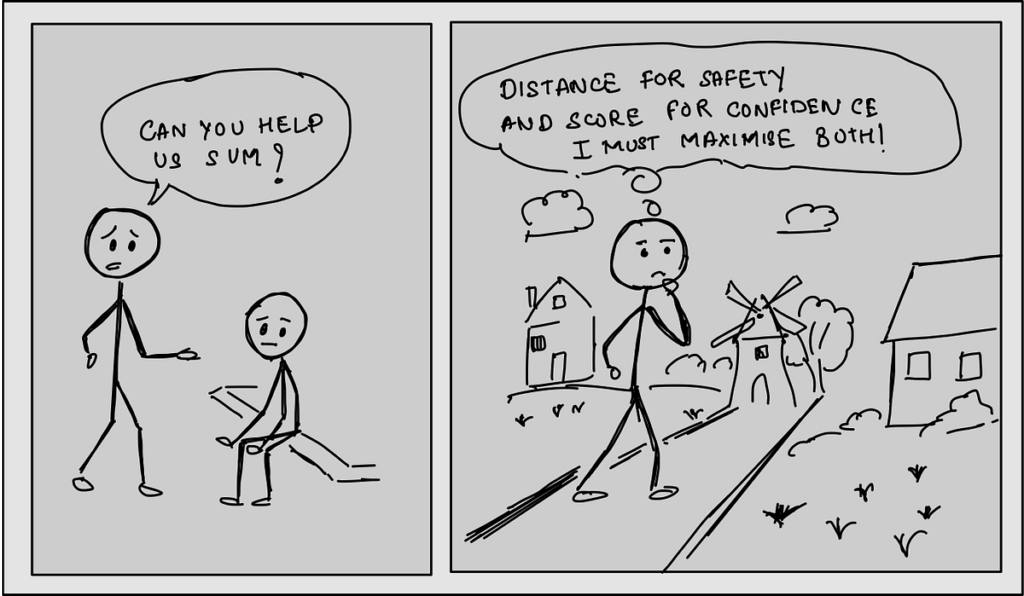

Unsure of the answer, he sought the help of a wise problem solver of the land: SVM. Upon accepting the challenge, SVM went for a walk in the village, thinking deeply:

“If I place a fence, how close will the nearest house be on either side?”

This thought led to the core idea, a simple concept called the geometric margin:

- the actual distance from the fence (hyperplane) to the nearest data point (house).

- and we want to maximize this margin ➕.
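To make the geometric margin concrete, here is a minimal sketch that measures the perpendicular distance from a few houses to a fence and takes the smallest one. The fence (`w`, `b`), the houses, and the helper names `geometric_distance` and `geometric_margin` are all made up for illustration; they are not part of the original example.

```python
import numpy as np

def geometric_distance(w, b, x):
    """Perpendicular distance from point x to the hyperplane w·x + b = 0."""
    w = np.asarray(w, dtype=float)
    x = np.asarray(x, dtype=float)
    return abs(np.dot(w, x) + b) / np.linalg.norm(w)

def geometric_margin(w, b, points):
    """Distance from the fence to its nearest house."""
    return min(geometric_distance(w, b, p) for p in points)

# Hypothetical fence and houses, chosen so the arithmetic is easy to follow
w, b = [3.0, 4.0], -10.0
houses = [[4.0, 2.0], [0.0, 0.0], [6.0, 8.0]]

print([float(geometric_distance(w, b, h)) for h in houses])  # [2.0, 2.0, 8.0]
print(float(geometric_margin(w, b, houses)))                  # 2.0
```

The nearest houses sit 2 units from the fence, so that is the margin this particular fence achieves. A better fence would be one for which this number is larger.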

But SVM wasn't only interested in distance. He also wanted to be confident in placing each villager's house on the correct side of the boundary.

- Positive ✅ if they were on the right side of the fence

- Negative ❌ if not

This is called the functional margin:

- it's not the physical distance but a confidence score: how well the data point (house) was classified and how far (in sign and scale) it is from the decision boundary.
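The phrase "in sign and scale" can be illustrated with a small sketch. It assumes the usual textbook definition of the functional margin, y · (w·x + b), and uses a hypothetical fence and house; the helper name `functional_margin` is also just for illustration.

```python
import numpy as np

def functional_margin(w, b, x, y):
    """Signed confidence score y * (w·x + b); positive means correctly classified."""
    return y * (np.dot(w, x) + b)

# Hypothetical fence and house (not from the post's dataset)
w, b = np.array([3.0, 4.0]), -10.0
house = np.array([4.0, 2.0])

right_side = functional_margin(w, b, house, +1)        # house labelled +1
wrong_side = functional_margin(w, b, house, -1)        # same house labelled -1
rescaled = functional_margin(2 * w, 2 * b, house, +1)  # same fence, doubled w and b

print(float(right_side), float(wrong_side), float(rescaled))  # 10.0 -10.0 20.0

# The physical distance is unchanged by the rescaling
print(float(right_side / np.linalg.norm(w)), float(rescaled / np.linalg.norm(2 * w)))  # 2.0 2.0
```

The sign flips when the label disagrees with the side the house is on, and the score doubles when w and b are doubled, even though the fence itself has not moved. That is why the functional margin is a confidence score rather than a distance.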

SVM realized that to build the best fence, he had to consider both the score and the distance. So he worked out a plan and visited the houses that matter the most: the ones closest to the boundary, those that would support his decision. He also called upon the elder to discuss his strategy.

The Support Vectors

Ascot the Elder wanted to understand how the fence was drawn, and so SVM explained:

- There could be multiple fences (decision boundaries) between the two groups.

- But the optimal one he found makes sure the distance between the fence and the nearest villagers' houses on either side of the line is maximal. That is why he met some of the villagers, calling them support vectors.

The Elder exclaimed:

“Ah, so the fence is placed based on the closest villagers to ensure the largest gap between the two groups, and they actually define where the fence goes!”
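For readers who want to meet the support vectors in code, here is a small sketch using the six sample houses from the scikit-learn example later in this post. It relies on the `support_vectors_` attribute and the `decision_function` method of a fitted `SVC`; the exact printed values depend on the solver's tolerance, so none are quoted here.

```python
import numpy as np
from sklearn import svm

# The six sample houses used in the scikit-learn example in this post
X = np.array([[2, 2], [2, 3], [3, 2],    # class +1 (Orange Ascots)
              [6, 5], [7, 8], [8, 6]])   # class -1 (Blue Ascots)
y = np.array([1, 1, 1, -1, -1, -1])

clf = svm.SVC(kernel='linear')
clf.fit(X, y)

# Houses that the fitted fence rests on
print(clf.support_vectors_)

# Their scores w·x + b should be close to +1 or -1, i.e. on the margin lines
print(clf.decision_function(clf.support_vectors_))
```

Moving any house that is not a support vector, without crossing the margin lines, should leave the fence where it is.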

The Equation Behind the Fence

SVM now explains the equation behind the functional margin.

- It represents a hyperplane in geometry: a flat surface (like a line in 2D) that separates the space
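For reference, here is the fence written in standard textbook notation. This is an assumption about the form the story has in mind, with x a house's coordinates, y its label (+1 or -1), w the direction vector and b the bias:

```latex
% The fence (hyperplane): every point x lying exactly on it satisfies
w \cdot x + b = 0

% Functional margin of house i (confidence score), and the geometric
% margin (physical distance) obtained by dividing out the length of w
\hat{\gamma}_i = y_i \, (w \cdot x_i + b),
\qquad
\gamma_i = \frac{\hat{\gamma}_i}{\lVert w \rVert}
```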

The fence isn't just a line:

- it has a direction (which way it's tilted) ↖️

- and a position (how high or low it sits) ↕️

This is what is called the functional margin. It doesn't just tell us ‘how far’; it tells us ‘how confident’ we are that each villager is on the correct side. It uses:

- the direction vector (w) ➡️.

- and a bias term (b) to describe the fence fully.

- The direction comes from w: it tells which way the fence tilts.

- The position is adjusted by b: it shifts the fence up or down.

“Imagine each villager's home is a point with two coordinates (x₁, x₂).

When I multiply that by a direction vector (w₁, w₂), I'm checking how well the house aligns with that direction.

So now, if the result is positive as per the hyperplane equation, they're to be placed on the positive class side; otherwise, on the negative class side.”

The Elder looked a bit confused 🤔

“Hmm… makes sense, but how do you decide which side is for the positive class and which is for the negative?”

SVM explained with a simple door-in-the-fence analogy: “Imagine this fence has a door in it, and the door swings open in the direction of w. The side it opens towards is the positive class. The side it swings away from is the negative class.”
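The door analogy boils down to checking the sign of w·x + b. Here is a minimal sketch with a hypothetical fence; the values of `w` and `b` and the helper name `side_of_fence` are invented for illustration.

```python
import numpy as np

def side_of_fence(w, b, x):
    """Return +1 if x lies on the side w points towards, -1 otherwise."""
    return 1 if np.dot(w, x) + b > 0 else -1

# Hypothetical fence: w points towards the lower-left of the village
w, b = np.array([-1.0, -1.0]), 8.0

print(side_of_fence(w, b, np.array([2.0, 2.0])))  # 1  (score = -4 + 8 = 4)
print(side_of_fence(w, b, np.array([7.0, 8.0])))  # -1 (score = -15 + 8 = -7)
```

In this sketch a house sitting exactly on the fence (score 0) is sent to the negative side; that tie-break is a choice made here, not something the story specifies.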

Let's try to understand the concepts so far by taking a small dataset and applying a linear SVM using scikit-learn.

import numpy as np

import matplotlib.pyplot as plt

from sklearn import svm

# let's take some sample points for two classes

X = np.array([

[2, 2], # Class +1

[2, 3],

[3, 2],

[6, 5], # Class -1

[7, 8],

[8, 6]

])

# define true labels

y = np.array([1, 1, 1, -1, -1, -1])

clf = svm.SVC(kernel='linear')

# fit to our sample data

clf.fit(X, y)

# extracting w and b

w = clf.coef_[0]

b = clf.intercept_[0]

Here we get w: [-0.33333333 -0.33333333], where w[0] corresponds to the x-axis (think of it as the 1st feature) and w[1] corresponds to the y-axis (2nd feature). Next, let's try plotting the points with the decision boundary and margin lines.

plt.scatter(X[y==1][:, 0], X[y==1][:, 1], color='orange', label='Class +1 (Orange Ascots)', edgecolors='k')

plt.scatter(X[y==-1][:, 0], X[y==-1][:, 1], color='blue', label='Class -1 (Blue Ascots)', edgecolors='k')

# for the decision boundary, a simple continuous line, let's take points from linspace

x_vals = np.linspace(0, 10, 100)

# compute decision boundary values (points where w·x + b = 0)

y_vals = -(w[0] * x_vals + b) / w[1]

# perpendicular distance from the decision boundary to the support vectors

margin = 1 / np.sqrt(np.sum(w ** 2))

# the margin lines are where w·x + b = +1 and w·x + b = -1

y_margin_pos = -(w[0] * x_vals + b - 1) / w[1]

y_margin_neg = -(w[0] * x_vals + b + 1) / w[1]

# now let's plot them

plt.plot(x_vals, y_vals, 'k-', label='Decision Boundary')

plt.plot(x_vals, y_margin_pos, 'k--', label='Margin (+1)')

plt.plot(x_vals, y_margin_neg, 'k--', label='Margin (-1)')

# legend and labels

plt.legend()

plt.title("Linear SVM with Decision Boundary and Margins")

plt.xlabel("Feature 1")

plt.ylabel("Feature 2")

plt.grid(True)

plt.show()

Now SVM wants us to try out a new point [2.5, 2.5] and see which class it gets assigned to.

# let's test a new point

test_point = np.array([2.5, 2.5])

# our decision boundary equation

svm_score = np.dot(w, test_point) + b

print(f"SVM score (w·x + b): {svm_score}")

This gives an SVM score of 1 (up to floating-point rounding). The score is positive, so the point gets a class output of +1.
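As a cross-check, the same score can be compared against what scikit-learn itself reports. This sketch refits the classifier so it runs on its own, then uses `decision_function` and `predict`, both standard methods on a fitted `SVC`. Exact digits depend on the solver tolerance, so they are not quoted.

```python
import numpy as np
from sklearn import svm

X = np.array([[2, 2], [2, 3], [3, 2], [6, 5], [7, 8], [8, 6]])
y = np.array([1, 1, 1, -1, -1, -1])
clf = svm.SVC(kernel='linear').fit(X, y)

test_point = np.array([[2.5, 2.5]])   # scikit-learn expects a 2D array of samples

manual_score = test_point @ clf.coef_[0] + clf.intercept_[0]
print(manual_score)                         # w·x + b computed by hand
print(clf.decision_function(test_point))    # same quantity from scikit-learn
print(clf.predict(test_point))              # predicted class label
```

The hand-computed score and `decision_function` should agree, and `predict` simply reports the class that matches the sign of that score.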

The Elder asks: “Oh, interesting, that makes sense now! But when you explained the direction of w with the door-in-the-fence analogy, what if w points in the opposite direction? Does that mean the door opens the other way, and the classes get switched?”

SVM replies: “Exactly! When w points in the opposite direction, the decision boundary stays the same, but the classification flips: what was once the positive side becomes negative, and vice versa.” The plot below shows how flipping w and b changes classification results, even though the geometric boundary doesn't move.
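The flip can also be checked numerically with a short sketch. It uses the sample houses and the w reported earlier; the intercept b = 8/3 is inferred from the score of 1 at [2.5, 2.5] rather than quoted from the post, so treat it as an assumption.

```python
import numpy as np

# The post's sample houses, with w as reported above; b = 8/3 is inferred
# from the score of 1 at [2.5, 2.5], so treat it as an assumption
X = np.array([[2, 2], [2, 3], [3, 2], [6, 5], [7, 8], [8, 6]], dtype=float)
w, b = np.array([-1 / 3, -1 / 3]), 8 / 3

original = np.sign(X @ w + b)       # labels with the door opening along w
flipped = np.sign(X @ (-w) + (-b))  # labels with the door opening along -w

print(original)  # [ 1.  1.  1. -1. -1. -1.]
print(flipped)   # [-1. -1. -1.  1.  1.  1.]

# A point on the fence (x1 + x2 = 8) scores zero under both orientations
on_fence = np.array([4.0, 4.0])
print(np.isclose(on_fence @ w + b, 0.0), np.isclose(on_fence @ (-w) + (-b), 0.0))  # True True
```

Every label changes sign, while the set of points that score exactly zero, the fence itself, is the same for (w, b) and (-w, -b).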

You can find the complete code for this post here: https://github.com/luaGeeko/MediumMLToons/blob/main/SVM_tutorial.ipynb.

That wraps up Part 1, where we laid the foundation by understanding the functional margin and the geometric margin: two crucial concepts that help us measure how well our Support Vector Machine (SVM) separates classes with maximum confidence. In the next part, we'll take this a step further and explore how to optimize the decision boundary, introduce the role of constraints in this optimization, and uncover the difference between hard and soft margins, which are essential ideas in SVMs.