“Slow thinking (System 2) is effortful, infrequent, logical, calculating, conscious.”

Daniel Kahneman, Thinking, Fast and Slow

Large Language Models (LLMs) such as ChatGPT often behave like what Daniel Kahneman, winner of the 2002 Nobel Memorial Prize in Economic Sciences, defines as System 1: fast, confident and almost effortless. You are right: there's also a System 2, a slower, more effortful mode of thinking.

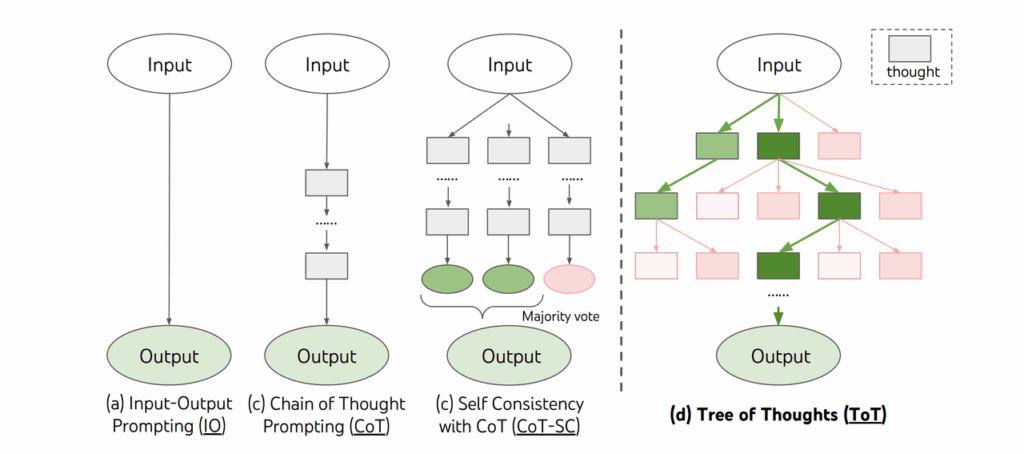

In recent years, researchers have been developing methods to bring System 2-style thinking into LLMs through better prompting strategies. Tree-of-Thought (ToT) prompting is one of the most prominent of these: it lets the model follow multiple reasoning paths, leading to potentially better decisions.

In this blog post, I'll do a case study where a ToT-powered LLM agent plays the classic game Minesweeper, not by guessing but by reasoning. Just like you would.

CoT and ToT

We'll start our story with Chain-of-Thought (CoT) prompting, a technique that guides LLMs to reason step by step. Look at the following example:

Q: I bought 10 apples at the supermarket. Then I gave 2 apples to the neighbor and another 2 to my pigs. Not enough apples for me now!! I then bought 5 more apples from a grocery but 1 was rotten. How many apples now?

A: You used to have 10 apples. You gave 2 apples to the neighbor and 2 to the pigs. Now you have 6 apples. Then you bought 5 more apples, so you had 11 apples. Finally, 1 of them was rotten, so you still have 10 good apples in the end.

As you can see, guided to think step by step, the LLM simulates better reasoning.

Tree-of-Thought (ToT) prompting expands on CoT. As the name suggests, it organizes reasoning like a tree where each node is a potential "thought" and branches are possible paths. It doesn't follow a linear process like CoT.

In practice, ToT creates a tree structure with branches, each with sub-steps leading to a final resolution. The model then evaluates each step, classifying each idea as "sure", "likely" or "impossible". Next, ToT goes through the problem space by applying search algorithms such as Breadth-First Search (BFS) and Depth-First Search (DFS) in order to choose the best paths.
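To make the search step concrete, here is a minimal sketch of a breadth-first Tree-of-Thought loop on a toy problem: pick numbers from `STEPS` until they sum to `TARGET`. In a real ToT setup an LLM would propose and rate the thoughts; the `expand` and `evaluate` functions below are hand-written stand-ins, and every name in the block is an assumption made for illustration.

```python
from typing import List, Optional, Tuple

State = Tuple[int, ...]          # a partial solution: the numbers picked so far
TARGET = 10                      # toy goal (assumption): reach this sum
STEPS = (2, 3, 5)                # toy moves (assumption): numbers we may add
RANK = {"sure": 2, "likely": 1, "impossible": 0}


def expand(state: State) -> List[State]:
    """Propose child thoughts. An LLM would do this in real ToT."""
    return [state + (step,) for step in STEPS]


def evaluate(state: State) -> str:
    """Classify a thought. An LLM would do this in real ToT."""
    remaining = TARGET - sum(state)
    if remaining < 0 or remaining == 1:   # overshoot, or a gap no step can fill
        return "impossible"
    return "sure" if remaining == 0 else "likely"


def tot_bfs(root: State, beam_width: int = 3, max_depth: int = 5) -> Optional[State]:
    """Breadth-first search that prunes 'impossible' thoughts level by level."""
    frontier = [root]
    for _ in range(max_depth):
        candidates = []
        for state in frontier:
            for child in expand(state):
                label = evaluate(child)
                if label == "impossible":
                    continue                      # prune this branch
                if label == "sure":
                    return child                  # goal reached
                candidates.append((RANK[label], child))
        if not candidates:
            return None
        # Keep only the best-rated thoughts for the next level.
        candidates.sort(key=lambda pair: pair[0], reverse=True)
        frontier = [child for _, child in candidates[:beam_width]]
    return None


result = tot_bfs(())
print(result, "sums to", sum(result))
```

Swapping the `frontier` list for a stack would turn the same skeleton into a depth-first search.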

Here is an example in an office building: I have an office with a maximum capacity of 20 people, but 28 people are coming this week. I have the following three branches as possible solutions:

- Branch 1: Move 8 people
    - Is there another room nearby? → Yes
    - Does it have space for 8 more people? → Yes
    - Can we move people without any administrative process? → Maybe
    - Evaluation: Promising!
- Branch 2: Expand the room
    - Can we make the room larger? → Maybe
    - Is this allowed under safety rules? → No
    - Can we request an exception in the building? → No
    - Evaluation: It won't work!
- Branch 3: Split the group into two
    - Can we divide these people into two groups? → Yes
    - Can we let them come on different days? → Maybe
    - Evaluation: Good potential!

As you can see, this process mimics how we solve hard problems: we don't think in a straight line. Instead, we explore, evaluate, and choose.
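The evaluation of the three office branches can be written down as a tiny scoring routine. This is only an illustrative sketch: the numeric values for "Yes", "Maybe" and "No", and the rule that a single "No" blocks a branch, are my own assumptions and not part of ToT itself.

```python
# Assumed mapping from answers to scores (not part of the ToT method itself).
ANSWER_SCORE = {"Yes": 1.0, "Maybe": 0.5, "No": 0.0}

# The three branches from the office example, with the answers given in the text.
branches = {
    "Branch 1: Move 8 people": ["Yes", "Yes", "Maybe"],
    "Branch 2: Expand the room": ["Maybe", "No", "No"],
    "Branch 3: Split the group into two": ["Yes", "Maybe"],
}


def score_branch(answers):
    """Average the answer scores; a single 'No' blocks the whole branch."""
    if "No" in answers:
        return 0.0
    return sum(ANSWER_SCORE[a] for a in answers) / len(answers)


# Rank branches from most to least promising.
ranked = sorted(branches, key=lambda name: score_branch(branches[name]), reverse=True)
for name in ranked:
    print(f"{score_branch(branches[name]):.2f}  {name}")
```

Under these assumptions the ranking agrees with the evaluations in the list: Branch 1 first, Branch 3 second, Branch 2 ruled out.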

Case study

Minesweeper

It's almost impossible, but in case you don't know, Minesweeper is a simple video game.

The board is divided into cells, with mines randomly distributed. The number on a cell shows the number of mines adjacent to it. You win when you open all the cells that do not contain a mine. However, if you hit a mine before that, the game is over and you lose.

We're applying ToT to Minesweeper, which requires some logic rules and reasoning under constraints.

We simulate the game with the following code:
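The rule "the number on a cell shows the number of mines adjacent to it" can be sketched in a few lines. The function name and the representation of mines as a set of `(row, col)` tuples are assumptions for this illustration, not the code used in the experiment.

```python
def count_adjacent_mines(mines, row, col, size):
    """Count mines in the up-to-8 neighbours of (row, col) on a size x size board."""
    count = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # skip the cell itself
            r, c = row + dr, col + dc
            # Only count neighbours that are on the board and hold a mine.
            if 0 <= r < size and 0 <= c < size and (r, c) in mines:
                count += 1
    return count


# Tiny 3x3 example with two mines.
example_mines = {(0, 0), (1, 1)}
print(count_adjacent_mines(example_mines, 0, 1, 3))
```

Because a cell has at most eight neighbours, the number shown on a cell is always between 0 and 8.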

    # --- Game Setup ---
    def generate_board():
        board = np.zeros((BOARD_SIZE, BOARD_SIZE), dtype=int)
        mines = set()
        while len(mines) < NUM_MINES:
            ...  # the listing is cut off here

You can see that we generate a BOARD_SIZE × BOARD_SIZE board with NUM_MINES mines.
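Since the listing above stops partway through the loop, here is a self-contained sketch of how such a board generator could look. It is not the original function: it uses plain lists instead of NumPy so it runs without dependencies, the name `generate_board_sketch`, the `seed` parameter and the `MINE = -1` encoding are all assumptions, and the values 8 and 10 are taken from the experiment described in the Play section.

```python
import random

BOARD_SIZE = 8   # the board is BOARD_SIZE x BOARD_SIZE
NUM_MINES = 10   # number of hidden mines
MINE = -1        # assumed marker for a mine cell


def generate_board_sketch(seed=None):
    """Place NUM_MINES distinct mines and fill in the neighbour counts."""
    rng = random.Random(seed)

    # Keep drawing random cells until we have NUM_MINES distinct positions.
    mines = set()
    while len(mines) < NUM_MINES:
        mines.add((rng.randrange(BOARD_SIZE), rng.randrange(BOARD_SIZE)))

    board = [[0] * BOARD_SIZE for _ in range(BOARD_SIZE)]
    for r, c in mines:
        board[r][c] = MINE
        # Each mine adds 1 to every neighbouring cell that is not itself a mine.
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < BOARD_SIZE and 0 <= nc < BOARD_SIZE
                        and (nr, nc) not in mines):
                    board[nr][nc] += 1
    return board, mines


board, mines = generate_board_sketch(seed=0)
for row in board:
    print(" ".join(f"{value:2d}" for value in row))
```

Using a set for `mines` is what makes the `while` loop necessary: duplicate draws are silently ignored, so the loop runs until exactly `NUM_MINES` distinct cells have been chosen.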

ToT LLM Agent

We are now ready to build our ToT LLM agent to solve the Minesweeper puzzle. First, we need to define a function that returns thoughts on the current board by using an LLM such as GPT-4o.

    def llm_generate_thoughts(board, revealed, flagged_mines, known_safe, k=3):
        # Text view of the board: numbers for revealed cells, '?' for hidden ones
        board_text = board_to_text(board, revealed)
        # Every hidden, unflagged cell is a legal move
        valid_moves = [[r, c] for r in range(BOARD_SIZE) for c in range(BOARD_SIZE)
                       if not revealed[r][c] and [r, c] not in flagged_mines]

        prompt = f"""
    You are playing an 8x8 Minesweeper game.
    - A number (0–8) shows how many adjacent mines a revealed cell has.
    - A '?' means the cell is hidden.
    - You have flagged these mines: {flagged_mines}
    - You know these cells are safe: {known_safe}
    - Your job is to choose ONE hidden cell that is least likely to contain a mine.
    - Use the following logic:
      - If a cell shows '1' and touches exactly one '?', that cell must be a mine.
      - If a cell shows '1' and touches one already flagged mine, other neighbors are safe.
      - Cells next to '0's are generally safe.

    You have the following board:
    {board_text}

    Here are all valid hidden cells you can choose from:
    {valid_moves}

    Step-by-step:
    1. List {k} possible cells to click next.
    2. For each, explain why it might be safe (based on adjacent numbers and known info).
    3. Rate each move from 0.0 to 1.0 as a safety score (1 = definitely safe).

    Return your answer in this exact JSON format:
    [
      {{ "cell": [row, col], "reason": "...", "score": 0.95 }},
      ...
    ]
    """
        try:
            response = client.chat.completions.create(
                model="gpt-4o",
                messages=[{"role": "user", "content": prompt}],
                temperature=0.3,
            )
            content = response.choices[0].message.content.strip()
            print("\n[THOUGHTS GENERATED]\n", content)
            # The reply is expected to be a JSON list of thoughts
            return json.loads(content)
        except Exception as e:
            print("[Error in LLM Generation]", e)
            return []

This might look a little long, but the essential part of the function is the prompt, which not only explains the rules of the game to the LLM (how to read the board, which moves are valid, etc.) but also asks for the reasoning behind each candidate move. Moreover, it tells the LLM how to assign a score to each possible move. These make up our branches of thoughts and, finally, our tree of thoughts. For example, we have a step-by-step guide:

1. List {k} possible cells to click next.

2. For each, explain why it might be safe (based on adjacent numbers and known info).

3. Rate each move from 0.0 to 1.0 as a safety score (1 = definitely safe).

These lines guide the LLM to propose several moves and to justify each of them based on the current state; it then has to evaluate each candidate move with a score ranging from 0 to 1. The agent will use these scores to find the best option.
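Two small helpers sit on either side of the LLM call: one turns the board into the text that goes into the prompt, the other turns the reply back into a list of scored thoughts. The post does not show either, so the versions below are hypothetical sketches. The names `board_to_text_sketch` and `parse_thoughts` and the exact text layout are assumptions; the parser additionally tolerates replies wrapped in a Markdown code fence, which a bare `json.loads` call would reject.

```python
import json
import re


def board_to_text_sketch(board, revealed):
    """Render revealed cells as their number and hidden cells as '?'."""
    lines = []
    for board_row, revealed_row in zip(board, revealed):
        cells = [str(value) if shown else "?"
                 for value, shown in zip(board_row, revealed_row)]
        lines.append(" ".join(cells))
    return "\n".join(lines)


def parse_thoughts(content):
    """Extract well-formed thoughts from the raw LLM reply; return [] on failure."""
    # Grab everything from the first '[' to the last ']' (skips any code fence).
    match = re.search(r"\[.*\]", content, flags=re.DOTALL)
    if not match:
        return []
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []

    thoughts = []
    for item in data:
        if not isinstance(item, dict):
            continue
        cell, score = item.get("cell"), item.get("score")
        cell_ok = (isinstance(cell, list) and len(cell) == 2
                   and all(isinstance(v, int) for v in cell))
        score_ok = isinstance(score, (int, float)) and 0.0 <= score <= 1.0
        if cell_ok and score_ok:
            thoughts.append(item)
    return thoughts


reply = '```json\n[{"cell": [1, 2], "reason": "next to a 0", "score": 0.95}, {"cell": [9], "score": 2}]\n```'
print(parse_thoughts(reply))
print(board_to_text_sketch([[0, 1], [1, -1]], [[True, True], [False, False]]))
```

Validating the shape of each thought at this point means the agent only ever sees entries with a two-element `cell` and a `score` between 0 and 1.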

We now build an LLM agent that uses these generated thoughts to make a "real" move. Consider the following code:

    def tot_llm_agent(board, revealed, flagged_mines, known_safe):
        thoughts = llm_generate_thoughts(board, revealed, flagged_mines, known_safe, k=5)
        if not thoughts:
            print("[ToT] Falling back to baseline agent due to no thoughts.")
            return baseline_agent(board, revealed)

        # Drop thoughts that point outside the board
        thoughts = [t for t in thoughts
                    if 0 <= t["cell"][0] < BOARD_SIZE and 0 <= t["cell"][1] < BOARD_SIZE]
        # Highest safety score first
        thoughts.sort(key=lambda t: t["score"], reverse=True)

        for t in thoughts:
            if t["score"] >= 0.9:
                move = tuple(t["cell"])
                print(f"[ToT] Confidently choosing {move} with score {t['score']}")
                return move

        print("[ToT] No high-confidence move found, using baseline.")
        return baseline_agent(board, revealed)

The agent first calls the LLM to suggest several possible next moves, each with a confidence score. If the LLM fails to return any thought, the agent falls back to a baseline agent defined earlier, which can only make random moves. If we're lucky enough to get several moves proposed by the LLM, the agent applies a first filter to exclude invalid moves, such as those that fall outside the board. It then sorts the valid thoughts by confidence score in descending order and returns the best move if its score is at least 0.9. If none of the suggestions reaches this threshold, it falls back to the baseline agent.
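The baseline agent itself is not shown in this post; the text only says that it makes random moves. A minimal sketch under that assumption could look like this. The name `baseline_agent_sketch` and the optional `rng` parameter are hypothetical, and `board` is accepted only to mirror the call signature used above.

```python
import random


def baseline_agent_sketch(board, revealed, rng=None):
    """Pick a random hidden cell, or return None if nothing is left to open."""
    rng = rng or random
    # Collect every cell that has not been revealed yet.
    hidden = [(r, c)
              for r, row in enumerate(revealed)
              for c, shown in enumerate(row)
              if not shown]
    if not hidden:
        return None
    return rng.choice(hidden)


# Only one hidden cell remains, so the random choice is forced.
print(baseline_agent_sketch(None, [[True, False], [True, True]]))
```

A baseline like this ignores the numbers on the board entirely, which is what makes it a useful fallback and a useful point of comparison for the ToT agent.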

Play

We will now try to play a standard 8×8 Minesweeper game with 10 hidden mines. We played 10 games and reached an accuracy of 100%! Please check the notebook for the full code.

Conclusion

ToT prompting gives LLMs such as GPT-4o more reasoning ability, going beyond fast and intuitive thinking. We have applied ToT to the Minesweeper game and got good results. This example shows that ToT can transform LLMs from chat assistants into sophisticated problem solvers with real logic and reasoning ability.