When I first started learning about machine learning, I was curious about how some of the simplest ideas could produce the most powerful results. Among these, one method that particularly stood out was Random Forests. At first, the name intrigued me. What exactly could be “random” about building a model? Could randomness really improve prediction accuracy?

Reading Leo Breiman’s 2001 paper, “Random Forests,” showed me why random forests became so popular and are still widely used today. They combine many simple models into a strong predictive system, turning randomness into their greatest strength. Let’s dive into Breiman’s landmark paper in simple terms and see why this model has mattered for decades.

Imagine asking not just one friend but a whole group of friends for advice, and then going with the majority opinion. That’s the basic idea behind Random Forests.

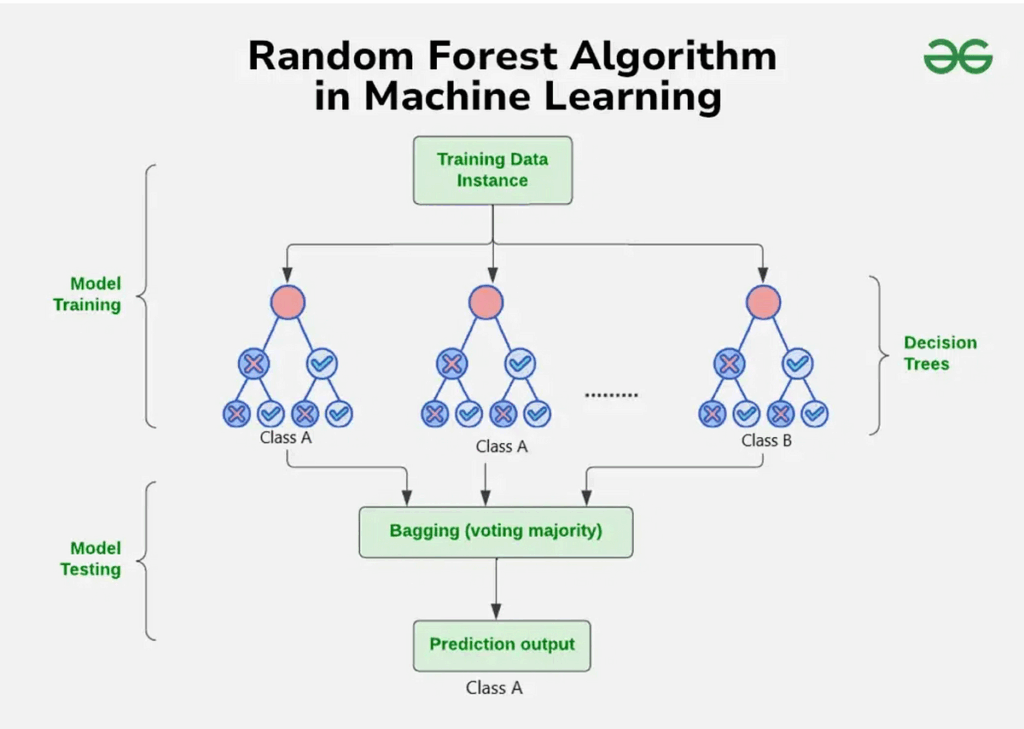

A Random Forest is a type of model that combines the power of many decision trees to make better predictions. Here’s how it works in plain terms:

Step 1: Decision Trees (The Building Blocks)

A decision tree is like a flowchart that asks a series of yes/no questions about the data (e.g., “Is the person’s age over 30?”) and eventually reaches a decision (like predicting whether someone will buy a product). But a single tree can make mistakes or become too focused on the training data.
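To make the flowchart idea concrete, here is a minimal sketch of the simplest possible tree: a depth-one tree (a “stump”) that asks a single yes/no question. It tries every “is feature > threshold?” question on the training rows and keeps the one with the fewest mistakes. The function names, the `[age, site_visits]` features, and the data are all hypothetical; real trees repeat this search recursively to grow many levels.

```python
from collections import Counter

def majority(labels):
    """Most common label in a list (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(X, y):
    """Fit a depth-1 decision tree: try every question "is row[f] > t?"
    and keep the one that misclassifies the fewest training rows."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            yes = [lab for row, lab in zip(X, y) if row[f] > t]
            no = [lab for row, lab in zip(X, y) if row[f] <= t]
            if not yes:  # threshold at the maximum value splits nothing off
                continue
            yes_label, no_label = majority(yes), majority(no)
            errors = sum(lab != yes_label for lab in yes) + sum(lab != no_label for lab in no)
            if best is None or errors < best[0]:
                best = (errors, f, t, yes_label, no_label)
    _, f, t, yes_label, no_label = best
    return lambda row: yes_label if row[f] > t else no_label

# Hypothetical training data: [age, site_visits] -> did the person buy?
X = [[22, 1], [25, 0], [35, 4], [45, 2], [52, 5], [28, 3]]
y = ["no", "no", "yes", "yes", "yes", "no"]
tree = fit_stump(X, y)  # learns the question "is age > 28?"
print(tree([30, 0]))  # → yes
```

Because the stump picks whatever threshold fits the training rows exactly, it hints at the problem the paragraph mentions: a tree can latch onto the quirks of its particular training data.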

Step 2: Make Many Trees (That’s the “Forest”)

Instead of using just one tree, Random Forests build lots of them, often hundreds. Each tree is trained on a different random sample of the data, drawn with replacement from the training set (a “bootstrap” sample). This is called bagging, short for bootstrap aggregating. It helps reduce errors by combining the opinions of many trees.
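A bootstrap sample is easy to draw: pick as many rows as the training set has, with replacement. Some rows get picked more than once and others not at all; on average about 1/e ≈ 36.8% of rows are left out of each sample. This sketch uses only Python’s `random` module, and the helper names are hypothetical.

```python
import random

def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices *with replacement*: one bootstrap sample."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]

def left_out_fraction(n_rows, rng):
    """Fraction of rows that a single bootstrap sample never picks."""
    picked = set(bootstrap_indices(n_rows, rng))
    return 1 - len(picked) / n_rows

rng = random.Random(42)
print(sorted(bootstrap_indices(10, rng)))  # some indices repeat, others are missing
print(round(left_out_fraction(100_000, rng), 3))  # close to 1/e ≈ 0.368
```

Each tree in the forest would be trained only on the rows listed in its own sample, which is why no two trees see quite the same data.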

Step 3: Add Randomness for Better Results

While each tree is being built, every split looks at only a random subset of the features (like just a few columns of your data at a time). This randomness makes the trees different from one another, which is a good thing, since it stops them from all making the same mistakes.
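The feature-sampling step can be sketched on its own: before each split, draw a fresh random subset of column indices, and let the tree search for its best question among only those columns. The subset size `m` defaulting to the square root of the number of features is a common rule of thumb, assumed here rather than taken from the paper; `split_candidates` is a hypothetical name.

```python
import math
import random

def split_candidates(n_features, rng, m=None):
    """Choose which features a single split may consider.
    m defaults to sqrt(n_features), a common rule of thumb (assumption)."""
    if m is None:
        m = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), m))

rng = random.Random(3)
# Every split in every tree draws a fresh subset, so trees grow apart.
for split in range(3):
    print(f"split {split} considers features {split_candidates(16, rng)}")
```

With 16 features, each split only ever compares 4 of them, so even two trees trained on similar data tend to ask different questions.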

Final Step: Majority Wins!

After all the trees are built, they vote on the prediction. For example, if most trees say “yes” and a few say “no”, the forest goes with “yes”. This voting system leads to better overall accuracy.
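The vote itself is a simple count. This minimal sketch takes one prediction per tree (the votes below are made up) and returns the winning label along with the share of trees that backed it; `forest_vote` is a hypothetical helper name.

```python
from collections import Counter

def forest_vote(tree_predictions):
    """Return the label most trees predicted, plus its share of the votes."""
    label, count = Counter(tree_predictions).most_common(1)[0]
    return label, count / len(tree_predictions)

votes = ["yes", "yes", "no", "yes", "no", "yes", "yes"]  # one vote per tree
print(forest_vote(votes))  # → ('yes', 0.7142857142857143)
```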

Leo Breiman’s paper didn’t just introduce the idea of Random Forests. It laid the foundation for why they work so well. Here are the main ideas, explained in simple terms:

1. Random Forests Don’t Overfit Easily

In machine learning, one common problem is overfitting: a model focuses too closely on the training data and doesn’t do well on new data. Breiman showed that as you add more trees to a Random Forest, its generalization error converges to a fixed limit (a consequence of the Strong Law of Large Numbers), so adding trees does not make the forest overfit, thanks to the randomness and averaging.
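In the paper’s notation, where $h(\mathbf{x}, \Theta)$ is a tree grown with random vector $\Theta$ and $PE^*$ is the forest’s generalization error, the convergence result reads roughly as follows: for almost surely all sequences $\Theta_1, \Theta_2, \dots$, as the number of trees grows,

$$
PE^* \;\longrightarrow\; P_{\mathbf{X},Y}\Big( P_\Theta\big(h(\mathbf{X},\Theta) = Y\big) \;-\; \max_{j \ne Y} P_\Theta\big(h(\mathbf{X},\Theta) = j\big) \;<\; 0 \Big)
$$

The limit depends on how the trees are randomly generated, not on how many of them there are, which is why piling on more trees does not push the error back up.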

2. The “Margin” Idea

The paper introduced the idea of a margin, which measures how far the share of trees voting for the correct answer exceeds the share voting for the most popular wrong answer. If many trees vote for the correct answer and only a few disagree, that’s a large margin and a sign of a confident model; a negative margin means the forest gets that example wrong. Larger margins usually mean better performance.
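For a single example, the margin can be computed directly from the trees’ votes. This sketch follows the definition above: the fraction of votes for the true label minus the largest fraction for any one wrong label. The function name and the animal labels are hypothetical.

```python
from collections import Counter

def margin(tree_votes, true_label):
    """Share of trees voting for the true label minus the largest share
    voting for any single wrong label. Negative means a misclassification."""
    counts = Counter(tree_votes)
    correct = counts[true_label]
    wrong = max((c for lab, c in counts.items() if lab != true_label), default=0)
    return (correct - wrong) / len(tree_votes)

votes = ["cat"] * 7 + ["dog"] * 2 + ["fox"]  # 10 trees
print(margin(votes, "cat"))  # → 0.5  (7/10 - 2/10: correct and fairly confident)
print(margin(votes, "dog"))  # → -0.5 (if the truth were "dog", the forest is wrong)
```

Working with integer counts and dividing once avoids floating-point noise such as `0.7 - 0.2` printing as `0.49999999999999994`.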

3. Strength and Correlation

Breiman explained that the forest works best when:

- The trees are strong (they make accurate predictions), and

- The trees are not too similar to one another (low correlation).

This balance is what gives Random Forests their power. Too much similarity? Then the forest doesn’t gain much from having many trees.
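Breiman makes this trade-off precise with an upper bound on the forest’s generalization error $PE^*$, where $s$ is the strength of the trees (roughly, their expected margin) and $\bar\rho$ is the mean correlation between them. The worked numbers below use $s = 0.8$, an illustrative value rather than one from the paper:

$$
PE^* \le \frac{\bar\rho\,(1 - s^2)}{s^2},
\qquad
s = 0.8:\quad \frac{1 - 0.64}{0.64} = 0.5625
\;\;\Rightarrow\;\;
PE^* \le 0.5625\,\bar\rho
$$

At the same strength, cutting the correlation from $\bar\rho = 0.4$ to $\bar\rho = 0.1$ shrinks the bound from $0.225$ to $0.05625$. The bound is usually loose; its value is in showing which way each quantity pushes the error.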

4. Out-of-Bag (OOB) Estimates

One of the coolest tricks in the paper is the use of out-of-bag samples. These are the data points left out of the bootstrap sample when training each tree. They can be used to test the model without needing a separate validation set. That means less work, and no data has to be held back from training!
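Here is a minimal sketch of an out-of-bag error estimate, assuming a toy setup: the “trees” are one-feature stumps on made-up 1-D data, standing in for the full trees with random feature selection that a real forest would grow. Each row is scored only by the trees whose bootstrap sample did not contain it, and the OOB error is the fraction of those rows the forest gets wrong. All names here are hypothetical.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Hypothetical base learner: a one-feature decision stump "is x > t?"."""
    best = None
    for t in sorted(set(xs)):
        above = [y for x, y in zip(xs, ys) if x > t]
        below = [y for x, y in zip(xs, ys) if x <= t]
        if not above:
            continue
        a_lab = Counter(above).most_common(1)[0][0]
        b_lab = Counter(below).most_common(1)[0][0]
        errors = sum(y != a_lab for y in above) + sum(y != b_lab for y in below)
        if best is None or errors < best[0]:
            best = (errors, t, a_lab, b_lab)
    if best is None:  # every x identical: always predict the majority label
        only = Counter(ys).most_common(1)[0][0]
        return lambda x: only
    _, t, a_lab, b_lab = best
    return lambda x: a_lab if x > t else b_lab

def oob_error(xs, ys, n_trees, rng):
    """Bag n_trees stumps; score each row using only the trees that never saw it."""
    n = len(xs)
    oob_votes = [Counter() for _ in range(n)]
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        tree = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        in_bag = set(idx)
        for i in range(n):
            if i not in in_bag:  # row i is out-of-bag for this tree
                oob_votes[i][tree(xs[i])] += 1
    scored = [i for i in range(n) if oob_votes[i]]  # rows that were OOB at least once
    wrong = sum(oob_votes[i].most_common(1)[0][0] != ys[i] for i in scored)
    return wrong / len(scored)

xs = list(range(20))
ys = ["no"] * 10 + ["yes"] * 10  # toy data: the label flips at x = 10
print(f"OOB error estimate: {oob_error(xs, ys, n_trees=50, rng=random.Random(0)):.2f}")
```

No rows were set aside: every row helped train some trees and was used to test the others.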

To test how well Random Forests actually perform, Breiman ran experiments on real-world datasets (like breast cancer detection, handwritten digits, and more) and compared them with other popular methods like AdaBoost and single decision trees.

Here’s what stood out from his findings:

1. Random Forests vs. AdaBoost

Even though AdaBoost was one of the top models at the time, Random Forests matched or even beat its accuracy in many cases. What’s even better? Random Forests handled noisy data (when labels are wrong or messy) much better than AdaBoost.

Example: On datasets where 5% of the labels were randomly changed (to simulate noise), AdaBoost’s accuracy dropped a lot, but Random Forests barely flinched.

2. More Trees = Better Performance (Up to a Point)

Breiman showed that adding more trees usually helps, but after a certain number the improvement becomes small. The key takeaway: more trees don’t hurt.

3. Out-of-Bag (OOB) Estimates Worked Well

Instead of setting aside part of the data for testing, Random Forests use the leftover data (the rows not used to train each tree) to evaluate themselves. These “out-of-bag” estimates were shown to be almost as good as using a full separate test set, saving both time and data.

- Very Accurate, Very Reliable: Random Forests often perform as well as or better than other models, even with little or no tuning. In many cases, you just grow enough trees and it works.

- Handles Noise: As seen in the experiments, even when the training data is messy (for example, some labels are wrong), Random Forests still manage to make good predictions.

- Built-in Testing: With out-of-bag estimates, you don’t need a separate validation set. Each training row is checked by the trees that didn’t see it during training.

- Works for Both Classification and Regression: Whether you’re predicting categories (like spam vs. not spam) or numbers (like house prices), Random Forests can handle both.
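The only change for regression is how the trees’ answers are combined: instead of a majority vote, the forest averages the numbers the trees predict. A minimal sketch, with a hypothetical helper name and made-up house-price predictions:

```python
def forest_regress(tree_predictions):
    """Regression forests average the trees' numeric outputs instead of voting."""
    return sum(tree_predictions) / len(tree_predictions)

# Hypothetical house-price predictions from four trees
print(forest_regress([310_000, 295_000, 320_000, 305_000]))  # → 307500.0
```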