The recent explosion in the capabilities of Large Language Models (LLMs) like ChatGPT, Claude, and Gemini is not due solely to architectural advances or sheer data scale. A critical but less-discussed component is the reward model used during the Reinforcement Learning from Human Feedback (RLHF) phase. This phase plays a pivotal role in aligning model outputs with human expectations and preferences.

This blog post explores reward models in LLMs and their role in the reinforcement learning process. We’ll cover their conceptual foundations, model architecture, training process, inference, evaluation methods, and practical applications. By the end, you’ll have a holistic understanding of how reward models act as the bridge between raw model generation and human-aligned performance.

Reward models are designed to assign scalar values (rewards) to an LLM’s outputs based on how well those outputs align with desired human responses. Supervised fine-tuning alone can only teach a model to imitate a fixed set of reference answers; reward models instead provide a more dynamic feedback mechanism.

Why Use Reward Models?

- Capturing preferences: Human preferences are nuanced and hard to encode explicitly.

- Dynamic supervision: Allows learning from comparative, implicit feedback rather than predefined labels.

- Optimization target: Converts human preferences into a trainable objective for reinforcement learning.

The reward model acts as a proxy for human judgment, allowing the language model to optimize for responses that are more helpful, appropriate, or accurate.

The RLHF pipeline generally consists of three stages:

1. Supervised Fine-Tuning (SFT):

- Start with a pretrained language model.

- Fine-tune it on labeled datasets (e.g., instruction-following data).

2. Reward Model Training:

- Collect multiple model responses to a prompt.

- Have human annotators rank these responses.

- Train the reward model to predict the rankings or preference scores.

3. Reinforcement Learning (usually with PPO):

- Use the reward model to provide the optimization objective.

- Generate candidate outputs.

- Fine-tune the language model using Proximal Policy Optimization (PPO).

Architecture Flow

Prompt → LLM (generate outputs) → Reward Model (score outputs) → RL Agent (update policy)
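The flow above can be sketched as a toy loop. This is a minimal illustration under heavy assumptions, not a real RLHF implementation: the "LLM" is a hypothetical softmax policy over three canned responses, the reward model is a hypothetical lookup table of scores, and the policy update is a plain REINFORCE-style gradient step standing in for PPO.

```python
import math
import random

# Hypothetical stand-ins: a fixed candidate set and a lookup-table "reward model".
CANDIDATES = [
    "The capital of France is Paris.",
    "France is a European country with a rich culture.",
    "I don't know.",
]
REWARD_TABLE = {CANDIDATES[0]: 1.0, CANDIDATES[1]: -0.5, CANDIDATES[2]: -1.0}


def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def generate(logits, rng):
    """LLM step: sample a response index from the current policy."""
    probs = softmax(logits)
    return rng.choices(range(len(CANDIDATES)), weights=probs, k=1)[0]


def score(response):
    """Reward model step: map a response to a scalar reward."""
    return REWARD_TABLE[response]


def update(logits, action, advantage, lr):
    """RL step: gradient of log pi(action) w.r.t. the logits is onehot(action) - probs."""
    probs = softmax(logits)
    return [
        l + lr * advantage * ((1.0 if i == action else 0.0) - p)
        for i, (l, p) in enumerate(zip(logits, probs))
    ]


def train(steps=500, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(CANDIDATES)
    baseline = 0.0  # running average reward, used to form the advantage
    for _ in range(steps):
        action = generate(logits, rng)
        reward = score(CANDIDATES[action])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        logits = update(logits, action, advantage, lr)
    return softmax(logits)


if __name__ == "__main__":
    for text, p in zip(CANDIDATES, train()):
        print(f"{p:.3f}  {text}")
```

After training, almost all probability mass sits on the response the (toy) reward model scores highest, which is exactly the pressure the real pipeline applies at much larger scale.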

A reward model typically shares the same backbone as the LLM, but with a small adaptation:

- Input: The concatenation of the prompt and a candidate response.

- Encoder: Transformer-based (usually a copy of the pretrained or SFT model).

- Head: The language-modeling head is replaced by a regression head (a single output unit) that maps a hidden state, typically that of the final token, to a scalar reward score.

Some variations include:

- Using a pairwise comparison loss instead of direct regression.

- Leveraging rank-based training to generalize better to unseen responses.
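The three parts above (concatenated input, encoder, scalar head) can be sketched in plain Python. This is a toy under stated assumptions: there is no real Transformer, the `embed_token` lookup and mean-pooling are hypothetical stand-ins for the encoder, and the head weights are random and untrained.

```python
import random

DIM = 8  # hidden size of the toy encoder (hypothetical)


def embed_token(token):
    """Hypothetical deterministic token embedding: a private RNG seeded with the token string."""
    rng = random.Random(token)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


class ToyRewardModel:
    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Head: one linear unit (DIM weights + bias) producing a single scalar.
        self.weights = [rng.uniform(-0.1, 0.1) for _ in range(DIM)]
        self.bias = 0.0

    def encode(self, prompt, response):
        # Input: the prompt and candidate response concatenated into one sequence.
        tokens = (prompt + " " + response).lower().split()
        vectors = [embed_token(t) for t in tokens]
        # Encoder stand-in: mean-pool token embeddings (a real reward model runs a Transformer here).
        return [sum(column) / len(vectors) for column in zip(*vectors)]

    def score(self, prompt, response):
        hidden = self.encode(prompt, response)
        return sum(w * h for w, h in zip(self.weights, hidden)) + self.bias


rm = ToyRewardModel()
prompt = "What is the capital of France?"
for response in ["The capital of France is Paris.", "France is a European country with a rich culture."]:
    print(f"{rm.score(prompt, response):+.4f}  {response}")
```

Until the head (and, in practice, the encoder) is trained on preference data, these scores are arbitrary; the point is only the shape of the computation: text in, one number out.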

Data Collection

- Human annotators are shown a prompt and multiple responses.

- They rank the responses based on helpfulness, correctness, and alignment.

- These rankings are converted into (prompt, response1, response2, label) tuples, where the label records which response was preferred.
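A ranking of k responses expands into k·(k−1)/2 pairwise tuples. The helper below is a hypothetical sketch of that conversion; the label convention (0 means response1 is preferred, 1 means response2 is) and the optional random swap to avoid always putting the winner first are assumptions for illustration.

```python
import random
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses, rng=None):
    """Expand a best-to-worst ranking into (prompt, response1, response2, label) tuples."""
    pairs = []
    for better, worse in combinations(ranked_responses, 2):
        # combinations() preserves input order, so `better` was ranked above `worse`.
        if rng is not None and rng.random() < 0.5:
            pairs.append((prompt, worse, better, 1))  # label 1: response2 preferred
        else:
            pairs.append((prompt, better, worse, 0))  # label 0: response1 preferred
    return pairs


ranked = ["A", "B", "C", "D"]  # best to worst
for pair in ranking_to_pairs("What is the capital of France?", ranked):
    print(pair)
```

Four ranked responses therefore yield six comparisons, which is one reason collecting full rankings is more label-efficient than collecting single pairs.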

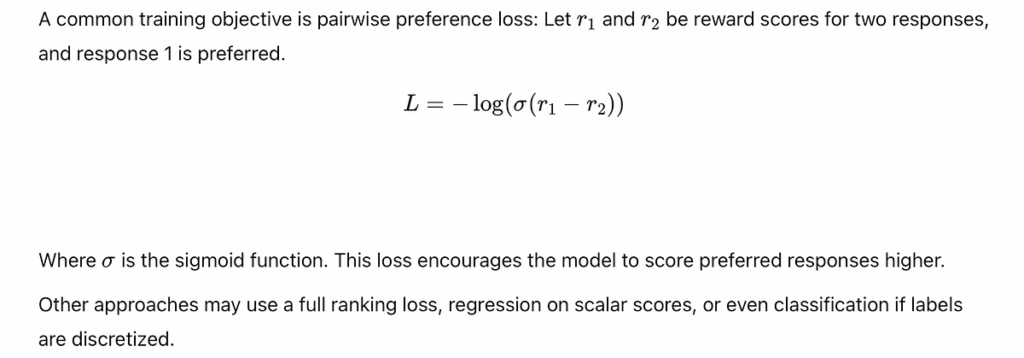

Training Objective
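A common way to write this objective, assumed here, is the pairwise (Bradley–Terry) loss. Let $r_\phi(x, y)$ be the reward model's scalar score for prompt $x$ and response $y$, let $y_w$ and $y_l$ be the preferred and rejected responses from the comparison dataset $\mathcal{D}$, and let $\sigma$ be the logistic sigmoid:

```latex
\mathcal{L}(\phi) = -\,\mathbb{E}_{(x,\,y_w,\,y_l)\sim\mathcal{D}}\Big[\log \sigma\big(r_\phi(x, y_w) - r_\phi(x, y_l)\big)\Big]
```

Minimizing it pushes the preferred response's score above the rejected one's. Only the score difference enters the loss, so rewards are defined up to an additive constant.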

Concrete Example

Let’s say we have the following prompt:

Prompt: “What is the capital of France?”

And two responses from the LLM:

- Response A: “The capital of France is Paris.”

- Response B: “France is a European country with a rich culture.”

Human annotators prefer Response A for its directness and accuracy, so the reward model is trained to assign Response A a higher score than Response B.
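Plugging the example into a pairwise loss makes the training signal concrete. The scores below are hypothetical reward-model outputs, and the loss is the standard Bradley–Terry form −log σ(chosen − rejected), computed as a numerically stable softplus.

```python
import math


def pairwise_loss(score_chosen, score_rejected):
    """Bradley-Terry loss -log(sigmoid(chosen - rejected)), computed as softplus(-margin)."""
    z = -(score_chosen - score_rejected)
    # softplus(z) = log(1 + e^z); the branch avoids overflow for large positive z.
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


# Hypothetical reward-model scores for the France example.
score_a = 2.0   # "The capital of France is Paris."
score_b = -0.5  # "France is a European country with a rich culture."

print(round(pairwise_loss(score_a, score_b), 4))  # → 0.0789 (ranking agrees with annotators)
print(round(pairwise_loss(score_b, score_a), 4))  # → 2.5789 (ranking flipped)
```

When the model already ranks A above B the loss is small; if it ranked them the other way, the much larger loss (and its gradient) would push the scores apart in the annotators' direction.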

Once trained, the reward model becomes the core component of the RL fine-tuning step.

Proximal Policy Optimization (PPO)

PPO is the most widely used algorithm in RLHF. It optimizes the LLM (the policy model) by:

- Generating a new response.

- Scoring it with the reward model.

- Calculating the advantage (how much better the response is than expected).

- Updating the model to increase the probability of better responses.

The key idea is to balance exploration and exploitation, improving responses without deviating too far from the initial supervised model. In practice, PPO limits the size of each policy update by clipping, and a KL-divergence penalty against the SFT model discourages the policy from drifting away from it.
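The update step can be sketched with two per-sample quantities that many RLHF setups use: the reward-model score with a KL penalty toward the reference (SFT) model folded in, and PPO's clipped surrogate objective. This is a minimal scalar sketch under stated assumptions: real implementations work per token over batches, estimate the advantage with a learned value function (often via GAE), and backpropagate through the log-probabilities. Here the helper names are hypothetical, the advantage is simply reward minus a made-up baseline, and all numbers are invented.

```python
import math


def kl_penalized_reward(rm_score, logprob_policy, logprob_ref, beta=0.1):
    """RM score minus beta * log(pi_theta(y|x) / pi_ref(y|x)) for one sampled response."""
    return rm_score - beta * (logprob_policy - logprob_ref)


def ppo_clipped_objective(logprob_new, logprob_old, advantage, clip_eps=0.2):
    """PPO clipped surrogate for one sample (to be maximized)."""
    ratio = math.exp(logprob_new - logprob_old)  # pi_new(y|x) / pi_old(y|x)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum removes any incentive to move the ratio outside [1-eps, 1+eps].
    return min(ratio * advantage, clipped_ratio * advantage)


# Hypothetical numbers for one sampled response.
reward = kl_penalized_reward(rm_score=1.2, logprob_policy=-3.0, logprob_ref=-3.5)
advantage = reward - 0.5  # 0.5 stands in for a value-function baseline
objective = ppo_clipped_objective(logprob_new=-2.6, logprob_old=-3.0, advantage=advantage)
print(reward, advantage, objective)  # the ratio exp(0.4) exceeds 1.2, so it is clipped
```

The KL term keeps the policy near the SFT model across training, while clipping bounds how far any single update can move it; the two address the same risk at different time scales.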

Mathematical Insight
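A common formulation of what the PPO stage optimizes, assumed here, is a KL-regularized objective. Let $\pi_\theta$ be the policy being trained, $\pi_{\mathrm{ref}}$ the frozen SFT model, $r_\phi$ the trained reward model, $\mathcal{D}$ the prompt distribution, and $\beta > 0$ the penalty strength:

```latex
\max_{\theta}\;
\mathbb{E}_{x\sim\mathcal{D},\; y\sim\pi_\theta(\cdot\mid x)}\big[r_\phi(x, y)\big]
\;-\; \beta\, \mathbb{E}_{x\sim\mathcal{D}}\Big[\mathrm{KL}\big(\pi_\theta(\cdot\mid x)\,\big\|\,\pi_{\mathrm{ref}}(\cdot\mid x)\big)\Big]
```

In practice the penalty is often applied per sample as $r_\phi(x, y) - \beta \log \frac{\pi_\theta(y \mid x)}{\pi_{\mathrm{ref}}(y \mid x)}$, whose expectation under $y \sim \pi_\theta(\cdot \mid x)$ recovers the KL term.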

Inference

During inference or sampling, the reward model is not usually used directly. However, it can:

- Rerank candidate outputs produced by beam search or sampling (for example, best-of-n selection).

- Support online learning by continuing RLHF on new queries.

- Act as a feedback mechanism in human-in-the-loop pipelines.
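Reranking with a reward model at inference time can be sketched as best-of-n selection: sample several candidates, score each, keep the top one. The `toy_reward` function below is a hypothetical stand-in for a trained reward model.

```python
def best_of_n(prompt, candidates, reward_fn):
    """Score every candidate once with the reward model and return the highest-scoring one."""
    scores = [reward_fn(prompt, c) for c in candidates]
    best_index = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best_index], scores


def toy_reward(prompt, response):
    # Hypothetical reward: favors mentioning the right answer, mildly penalizes length.
    return (1.0 if "Paris" in response else 0.0) - 0.01 * len(response.split())


candidates = [
    "The capital of France is Paris.",
    "France is a European country with a rich culture.",
    "I am not sure.",
]
best, scores = best_of_n("What is the capital of France?", candidates, toy_reward)
print(best)
```

Best-of-n leaves the LLM's weights untouched and trades extra inference compute (n generations plus n reward-model passes) for better outputs.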

How do we know if the reward model is doing its job?

Offline Metrics:

- Ranking accuracy: The fraction of held-out human preference pairs for which the reward model scores the preferred response higher.

- Spearman/Pearson correlation: Between predicted scores and human ratings.
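Both offline metrics can be computed in a few lines. This sketch assumes held-out pairs stored as hypothetical (prompt, chosen, rejected) triples and a `reward_fn(prompt, response)` callable; Spearman correlation is computed as the Pearson correlation of ranks, with tied values sharing an average rank.

```python
def ranking_accuracy(pairs, reward_fn):
    """Fraction of (prompt, chosen, rejected) pairs where the chosen response scores strictly higher."""
    correct = sum(
        1 for prompt, chosen, rejected in pairs
        if reward_fn(prompt, chosen) > reward_fn(prompt, rejected)
    )
    return correct / len(pairs)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def spearman(xs, ys):
    return pearson(average_ranks(xs), average_ranks(ys))


model_scores = [0.2, 1.5, -0.3, 0.9]  # hypothetical reward-model outputs
human_ratings = [2, 5, 1, 4]          # hypothetical 1-5 human ratings
print(spearman(model_scores, human_ratings))  # → 1.0 (identical orderings)
```

Spearman only checks whether the orderings agree, which suits reward models whose absolute scale is arbitrary; Pearson additionally assumes a linear relationship between scores and ratings.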

Online Metrics:

- Human evaluation: Asking people to judge generated responses.

- A/B testing: Comparing models trained with and without reward models.

Challenges

1. Data quality

Human preferences are subjective. Biases and inconsistencies in rankings can mislead training.

2. Reward hacking

Models may exploit quirks in the reward model, producing high-scoring but low-quality outputs. For example, if the reward model has learned to favor longer answers, the policy can game it with verbose filler.

3. Overfitting

Small datasets or narrow annotation scopes can lead to brittle reward models.

4. Scalability

Training and maintaining accurate reward models for diverse tasks is resource-intensive.
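A toy illustration of reward hacking: `flawed_reward` is a hypothetical reward model that has picked up a spurious bias toward longer answers. Choosing the highest-scoring candidate, as a policy optimizing this reward would tend to, favors padded filler over the correct, concise answer. The scoring rule and its weights are invented for illustration.

```python
def flawed_reward(response):
    """Hypothetical reward model with a spurious length bias."""
    correctness = 1.0 if "Paris" in response else 0.0
    length_bonus = 0.1 * len(response.split())  # the quirk a policy can exploit
    return correctness + length_bonus


candidates = [
    "The capital of France is Paris.",
    "France is a large and wonderful European country with a very long, rich, "
    "varied, and fascinating history, culture, cuisine, and landscape.",
]
for c in candidates:
    print(f"{flawed_reward(c):.2f}  {c[:45]}...")

best = max(candidates, key=flawed_reward)
print("Selected:", best[:45], "...")  # the padded, incorrect answer wins
```

The correct answer scores 1.6 while the 21-word non-answer scores 2.1. A KL penalty toward the reference model limits how far the policy can chase such quirks, but it does not remove the bias from the reward model itself.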

Applications

1. Chatbots and Assistants

Ensure conversational agents prioritize helpful, safe, and accurate responses.

2. Creative Writing and Generation

Guide story or poem generation to align with stylistic or thematic preferences.

3. Code Generation

Align outputs with syntactic correctness, security, or style guides.

4. Alignment Research

Used in frameworks like Constitutional AI or iterated distillation to steer model behavior.

5. Personalization

Reward models fine-tuned on individual preferences enable more tailored LLM behavior.

As LLMs become more embedded in human workflows, reward models are likely to grow more sophisticated:

- Multimodal Reward Models: Incorporate visual, audio, and tactile feedback.

- Neural Preference Learning: Learn from implicit feedback such as clicks or engagement.

- Online Fine-Tuning: Continuous improvement through real-time user interactions.

- Collaborative Reward Learning: Incorporate group-based feedback rather than individual rankings.

Reward models are an indispensable part of the LLM lifecycle. They transform subjective human judgment into a scalable training signal, allowing language models not only to generate plausible outputs but to do so in a way that aligns with human values. From improving safety to increasing relevance and utility, reward models are the unsung heroes powering the next generation of aligned AI.

Understanding how they work, from conceptual design through to application, offers valuable insight into how modern AI systems are trained to behave not just intelligently, but ethically and usefully as well.

Stay tuned for a follow-up where we’ll compare different reward modeling techniques and dive deeper into the architecture and engineering trade-offs.