Machine learning models often perform better when the input data is symmetric or close to a normal distribution. Here’s why skewness can be a problem:

- Biased Predictions: A few extreme values in the long "tail" can dominate training, especially with squared-error losses, pulling predictions away from typical cases.

- Assumption Violation: Linear regression’s standard inference (confidence intervals, p-values) assumes normally distributed residuals, and a heavily skewed target often produces skewed residuals.

- Outliers: Skewed distributions often contain extreme values, which can distort feature scaling, distance calculations, and fitted coefficients.
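A quick way to see how a long tail pulls a model is to compare the mean and the median of a skewed sample. The sketch below uses simulated exponential "income" data (the variable names are illustrative). A model trained with squared error is drawn toward the mean in the same way, while the median stays near the typical value.

```python
import numpy as np

# Simulated income-like data: exponential, so right-skewed (illustrative only)
np.random.seed(42)
incomes = np.random.exponential(scale=1000, size=1000)

mean_income = incomes.mean()          # sensitive to the long right tail
median_income = np.median(incomes)    # the "typical" value, robust to the tail

# How much does the top 1% alone move the mean?
top_1pct = incomes >= np.quantile(incomes, 0.99)
mean_without_top = incomes[~top_1pct].mean()

print(f"Mean:   {mean_income:.1f}")
print(f"Median: {median_income:.1f}")
print(f"Mean without the top 1%: {mean_without_top:.1f}")
```

For an exponential distribution the theoretical mean is about 1.44 times the median (1 / ln 2), so the gap you see here comes purely from the shape of the tail.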

To fix this, we preprocess the data by reducing skewness, commonly with transformations like logarithms, square roots, or power transformations. Don’t worry if that sounds complicated; we’ll see it in action soon!

Let’s get hands-on! We’ll use Python to calculate skewness and visualize it. For this tutorial, you’ll need the following libraries:

- numpy: For numerical operations.

- pandas: For data handling.

- scipy: To calculate skewness.

- matplotlib and seaborn: For plotting.

If you don’t have them installed, run this in your terminal:

```bash
pip install numpy pandas scipy matplotlib seaborn
```

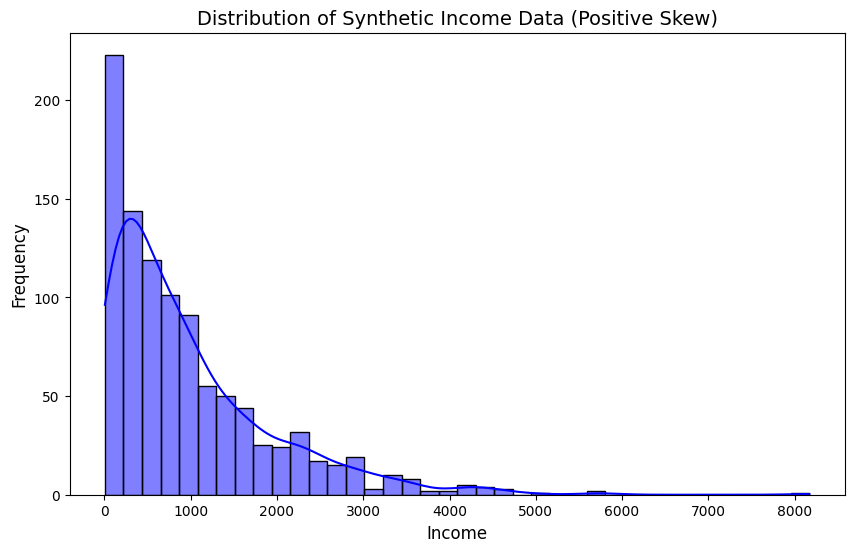

Let’s create a positively skewed dataset (simulating income) and analyze it.

```python
# Import libraries
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from scipy.stats import skew

# Set random seed for reproducibility
np.random.seed(42)

# Generate a positively skewed dataset (income-like)
data = np.random.exponential(scale=1000, size=1000)  # The exponential distribution is naturally right-skewed

# Convert to a pandas Series for easier handling
data_series = pd.Series(data)

# Calculate skewness
skewness = skew(data_series)
print(f"Skewness of the dataset: {skewness:.3f}")

# Plot the distribution
plt.figure(figsize=(10, 6))
sns.histplot(data_series, kde=True, color='blue')
plt.title('Distribution of Synthetic Income Data (Positive Skew)', fontsize=14)
plt.xlabel('Income', fontsize=12)
plt.ylabel('Frequency', fontsize=12)
plt.show()
```

Output Explanation:

- The skewness value will be positive, around 2 (the theoretical skewness of any exponential distribution is exactly 2), confirming a right-skewed distribution.

- The histogram will show a long tail on the right, typical of income data.
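It helps to know what `skew()` actually computes. By default `scipy.stats.skew` returns the Fisher-Pearson coefficient g1 = m3 / m2^1.5, where m2 and m3 are the second and third central moments (the averages of (x - mean)^2 and (x - mean)^3). pandas’ `Series.skew()` instead returns a bias-adjusted version, which matches `skew(..., bias=False)`. The sketch below recomputes both by hand, so you can see why scipy and pandas may print slightly different numbers for the same data.

```python
import numpy as np
import pandas as pd
from scipy.stats import skew

np.random.seed(42)
x = np.random.exponential(scale=1000, size=1000)
n = len(x)

# Central moments (divide by n, not n - 1)
deviations = x - x.mean()
m2 = np.mean(deviations**2)
m3 = np.mean(deviations**3)

# Fisher-Pearson coefficient: what scipy.stats.skew returns by default (bias=True)
g1 = m3 / m2**1.5

# Bias-adjusted coefficient: what pandas Series.skew() returns
G1 = g1 * np.sqrt(n * (n - 1)) / (n - 2)

print(f"manual g1: {g1:.4f}   scipy skew():           {skew(x):.4f}")
print(f"manual G1: {G1:.4f}   pandas Series.skew():   {pd.Series(x).skew():.4f}")
print(f"                    scipy skew(bias=False): {skew(x, bias=False):.4f}")
```

With 1,000 rows the adjustment factor is only about 1.0015, but it grows noticeably for small samples.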

One common way to reduce right skew is to apply a log transformation. It compresses large values and spreads out small ones, making the distribution more symmetric. Let’s try it!

```python
# Apply log transformation: log1p computes log(1 + x), which avoids log(0) errors
log_data = np.log1p(data_series)

# Calculate new skewness
log_skewness = skew(log_data)
print(f"Skewness after log transformation: {log_skewness:.3f}")

# Plot the transformed distribution
plt.figure(figsize=(10, 6))
sns.histplot(log_data, kde=True, color='green')
plt.title('Distribution After Log Transformation (Reduced Skew)', fontsize=14)
plt.xlabel('log(1 + Income)', fontsize=12)
plt.ylabel('Frequency', fontsize=12)
plt.show()
```

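Output Explanation:

- The skewness will shrink in magnitude, from about +2 to roughly -1. For exponential data the sign also flips: the log compresses the right tail so strongly that a shorter left tail appears.

- The histogram will look more compact and more symmetric than before, though not perfectly bell-shaped. The log works best on data that is roughly log-normal, which many real income and price columns approximately are.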
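Log is not the only option, and it is not always the best one. The sketch below applies log, square root, and Box-Cox (`scipy.stats.boxcox`, which picks its λ by maximum likelihood) to the same kind of exponential data and prints the skewness after each. For exponential data specifically, theory says log X follows a Gumbel-type distribution with skewness of about -1.14, and √X has skewness of about +0.63, so a negative log result is expected here.

```python
import numpy as np
from scipy import stats

np.random.seed(42)
data = np.random.exponential(scale=1000, size=1000)  # right-skewed, strictly positive

log_data = np.log1p(data)                      # log(1 + x)
sqrt_data = np.sqrt(data)                      # a milder compression than log
boxcox_data, best_lambda = stats.boxcox(data)  # requires strictly positive input

results = {
    "original": stats.skew(data),
    "log1p": stats.skew(log_data),
    "sqrt": stats.skew(sqrt_data),
    f"box-cox (lambda={best_lambda:.2f})": stats.skew(boxcox_data),
}
for name, value in results.items():
    print(f"{name:<24} skewness = {value:+.3f}")
```

The exact numbers depend on the sample; the point is to measure each candidate rather than assume the log is best.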

Imagine you’re building a model to predict house prices. The "price" column is often positively skewed because a few houses are extremely expensive. If you feed this skewed target directly into a linear regression model, those few expensive houses can dominate the fit and the predictions for typical homes suffer. Applying a log transformation (as we did above) makes the target more symmetric, which often improves a linear model’s fit.
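Here is a minimal NumPy-only sketch of that workflow. The house-size feature, the coefficients, and the noise level are all made up for illustration. The model is fit on `log1p(price)`, and predictions are converted back to price units with `np.expm1`, the inverse of `np.log1p`; skipping that last step leaves the predictions in log units.

```python
import numpy as np
from scipy.stats import skew

rng = np.random.default_rng(0)
n = 500

# Hypothetical data: log-price grows linearly with size, so price itself is right-skewed
size_sqft = rng.uniform(500, 4000, n)
log_price = 11.0 + 0.0004 * size_sqft + rng.normal(0, 0.3, n)
price = np.expm1(log_price)

print(f"Skewness of price:        {skew(price):.3f}")
print(f"Skewness of log1p(price): {skew(np.log1p(price)):.3f}")

# Ordinary least squares on the log-transformed target
X = np.column_stack([np.ones(n), size_sqft])  # intercept column + feature
coef, *_ = np.linalg.lstsq(X, np.log1p(price), rcond=None)

# Predict in log space, then invert the transform to get prices back
new_sizes = np.array([1000.0, 2500.0])
X_new = np.column_stack([np.ones(len(new_sizes)), new_sizes])
predicted_price = np.expm1(X_new @ coef)

print("Fitted coefficients (intercept, slope):", coef)
print("Predicted prices:", predicted_price.round(0))
```

One design note: back-transforming a prediction made in log space estimates something closer to the median price than the mean price, which is usually fine for a first model but worth keeping in mind.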

Here’s a quick checklist for dealing with skewness:

- Check Skewness: Use scipy.stats.skew() to measure it.

- Visualize: Plot histograms or KDEs to confirm.

- Transform: Pick a transformation based on the direction and strength of the skew. Log, square root, and Box-Cox are the usual choices for right-skewed data; Box-Cox requires strictly positive values.

- Validate: Re-check the skewness and the distribution after transforming.
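The checklist can be wrapped in a small helper. `reduce_skew` below is a hypothetical function, not part of any library, and its 0.5 threshold is a common rule of thumb rather than a standard. It uses Box-Cox when every value is positive and falls back to Yeo-Johnson (`scipy.stats.yeojohnson`), which also accepts zeros and negative values. Both estimate λ from the data, so they can reduce skew in either direction.

```python
import numpy as np
import pandas as pd
from scipy import stats


def reduce_skew(values, threshold=0.5):
    """Hypothetical helper: measure, transform if needed, and re-check skewness.

    Returns (transformed_series, skew_before, skew_after, method).
    """
    x = pd.Series(values, dtype=float).dropna()
    before = stats.skew(x)

    # Check: leave roughly symmetric columns alone (threshold is a rule of thumb)
    if abs(before) <= threshold:
        return x, before, before, "none"

    # Transform: pick a method that is valid for the data's range
    if (x > 0).all():
        transformed, _ = stats.boxcox(x)      # needs strictly positive values
        method = "box-cox"
    else:
        transformed, _ = stats.yeojohnson(x)  # also works with zeros and negatives
        method = "yeo-johnson"

    # Validate: re-measure skewness after the transform
    after = stats.skew(transformed)
    return pd.Series(transformed, index=x.index), before, after, method


np.random.seed(42)
incomes = np.random.exponential(scale=1000, size=1000)
columns = {
    "income": incomes,
    "income in $100s": np.floor(incomes / 100),  # contains zeros
    "normal": np.random.normal(size=1000),       # already symmetric
}
for name, column in columns.items():
    _, before, after, method = reduce_skew(column)
    print(f"{name:<16} {method:<12} skew {before:+.2f} -> {after:+.2f}")
```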

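Key takeaways:

- Skewness measures the asymmetry of your data.

- Positive skew has a long right tail; negative skew has a long left tail.

- Many ML models, particularly linear and distance-based ones, work better with roughly symmetric data, so reducing skewness is a key preprocessing step. Tree-based models are largely unaffected by monotonic transformations of their input features, because the transformations don’t change the ordering their splits rely on.

- Python libraries like scipy and seaborn make it easy to analyze and visualize skewness.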
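To see the sign convention in action, the sketch below builds a left-tailed dataset by mirroring simulated exponential data (think of exam scores where most students do well and a few do very badly; the numbers are made up). Mirroring flips the sign of the skewness exactly. One common way to handle negative skew, shown here as an illustration rather than the tutorial’s method, is to reflect the data (subtract it from max + 1) so the tail points right, then apply a right-skew transform such as the square root.

```python
import numpy as np
from scipy.stats import skew

np.random.seed(42)
# Simulated, illustrative data
right_tailed = np.random.exponential(scale=8, size=1000)  # long right tail
left_tailed = 100 - right_tailed                          # long left tail, like exam scores

print(f"Right-tailed skewness: {skew(right_tailed):+.3f}")
print(f"Left-tailed skewness:  {skew(left_tailed):+.3f}")

# Reflect so the tail points right, then apply a right-skew transform
reflected = left_tailed.max() + 1 - left_tailed
sqrt_reflected = np.sqrt(reflected)
print(f"Reflected skewness:    {skew(reflected):+.3f}")
print(f"After sqrt:            {skew(sqrt_reflected):+.3f}")
```

Keep in mind that the reflected scale runs backwards: a higher reflected value means a lower original score.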

Congratulations on making it through this tutorial from Codes With Pankaj Chouhan! Now that you understand skewness, try experimenting with other datasets (e.g., from Kaggle) and transformations like square root or Box-Cox. In the next tutorial on www.codeswithpankaj.com, we’ll explore how to handle missing data in machine learning, another essential skill for beginners.

Have questions or feedback? Drop a comment below or connect with me on my website. Happy coding!

Pankaj Chouhan