Traditionally, robotic tasks have been carried out by architecting a bespoke stack of modules, tuned to the particular needs and sensor suite available. This modular approach was an appealing starting point because it offered short-term organizational velocity improvements and interpretability at the interfaces between modules. However, it proved brittle, because of lossy interfaces and compounding errors, and unmaintainable, because of the custom and complex system solutions required for each new problem.

As AI has advanced and the capabilities of models have grown, robotic tasks no longer require custom stack solutions and can instead be designed end-to-end, learning the task directly from sensing. This is a win-win: it simplifies the system and removes entropy, while enabling the model to learn the best representation for the task without lossy interfaces.

This document serves to (i) define a generic robotic task framework that can be designed to tackle any task; (ii) define the available options for each subcomponent; and (iii) define specific instantiations for two different relevant robotic tasks: perception and policy learning.

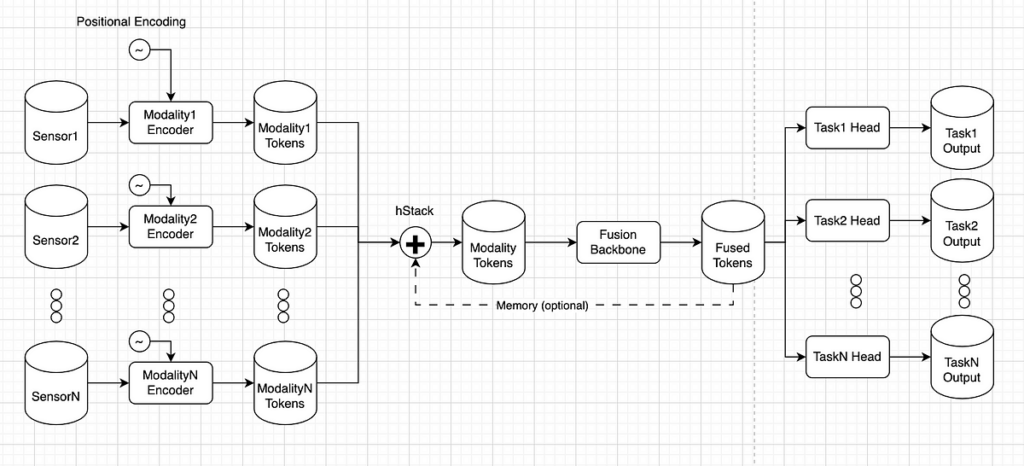

There are four major interfaces:

- Sensing inputs: These can come from any modality, including cameras, lidars, radars, pose / robot state, robot ID (for cross-embodiment problems), priors (such as maps), etc.

- Modality tokens: These are vectors in a high-dimensional space which “describe” a region in this modality. For example, if the token belongs to a camera, it may encode a specific patch of the image, contextualized by the neighboring tokens with which it has interacted (oh! I see a head and my neighbor sees a torso. Maybe a person is here…).

- Fused tokens: These are again vectors in a high-dimensional space which “describe” the scene in a way that is meaningful to the task heads, with contributions from all modalities. For example, if the task is to detect objects, the vector may encode some descriptor of blobs in space, their location and geometry.

- Task outputs: Self-explanatory, these are the outputs of the task heads. They are supervised by your data and should ideally match your training targets closely.
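To make the idea of a camera modality token concrete, here is a minimal NumPy sketch of ViT-style patch embedding: the image is cut into non-overlapping tiles and each flattened tile is linearly projected to a D-dimensional token. The projection weights are random placeholders for learned parameters, and the patch size (8) and width (64) are arbitrary assumptions. Note that these tokens are not yet contextualized: in a ViT, the neighbor interaction described above comes from the self-attention layers that follow (positional embeddings are also omitted here).

```python
import numpy as np

def image_to_patch_tokens(image, patch, w_proj):
    """Cut an (H, W, C) image into non-overlapping patch x patch tiles and project
    each flattened tile to a D-dim token (ViT-style patch embedding)."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    # (H, W, C) -> (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C) -> (N, p*p*C)
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4).reshape(-1, patch * patch * c)
    return tiles @ w_proj  # (N, D): one token per patch, in row-major patch order

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                     # stand-in camera frame
w_proj = rng.normal(size=(8 * 8 * 3, 64)) * 0.02    # placeholder for learned weights
tokens = image_to_patch_tokens(image, 8, w_proj)
print(tokens.shape)  # (16, 64)
```

A 32×32 frame with 8×8 patches yields a 4×4 grid, i.e. 16 tokens, each describing one region of the image.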

There are three major pieces: the modality-specific encoders, the fusion backbone, and the task heads.

- Modality Encoders: These take sensing inputs and compute modality tokens in a shared space describing the sensors. For modalities without much structure, such as robot pose or embodiment, we can use something like an MLP to project into the shared embedding space. For structured sensors such as lidars and cameras, we can consider ViTs or ResNets. For language, we can consider vanilla transformers.

- Fusion Backbone: This takes the hstacked modality-specific tokens in the shared space and computes fused tokens that are multi-modality aware. For smaller applications where we value latency and compute footprint over general world understanding (e.g. some narrow perception tasks), we can consider CNNs such as a UNet to fuse semantic and localized features. In cases where general world understanding is valuable (general-purpose robotics), we consider leveraging existing pretrained LLM / VLM backbones.

- Task Heads: This subcomponent takes the fused tokens and makes predictions for the desired task. For detection / tracking / segmentation tasks, think CNN detection heads or DETR-style architectures, etc. For discrete policy learning, think autoregressive LLM transformer heads; for continuous action regression, think MLPs or diffusion heads conditioned on the fused tokens.
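The three pieces can be wired together in a toy NumPy sketch. Everything here is an assumption for illustration: random weights stand in for trained parameters, the camera tokens are random placeholders for ViT/ResNet outputs, the 7-D pose and 6-D action sizes are arbitrary, and a single self-attention layer stands in for the fusion backbone (a UNet or pretrained VLM in practice).

```python
import numpy as np

rng = np.random.default_rng(0)
D = 32  # shared embedding width (assumed)

def mlp(x, w1, w2):
    # two-layer ReLU MLP, used to project low-structure inputs (e.g. pose)
    return np.maximum(x @ w1, 0.0) @ w2

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, wq, wk, wv):
    # single-head self-attention: every token attends to every other token,
    # regardless of which modality it came from
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return tokens + attn @ v  # residual connection

# Modality encoders (stand-ins): camera tokens are random placeholders for
# ViT/ResNet outputs; a 7-D robot pose is projected into the shared space by an MLP.
camera_tokens = rng.normal(size=(16, D))
pose = rng.normal(size=(1, 7))
pose_token = mlp(pose, rng.normal(size=(7, 64)) * 0.1, rng.normal(size=(64, D)) * 0.1)

# Stack the modality tokens along the token axis -> (17, D)
modality_tokens = np.concatenate([camera_tokens, pose_token], axis=0)

# Fusion backbone: one attention layer standing in for a UNet or pretrained VLM.
wq, wk, wv = (rng.normal(size=(D, D)) * 0.1 for _ in range(3))
fused_tokens = self_attention(modality_tokens, wq, wk, wv)

# Task head: mean-pool the fused tokens, then a linear map to a 6-D action.
w_head = rng.normal(size=(D, 6)) * 0.1
action = fused_tokens.mean(axis=0) @ w_head
print(modality_tokens.shape, fused_tokens.shape, action.shape)  # (17, 32) (17, 32) (6,)
```

Because attention connects every token to every other, the fused outputs at the camera positions change when the pose token changes; this is the sense in which the fused tokens are multi-modality aware.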

We look to the literature to apply this framework. I note two areas with which I am familiar, but the framework applies broadly.

3D Perception

Here we see the above framework being used for 3D perception tasks (object detection and segmentation).

- Modality Encoders: For the cameras, Swin-T (a type of ViT) is used; for lidar encoding, VoxelNet is used. Because a CNN-based backbone is used, we must place these embeddings in a shared spatial frame (this is not strictly necessary for attention, since attention connections do not depend on spatial location). To accomplish this, depth is predicted for the camera features and the features are scattered into a BEV representation.

- Fusion Backbone: An FPN is used to combine semantically rich and positionally rich features for performing arbitrary perception tasks.

- Task Heads: 3D object detection and segmentation heads. These are simple CNN-based modules supervised directly with human-labeled data.
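One common way to do the depth-and-scatter step is the lift-splat approach: predict a categorical depth distribution per pixel, take its outer product with the pixel's feature to "lift" it into a frustum of 3D points, then sum ("splat") those point features into BEV cells. The NumPy sketch below is heavily simplified under stated assumptions: a single pinhole camera at the origin facing forward, no extrinsics, camera height ignored (so all image rows collapse onto the same ground positions), and hypothetical parameter names (`fx`, `cx`, `cell_m`, `grid_size`). It is not the implementation used by any particular paper.

```python
import numpy as np

def lift_splat_bev(feats, depth_logits, depth_bins, fx, cx, grid_size, cell_m):
    """feats: (H, W, C) image features; depth_logits: (H, W, K) per-pixel depth scores;
    depth_bins: (K,) depths in metres. Returns a (grid_size, grid_size, C) BEV grid
    (rows = forward distance, columns = lateral offset centred on the camera)."""
    h, w, c = feats.shape
    k = len(depth_bins)
    # softmax over depth bins -> per-pixel categorical depth distribution
    p = np.exp(depth_logits - depth_logits.max(-1, keepdims=True))
    p /= p.sum(-1, keepdims=True)
    # "lift": outer product gives a feature for every (pixel, depth-bin) pair
    frustum = p[..., :, None] * feats[..., None, :]             # (H, W, K, C)
    # simplified pinhole geometry: forward = d, lateral = d * (u - cx) / fx
    u = np.arange(w)[None, :, None]                              # (1, W, 1)
    d = depth_bins[None, None, :]                                # (1, 1, K)
    forward = np.broadcast_to(d, (h, w, k))
    lateral = np.broadcast_to(d * (u - cx) / fx, (h, w, k))
    # "splat": map each point to a BEV cell and sum the features landing there
    row = np.floor(forward / cell_m).astype(int)
    col = np.floor(lateral / cell_m).astype(int) + grid_size // 2
    ok = (row >= 0) & (row < grid_size) & (col >= 0) & (col < grid_size)
    bev = np.zeros((grid_size, grid_size, c))
    np.add.at(bev, (row[ok], col[ok]), frustum[ok])  # unbuffered scatter-add
    return bev

rng = np.random.default_rng(0)
feats = rng.random((4, 8, 3))                 # stand-in camera feature map
depth_logits = rng.normal(size=(4, 8, 5))     # stand-in depth predictions
bev = lift_splat_bev(feats, depth_logits, np.linspace(1.0, 5.0, 5),
                     fx=8.0, cx=3.5, grid_size=16, cell_m=0.5)
print(bev.shape)  # (16, 16, 3)
```

Because each pixel's depth probabilities sum to one, the splat preserves the total feature mass whenever every point falls inside the grid; points outside it are simply dropped. `np.add.at` is used instead of `bev[row, col] += ...` so that multiple points landing in the same cell accumulate rather than overwrite each other.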

Other example papers that use this framework: BEVFormer.

Policy Learning

Here we see the above framework being used for policy learning (predicting robot actions to accomplish some task).

- Modality Encoders: Since we only have cameras here, we use a simple ViT per camera.

- Fusion Backbone: Because this application requires general world understanding, a pretrained VLM is used. Under the hood, this is a large transformer model (~3B params).

- Task Heads: To predict continuous action chunks, a diffusion model is used, conditioned on the fused embeddings and on the projected action chunks from earlier time steps.
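To illustrate how a diffusion head turns noise into a continuous action chunk, here is a textbook DDPM ancestral sampler in NumPy. The schedule (1000 steps, linear betas from 1e-4 to 0.02, the setting from the original DDPM work) and the 8-step, 7-DoF chunk size are assumptions; the model described above may use a different schedule or diffusion variant. The denoiser is a hypothetical oracle that already knows the clean chunk and returns the exact noise; a real head would be a network conditioned on the fused embeddings (and on the projected action chunks), trained to predict that noise.

```python
import numpy as np

T = 1000                                  # diffusion steps (assumed)
betas = np.linspace(1e-4, 0.02, T)        # linear noise schedule (assumed)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def sample_action_chunk(denoiser, cond, horizon, act_dim, rng):
    """DDPM ancestral sampling of a (horizon, act_dim) action chunk given `cond`."""
    x = rng.normal(size=(horizon, act_dim))       # x_T: pure Gaussian noise
    for t in reversed(range(T)):
        eps_hat = denoiser(x, t, cond)            # predicted noise at step t
        # mean of x_{t-1} given x_t and the noise estimate
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.normal(size=x.shape)
        else:
            x = mean                              # no noise on the final step
    return x

def make_oracle_denoiser(x0):
    """Hypothetical stand-in for a trained eps_theta(x_t, t, cond): it knows the
    clean chunk x0 and returns the exact noise, ignoring `cond`."""
    def denoiser(x_t, t, cond):
        ab = alpha_bars[t]
        return (x_t - np.sqrt(ab) * x0) / np.sqrt(1.0 - ab)
    return denoiser

rng = np.random.default_rng(0)
fused = rng.normal(size=(10, 16))               # stand-in fused tokens from the VLM
w = rng.normal(size=(16, 8 * 7)) * 0.1          # hypothetical map to an 8-step, 7-DoF chunk
target = np.tanh(fused.mean(axis=0) @ w).reshape(8, 7)
chunk = sample_action_chunk(make_oracle_denoiser(target), fused, 8, 7, rng)
print(chunk.shape)  # (8, 7)
```

With the oracle, the final step recovers the target chunk up to floating-point error, which makes this a convenient sanity check on the sampler's update equations before swapping in a learned, fused-token-conditioned denoiser.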

Other papers that use a similar framework: Unified vision action.