In the world of AI language models, bigger isn’t always better. Small Language Models (SLMs) are language models with far fewer parameters (typically tens of millions to a few billion) than the headline-grabbing GPT-4 or other Large Language Models (LLMs). Unlike massive LLMs trained on trillion-token datasets to be general-purpose problem-solvers, SLMs are often designed for specific tasks or domains. This focus makes them lightweight, efficient, and cost-effective for targeted applications.

- Size and Speed: SLMs have fewer parameters, so they need less memory and compute power. They can often run on ordinary hardware (sometimes even on a laptop or smartphone) and return results with low latency. In contrast, LLMs like GPT-4 demand heavy GPU servers and can be slower because of their size.

- Efficiency and Cost: SLMs consume less energy and fewer resources, which translates to lower operating costs. Large models not only cost more to train, but each query to an LLM can also incur significant compute time or API fees. SLMs are cheaper to train, deploy, and run at scale.

- Deployment Flexibility: SLMs are easier to deploy across diverse environments. They can be embedded in edge devices, run offline, and integrate into products without massive infrastructure overhauls.

- Domain Expertise: An SLM can be fine-tuned on specific datasets to become a specialist in fields like medicine, legal documents, or food-related queries. This specialization improves accuracy in narrow domains, whereas general-purpose LLMs may struggle with context-specific vocabulary.

Models like DistilBERT and MiniLM are well-known SLMs: essentially distilled or compressed versions of larger models that retain strong performance on specific tasks with a fraction of the parameters. Newer models (e.g., Meta’s LLaMA 3B, Microsoft’s Phi-3 mini, Mistral 7B) show that even a few billion parameters can achieve impressive results.
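A rough way to see why parameter count matters for hardware is to estimate the memory needed just to hold the weights: parameters × bytes per parameter. The sketch below assumes approximate public parameter counts (about 66M for DistilBERT, 3.8B for Phi-3 mini, 7.3B for Mistral 7B) and ignores activations, caches, and runtime overhead, so real memory requirements are higher.

```python
# Approximate parameter counts (assumed; check each model card for exact figures)
MODELS = {
    "DistilBERT": 66e6,
    "Phi-3 mini": 3.8e9,
    "Mistral 7B": 7.3e9,
}

# Bytes needed to store one parameter at common numeric precisions
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.2f} GB" for p in ("fp16", "int4")
        )
        print(f"{name:<12} {row}")
```

By this estimate, Mistral 7B’s weights alone need about 14.6 GB at fp16, while DistilBERT needs about 0.13 GB, which helps explain why the smallest models run comfortably on a CPU or phone.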

To illustrate how SLMs can solve real-world problems, let’s look at a food delivery app scenario. Imagine a food app (like Uber Eats or DoorDash) where users typically enter specific keywords like “pizza” or “sushi” in the search bar. This traditional keyword-based search works well when users know exactly what they want, but it struggles with complex or vague queries.

A user types: “Tell me a delicacy near me.”

A traditional keyword search has no way to interpret “delicacy,” but an SLM-powered approach can infer the intent behind the question. An SLM fine-tuned for food queries can:

- Recognize that “delicacy near me” implies a request for a highly rated or locally popular dish.

- Use the user’s location data to find local specialties.

- Return meaningful recommendations like: “How about trying the famous Pune specialty Misal Pav at XYZ restaurant? It’s a highly rated local delicacy near you.”
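The location and rating steps above are often handled by the surrounding search system rather than by the language model itself. A minimal sketch of that last mile, assuming the SLM has already produced a relevance score for each candidate dish and that the catalog supplies hypothetical `lat`, `lon`, `rating`, and `is_local_specialty` fields. The ranking rule (keep nearby dishes, then prefer local specialties, relevance, and rating) is a hand-written heuristic for illustration, not any particular app’s actual logic.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    dish: str
    restaurant: str
    lat: float
    lon: float
    rating: float             # hypothetical 0-5 star rating
    is_local_specialty: bool  # hypothetical flag from catalog metadata
    relevance: float          # hypothetical SLM similarity score for the query


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def rank_delicacies(cands, user_lat, user_lon, max_km=5.0):
    """Drop far-away candidates, then sort by specialty flag, relevance, rating."""
    nearby = [c for c in cands if haversine_km(user_lat, user_lon, c.lat, c.lon) <= max_km]
    # True sorts above False when reverse=True, so local specialties come first
    return sorted(
        nearby,
        key=lambda c: (c.is_local_specialty, c.relevance, c.rating),
        reverse=True,
    )
```

In this sketch a nearby Misal Pav flagged as a local specialty would outrank a higher-rated pizza, and a dish in another city would be filtered out before ranking.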

| Feature | Traditional Search | SLM-Powered Search |
|---|---|---|
| Query Understanding | Relies on exact keyword matches | Understands user intent |
| Results | Limited if keywords don’t match the database | Dynamically suggests relevant items |
| User Experience | Requires precise input | Allows natural, conversational queries |
| Local Adaptation | Limited | Can recommend regional specialties |
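To make the comparison concrete, here is a deliberately naive keyword search over a small hypothetical menu. It returns an item only if a non-stopword query token appears in the item name, so “pizza” works, but “Tell me a delicacy near me” returns nothing even though the menu contains a fitting dish. The menu and stopword list are illustrative assumptions.

```python
import re

# Hypothetical menu for illustration
MENU = [
    "Misal Pav",
    "Margherita Pizza",
    "Salmon Sushi Platter",
    "Sugarcane juice with lemon and ginger",
]

# A tiny stopword list for illustration; real systems use larger ones
STOPWORDS = {"a", "an", "the", "me", "tell", "near", "for", "i", "want", "some"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_search(query: str, menu=MENU) -> list[str]:
    """Return menu items sharing at least one non-stopword token with the query."""
    terms = tokenize(query) - STOPWORDS
    return [item for item in menu if terms & tokenize(item)]


if __name__ == "__main__":
    print(keyword_search("pizza"))                       # ['Margherita Pizza']
    print(keyword_search("Tell me a delicacy near me"))  # []
```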

A real-world example: Swiggy improved its search by using a small language model to handle complex queries. When users searched for “healthy juice for summer,” the model suggested Sugarcane juice with lemon and ginger, a relevant result even though the search term didn’t directly match a menu item.

To power a smart food search, a lightweight transformer model like DistilBERT, MiniLM, or a Sentence-Transformers model (e.g., all-MiniLM-L6-v2) can be used. The process involves:

- Collecting Data: Use historical search logs and manually crafted query-to-item pairs.

- Fine-Tuning the Model: Train the model on relevant food data.

- Validation & Iteration: Test and refine the model based on its performance.

- Embeddings Index: Convert food items into numeric vectors and store them in an index.

- Query Processing: Convert user queries into embeddings using the SLM.

- Similarity Matching: Compare query embeddings with food item embeddings to find the closest matches.

- Results Assembly: Display the most relevant food items.
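For the validation step, one common way to score a search model is to hold out some query-to-item pairs and measure how often the correct item appears in the top k results (recall@k) and how high it ranks (mean reciprocal rank, MRR). A minimal sketch, assuming each held-out query has exactly one correct item and that the ranked lists come from whatever retrieval code is being evaluated; the function names are illustrative, not from a specific library.

```python
def recall_at_k(ranked: list[list[str]], gold: list[str], k: int) -> float:
    """Fraction of queries whose correct item appears in the top k results."""
    hits = sum(1 for results, target in zip(ranked, gold) if target in results[:k])
    return hits / len(gold)


def mean_reciprocal_rank(ranked: list[list[str]], gold: list[str]) -> float:
    """Average of 1/rank of the correct item (0 when it was not retrieved)."""
    total = 0.0
    for results, target in zip(ranked, gold):
        if target in results:
            total += 1.0 / (results.index(target) + 1)
    return total / len(gold)


if __name__ == "__main__":
    # Hypothetical ranked outputs for three held-out queries
    ranked = [
        ["Sugarcane juice with lemon and ginger", "Vegan Avocado Salad"],
        ["Margherita Pizza", "Paneer Lababdar (Punjabi curry)"],
        ["Vegan Avocado Salad", "Margherita Pizza"],
    ]
    gold = [
        "Sugarcane juice with lemon and ginger",
        "Paneer Lababdar (Punjabi curry)",
        "Sugarcane juice with lemon and ginger",
    ]
    print(recall_at_k(ranked, gold, k=1))
    print(mean_reciprocal_rank(ranked, gold))  # 0.5
```

Tracking both numbers across fine-tuning runs shows whether a change moves the right dish into the top results or merely nudges it up a few positions.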

Sample Python Code for Query Matching

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Load a pre-trained small language model for sentence embeddings
model_name = "sentence-transformers/all-MiniLM-L6-v2"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name)
model.eval()  # inference mode (disables dropout)


# Function to get a sentence embedding from the model
def get_embedding(text):
    tokens = tokenizer(text, return_tensors="pt")
    with torch.no_grad():  # no gradients needed for inference
        outputs = model(**tokens)
    # Mean-pool the token embeddings to get a single sentence embedding
    # (a single unpadded input needs no attention-mask weighting)
    return outputs.last_hidden_state.mean(dim=1)


# Example list of food item names (in practice these could be dish
# descriptions or combined "dish @ restaurant" strings)
food_items = [
    "Sugarcane juice with lemon and ginger",
    "Paneer Lababdar (Punjabi curry)",
    "Margherita Pizza",
    "Vegan Avocado Salad",
]

# Precompute item embeddings
item_embeddings = [get_embedding(item) for item in food_items]

# Incoming user query
query = "healthy juice for summer"
query_emb = get_embedding(query)

# Compute cosine similarities and find the best match
similarities = torch.cat(
    [torch.cosine_similarity(query_emb, item_emb, dim=1) for item_emb in item_embeddings]
)
best_match_index = torch.argmax(similarities).item()

print(f"User query: '{query}' -> Top match: {food_items[best_match_index]}")
```
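The sample code above returns only the single best match and compares items one at a time. For a larger menu, a common pattern is to L2-normalize all item embeddings once, stack them into a matrix, and score every item with one matrix-vector product, since the cosine similarity of unit vectors is just their dot product. The sketch below uses NumPy with small hand-made 3-dimensional vectors as stand-ins for real all-MiniLM-L6-v2 embeddings (which are 384-dimensional); `normalize` and `top_k` are hypothetical helpers, not part of any library.

```python
import numpy as np


def normalize(vectors: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products equal cosine similarity."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.clip(norms, 1e-12, None)  # guard against zero rows


def top_k(query_vec: np.ndarray, item_matrix: np.ndarray, k: int = 3):
    """Return (indices, scores) of the k items most similar to the query.

    item_matrix must already be row-normalized.
    """
    q = query_vec / max(np.linalg.norm(query_vec), 1e-12)
    scores = item_matrix @ q           # one cosine score per item
    k = min(k, len(scores))
    idx = np.argsort(-scores)[:k]      # highest scores first
    return idx, scores[idx]


if __name__ == "__main__":
    food_items = ["Sugarcane juice", "Paneer Lababdar", "Margherita Pizza", "Vegan Avocado Salad"]
    # Stand-in embeddings; in practice these would come from get_embedding()
    item_matrix = normalize(np.array([
        [0.9, 0.1, 0.0],
        [0.0, 0.8, 0.6],
        [0.1, 0.9, 0.1],
        [0.6, 0.2, 0.7],
    ]))
    query = np.array([1.0, 0.0, 0.2])
    for i, s in zip(*top_k(query, item_matrix, k=2)):
        print(f"{food_items[i]}: {s:.3f}")
```

Normalizing the item matrix once at indexing time keeps per-query work to a single multiplication; for very large catalogs, a dedicated vector index would typically replace the full `argsort`.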

| Cost Factor | SLM | LLM |
|---|---|---|
| Infrastructure | Can run on CPU | Requires GPUs |
| Query Cost | | |

Using an SLM for search instead of an LLM can reduce costs by over 100x, making it viable for startups and high-volume applications.
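The actual saving depends on traffic and unit prices, so it is worth recomputing with your own numbers. A back-of-the-envelope sketch follows; every price and the query volume are hypothetical placeholders chosen only to show the arithmetic, not real quotes for any model or provider.

```python
def monthly_cost(queries_per_day: int, cost_per_1k_queries: float, days: int = 30) -> float:
    """Total serving cost for a month, given a price per 1,000 queries."""
    return queries_per_day * days * cost_per_1k_queries / 1000


# Hypothetical placeholders: substitute your own measured or quoted prices
QUERIES_PER_DAY = 1_000_000
SLM_COST_PER_1K = 0.01   # hypothetical amortized CPU inference cost, in dollars
LLM_COST_PER_1K = 2.00   # hypothetical hosted LLM API cost, in dollars

slm = monthly_cost(QUERIES_PER_DAY, SLM_COST_PER_1K)
llm = monthly_cost(QUERIES_PER_DAY, LLM_COST_PER_1K)
print(f"SLM: ${slm:,.0f}/month, LLM: ${llm:,.0f}/month, ratio: {llm / slm:.0f}x")
```

Because cost scales linearly with volume in this model, the ratio between the two options is simply the ratio of the per-query prices; the absolute gap is what grows with traffic.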

- E-commerce: Product search and recommendations.

- Customer Support: Chatbots trained on FAQs.

- Local Guides: Personalized travel and restaurant recommendations.

- Legal & Medical: Industry-specific document analysis.

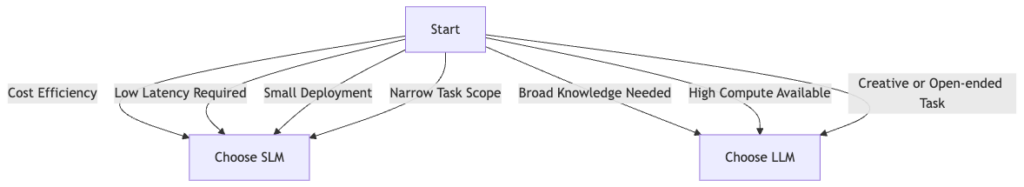

Use an SLM when:

- The domain is well-defined (e.g., food search, customer support, product recommendations).

- Cost and latency matter (e.g., real-time responses, budget-sensitive applications).

- Deployment flexibility and data privacy are priorities.

Use an LLM when:

- The task requires broad, open-ended reasoning.

- The budget allows for high compute costs.

- Task-specific training data is limited, so you must rely on the model’s built-in knowledge.

SLMs are proving that bigger isn’t always better. For many real-world applications, a small model is the smarter choice, delivering efficiency, cost savings, and domain expertise without heavyweight infrastructure. Businesses looking to adopt AI without breaking the bank should consider the power of small but mighty language models.