Welcome! This is my first post in what I hope will become a series where I re-implement machine learning papers, both to practice PyTorch and machine learning fundamentals and to gain a deep understanding of the core papers that have shaped the field of machine learning as we know it today!

As this is my first time re-implementing a paper, I thought it would be helpful to write a beginner’s-perspective guide / experience report for others like me who want to re-implement papers but don’t know where to start. I hope this guide serves as a gentle introduction to ViT and sets you up to re-implement other papers in the future with ease! Come join me on this journey of replicating ML papers!

In recent years, the Transformer architecture has revolutionized natural language processing (NLP). With the introduction of the Vision Transformer (ViT), the same architecture began making waves in the field of computer vision. ViT marked a paradigm shift from convolutional neural networks (CNNs) to Transformer-based models for image classification and other vision tasks. Some well-known examples include the DEtection TRansformer (DETR) for object detection, similar to YOLO, and Segment Anything Model (SAM) 2 for zero-shot image segmentation, both of which build Transformers into their architectures, showing how integral this approach has become in modern computer vision research!

ViT vs. CNN Paradigm

Previously, Convolutional Neural Networks (CNNs) were the de facto standard for computer vision tasks, excelling at efficiently capturing spatial hierarchies within images through convolutional filters. However, they have a “locality” constraint: fixed-size receptive fields, inductive biases, and difficulty with long-range dependencies cause CNNs to prioritize local features. This means they have difficulty connecting distant parts of an image (e.g. the left and right corners) and capturing the “global context” of an image.

ViT is the first example of using a pure Transformer architecture, configured for computer vision tasks, as opposed to a CNN-based one. As a result, it excels at capturing the “global context” of an image, and its self-attention parallelizes well, giving better performance and efficiency than CNNs when enough training data is available.

At a high level, you can think of ViT as taking a bird’s-eye view, whereas CNNs scan over segments of the image individually, capturing finer details like edges and curves.

To help visualize how CNNs behave, here is a great online tool that explains CNNs step by step!

Core elements of ViT

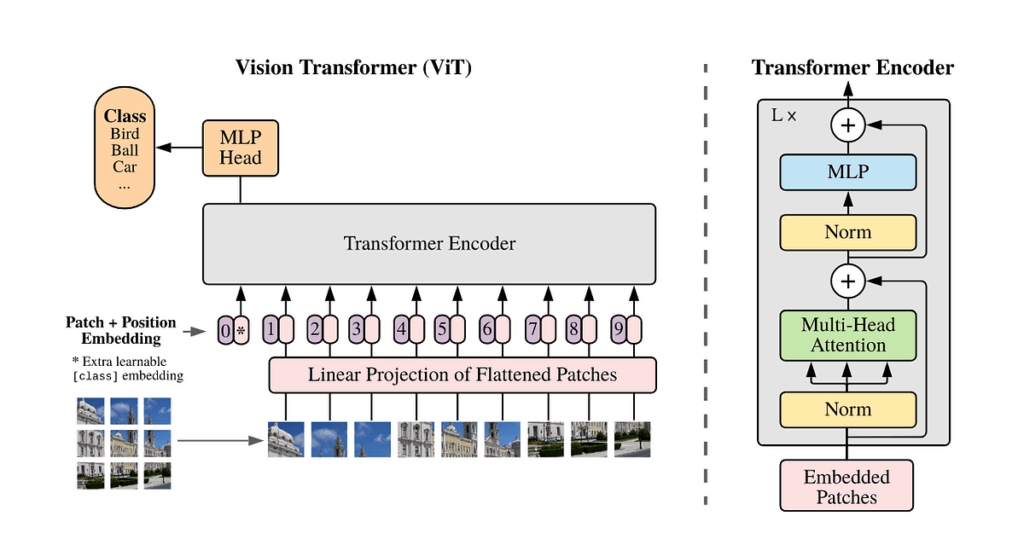

In order to utilize the Transformer architecture, ViT treats the input image as a sequence of “patches”. The main idea is that all patches “attend” to each other, meaning each patch provides context for every other patch. We will go into how “attention” creates these inter-dependencies later in this guide.

*Note: ViT only uses the encoder block of the Transformer to transform the sequence of image patches into contextualized representations.*

Main components (refer to the ViT architecture diagram above):

- Patch Embedding: the input image is split into patches and linearly projected into a learnable embedding, together with a 1D positional encoding. A class (CLS) token is prepended to the sequence to “attend” to all other image patches and capture the “global context” of the image

- Transformer Encoder: a series of encoder blocks consisting of LayerNorm (LN), Multi-head Self-Attention (MSA), and Multi-Layer Perceptron (MLP) layers

- MLP classification head: the final MLP layer that processes the CLS token with “global context” for classification

These components are represented in Eq. 1–4 of the ViT paper [1]:
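For reference, Eq. 1–4 from Section 3.1 of the ViT paper [1] can be written as follows, where L is the number of encoder layers and x_class is the CLS token:

```latex
\begin{aligned}
\mathbf{z}_0 &= [\mathbf{x}_{\text{class}};\ \mathbf{x}_p^1\mathbf{E};\ \mathbf{x}_p^2\mathbf{E};\ \cdots;\ \mathbf{x}_p^N\mathbf{E}] + \mathbf{E}_{pos},
  \quad \mathbf{E} \in \mathbb{R}^{(P^2 \cdot C) \times D},\ \mathbf{E}_{pos} \in \mathbb{R}^{(N+1) \times D} && (1) \\
\mathbf{z}'_\ell &= \mathrm{MSA}(\mathrm{LN}(\mathbf{z}_{\ell-1})) + \mathbf{z}_{\ell-1}, \quad \ell = 1 \ldots L && (2) \\
\mathbf{z}_\ell &= \mathrm{MLP}(\mathrm{LN}(\mathbf{z}'_\ell)) + \mathbf{z}'_\ell, \quad \ell = 1 \ldots L && (3) \\
\mathbf{y} &= \mathrm{LN}(\mathbf{z}_L^0) && (4)
\end{aligned}
```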

Don’t worry if the equations look daunting at first! They did to me as well. We will break down the details of each part and equation as we step through the code, so without further ado, let’s create ViT from scratch!

*Note: I mainly used these two resources as references: https://www.learnpytorch.io/08_pytorch_paper_replicating/#9-setting-up-training-code-for-our-vit-model [3] and https://medium.com/correll-lab/building-a-vision-transformer-model-from-scratch-a3054f707cc6 [4]. I’d highly recommend reading them as well!*

There are different variants of ViT with different hyperparameters that increase or decrease the parameter count as a computation vs. accuracy tradeoff. To keep things simple, we will be implementing ViT-Base. More details are in Table 1 of the ViT paper [1].

Dataset + Classification Problem

For background, we will be using a simple toy dataset of 224 x 224 RGB images of pizza, sushi, and steak. Our input therefore has shape (3, 224, 224), following the format (# of input channels, height, width).

Hyperparameters

    IMG_SIZE = 224
    BATCH_SIZE = 32  # 4096 in the paper
    HEIGHT, WIDTH = 224, 224  # (H, W)
    IN_CHANNELS = 3  # (C)
    PATCH_SIZE = 16  # (P)
    NUM_OF_PATCHES = (HEIGHT * WIDTH) // PATCH_SIZE**2  # (N)
    EMBEDDING_SIZE = PATCH_SIZE**2 * IN_CHANNELS  # (D)
    # (N, 768) -> (N, P**2 * C)
    MLP_SIZE = 3072
    HEADS = 12
    NUM_OF_ENCODER_LAYERS = 12
    MLP_DROPOUT = 0.1
    EMBEDDING_DROPOUT = 0.1

    # Training
    EPOCHS = 10  # 300 on ImageNet-1K
    LR = 3e-3  # LR from Table 3 for ViT-* ImageNet-1k
    BETAS = (0.9, 0.999)  # default Adam values, also mentioned in ViT paper Section 4.1 (Training & Fine-tuning)
    WEIGHT_DECAY = 0.3  # from ViT paper Section 4.1 (Training & Fine-tuning) and Table 3 for ViT-* ImageNet-1k

Since we don’t have the same computational resources as Google, we cannot replicate the ViT training to a tee. As a result, we reduce the epochs to 10 and the batch size to 32 images.

- E is the patch and position embedding weight matrices
- N is the # of patches
- D is the # of input dims to the encoder (E is the linear projection to D)
- P is the patch dim. and C is the # of input channels

*Forewarning: this part is the hardest to understand and implement, at least for me, but on the bright side, the following steps are much easier to implement!*

Patch Embedding

First, we will “patchify” the image.

    import matplotlib.pyplot as plt

    permuted_image = image.permute(1, 2, 0)  # (H, W, C) for matplotlib

    # Create a grid of subplots, one per patch
    fig, axs = plt.subplots(nrows=IMG_SIZE // PATCH_SIZE,
                            ncols=IMG_SIZE // PATCH_SIZE)
    for i, patch_height in enumerate(range(0, HEIGHT, PATCH_SIZE)):
        for j, patch_width in enumerate(range(0, WIDTH, PATCH_SIZE)):
            axs[i, j].imshow(permuted_image[patch_height:patch_height+PATCH_SIZE,
                                            patch_width:patch_width+PATCH_SIZE,
                                            :])
            axs[i, j].axis('off')
            # print(HEIGHT, PATCH_SIZE, i, j)

Then, we linearly project each patch into an embedding dimension D, which is fancy talk for taking a patch and its channels’ pixels and converting them to the embedding dimension via a learnable transformation. Intuitively, it’s as if we flatten all of the patch and channel pixels and feed them through an MLP layer that outputs a new vector of D dimensions.

In our case, we are going to merely flatten the 2D patches:
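In the paper’s notation (Section 3.1 of [1]), this reshaping can be written as below, with the numbers worked through for our hyperparameters:

```latex
\mathbf{x} \in \mathbb{R}^{H \times W \times C}
\;\longrightarrow\;
\mathbf{x}_p \in \mathbb{R}^{N \times (P^2 \cdot C)},
\qquad N = \frac{HW}{P^2}

% With H = W = 224, P = 16, C = 3:
N = \frac{224 \cdot 224}{16^2} = 196,
\qquad P^2 \cdot C = 16^2 \cdot 3 = 768
```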

Where N is the # of patches and D is the embedding dimension. H*W / P² represents dividing the image into patches, and P² * C represents the # of pixel values per patch.

A simple way to implement the linear projection is by using a Conv2d layer. Using a kernel size and stride equal to the patch size automatically divides the image into patches.

    linear_projection = nn.Conv2d(in_channels=IN_CHANNELS,
                                  out_channels=EMBEDDING_SIZE,
                                  kernel_size=PATCH_SIZE,
                                  stride=PATCH_SIZE)  # output: (D, H/P, W/P)

Then, we flatten the 2D grid of patches.

    flatten = nn.Flatten(start_dim=2, end_dim=3)  # (D, H/P, W/P) -> (D, N)

Lastly, we permute the dimensions to get the (N, D) sequence of embedding vectors.

    embedding = embedding.permute(0, 2, 1)  # (D, N) -> (N, D)

The tensor operations are quite difficult to grasp at first, so definitely spend some time understanding each step. It helped me to ground myself and think, “at the end of the day, it’s just a normal feed-forward neural network.” Using Conv2d is also a more compact way to perform this step than creating the patches by slicing.

Now we have gone from (3, 224, 224) to (196, 768) (196 patches and an embedding dimension of 768, derived from the earlier linear projection equation).
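To convince yourself that the Conv2d trick really is a per-patch linear projection, here is a minimal sketch that compares it with explicitly extracting the patches and multiplying them by the same weights. Using `torch.nn.functional.unfold` for the explicit route and the variable names below are my own choices for illustration; they are not taken from the original notebook.

```python
import torch
from torch import nn

torch.manual_seed(0)
P, C, D = 16, 3, 768  # patch size, input channels, embedding size
conv = nn.Conv2d(C, D, kernel_size=P, stride=P)
image = torch.randn(1, C, 224, 224)  # random stand-in for a batch of one image

# Route 1: Conv2d -> flatten the patch grid -> move the patch axis forward
tokens_conv = conv(image).flatten(2).permute(0, 2, 1)  # (1, 196, 768)

# Route 2: cut out the patches explicitly, then apply the same weights as a matrix multiply
patches = nn.functional.unfold(image, kernel_size=P, stride=P)  # (1, C*P*P, 196)
patches = patches.permute(0, 2, 1)                              # (1, 196, C*P*P)
weight = conv.weight.reshape(D, -1)                             # (D, C*P*P)
tokens_linear = patches @ weight.T + conv.bias                  # (1, 196, 768)

print(tokens_conv.shape)
print(torch.allclose(tokens_conv, tokens_linear, atol=1e-4))
```

Both routes give one 768-dimensional vector per patch, so the convolution is only a convenient way to do the slicing and the projection in one call.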

CLS Token

We simply prepend the class embedding vector to the sequence. Now, we have (197, 768) for our sequence of patch embedding vectors.

embedding_and_class_token = torch.concat((class_token, embedding), dim=1) # (N+1, D)

Positional Embedding

Then, we add a learnable positional encoding to the sequence. There are many ways to implement this, and it is an active research topic. For simplicity, we use a learnable, randomly initialized tensor.

positional_embedding = torch.rand(1, NUM_OF_PATCHES + 1, EMBEDDING_SIZE)

patch_embedding = embedding_and_class_token + positional_embedding # (N+1, D)
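The two steps above can be sketched with a batch dimension to show how the shapes line up. This is a minimal sketch: the batch size of 4 and the random `patch_tokens` tensor are placeholders I made up to stand in for the projected patches.

```python
import torch
from torch import nn

B, N, D = 4, 196, 768  # batch size is an arbitrary choice for this sketch
patch_tokens = torch.randn(B, N, D)  # stand-in for the linearly projected patches

class_token = nn.Parameter(torch.randn(1, 1, D))
positional_embedding = nn.Parameter(torch.rand(1, N + 1, D))

# expand() repeats the single class token across the batch without copying memory
tokens = torch.cat((class_token.expand(B, -1, -1), patch_tokens), dim=1)  # (B, N+1, D)
tokens = tokens + positional_embedding  # broadcast over the batch dimension

print(tokens.shape)  # torch.Size([4, 197, 768])
```

Wrapping both tensors in `nn.Parameter` is what makes them learnable once they live inside an `nn.Module`.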

Patch Embedding Layer

Putting it all together, we can create a Patch Embedding layer, which we will combine with the other main components in our full ViT implementation.

    class PatchEmbedding(nn.Module):
        """Patch Embedding layer.

        1. Linearly projects patches to the embedding space
        2. Prepends the class token
        3. Adds the positional embedding
        """

        def __init__(self, HEIGHT, WIDTH, IN_CHANNELS, PATCH_SIZE):
            super().__init__()
            assert HEIGHT % PATCH_SIZE == 0 and WIDTH % PATCH_SIZE == 0, "Patch size doesn't evenly divide image dims"
            self.num_of_patches = (HEIGHT * WIDTH) // PATCH_SIZE**2  # N
            self.embedding_size = PATCH_SIZE**2 * IN_CHANNELS  # D
            self.linear_projection = nn.Conv2d(in_channels=IN_CHANNELS,
                                               out_channels=self.embedding_size,
                                               kernel_size=PATCH_SIZE,  # P
                                               stride=PATCH_SIZE)  # P
            self.flatten = nn.Flatten(start_dim=2, end_dim=3)
            # learnable tensors must be nn.Parameter attributes set in the constructor to be tracked in the summary
            self.class_token = nn.Parameter(torch.randn(1, 1, self.embedding_size),
                                            requires_grad=True)
            self.positional_embedding = nn.Parameter(torch.rand(1, self.num_of_patches + 1, self.embedding_size),
                                                     requires_grad=True)

        def forward(self, x):  # (B, C, H, W)
            B = x.shape[0]
            # expand the learnable class token across the batch
            class_token = self.class_token.expand(B, -1, -1)
            # linear projection
            x = self.linear_projection(x)  # B, D, H/P, W/P
            x = self.flatten(x)  # B, D, N
            x = x.permute(0, 2, 1)  # B, N, D
            # prepend class token
            x = torch.concat((class_token, x), dim=1)  # B, N + 1, D
            # add positional embedding (addition is broadcast across the batch dimension)
            x = x + self.positional_embedding  # B, N + 1, D
            return x  # B, N + 1, D

The MSA block is arguably the most important part of ViT, as it makes the image patches “attention”-aware. With all the patches attending to each other, the CLS token “attends” to all other patches to gain global context.

The main parts include:

- LN: layer norm normalizes across the features of a single data point, unlike batch norm, enabling parallelization (more in the Layer Norm paper [5])

    # LN
    ln = nn.LayerNorm(normalized_shape=EMBEDDING_SIZE)
    ln_patch_embedding = ln(patch_embedding)

- MSA: the multi-head self-attention layer runs attention in parallel across multiple “heads” (more details in the original Transformer paper [6])

    # MSA (batch_first=True because our tensors are shaped (B, N+1, D))
    msa = nn.MultiheadAttention(EMBEDDING_SIZE, HEADS, dropout=0, batch_first=True)
    msa_patch_embedding, _ = msa(ln_patch_embedding, ln_patch_embedding, ln_patch_embedding)  # Q, K, V are the same for self-attention

- z'_l: the output of the MSA layer

- z_(l-1): the output from the previous layer, added back as a residual connection to help with gradient flow
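To make “attending” a bit more concrete, here is a minimal single-head sketch of the scaled dot-product attention from the Transformer paper [6]. It is a simplification and not what `nn.MultiheadAttention` does internally line by line: the random projection matrices are hypothetical stand-ins for learned weights, and I feed in 64-dimensional tokens directly (64 = 768 / 12, the per-head size in ViT-Base) instead of splitting a 768-dimensional embedding across 12 heads.

```python
import math
import torch

torch.manual_seed(0)
num_tokens, dim = 197, 64  # CLS token + 196 patches, per-head dimension
x = torch.randn(num_tokens, dim)  # stand-in for the normalized token sequence

# Hypothetical projection matrices; in a real layer these are learned
w_q, w_k, w_v = (torch.randn(dim, dim) / math.sqrt(dim) for _ in range(3))
q, k, v = x @ w_q, x @ w_k, x @ w_v

scores = q @ k.T / math.sqrt(dim)        # (197, 197): every token scored against every token
weights = torch.softmax(scores, dim=-1)  # each row is a distribution that sums to 1
attended = weights @ v                   # (197, 64): each token becomes a weighted mix of all values

cls_attention = weights[0]  # how strongly the CLS token (index 0) attends to each token
print(attended.shape, cls_attention.sum())
```

Row 0 of `weights` is the part that lets the CLS token collect information from every patch, which is where the “global context” comes from.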

Now combining each part together!

    class MSA_Block(nn.Module):
        def __init__(self, EMBEDDING_SIZE, NUM_OF_HEADS, DROPOUT):
            super().__init__()
            self.ln = nn.LayerNorm(normalized_shape=EMBEDDING_SIZE)
            self.msa = nn.MultiheadAttention(EMBEDDING_SIZE, NUM_OF_HEADS, dropout=DROPOUT, batch_first=True)

        def forward(self, x):
            x = self.ln(x)
            attn_output, _ = self.msa(x, x, x)  # Q, K, V are all x for self-attention
            return attn_output  # residual is added in the Encoder block

The MLP block is similar to the MSA block but with an MLP instead. It has a single hidden layer, with its size controlled by the MLP_SIZE hyperparameter, and a GELU non-linear activation function (Section 3.1 of the ViT paper [1]) between the two Linear layers. The paper also applies dropout after each Linear layer (Appendix B of the ViT paper [1]).

    class MLP_Block(nn.Module):
        def __init__(self, EMBEDDING_SIZE, MLP_SIZE, MLP_DROPOUT):
            super().__init__()
            self.ln = nn.LayerNorm(EMBEDDING_SIZE)
            self.mlp = nn.Sequential(
                nn.Linear(EMBEDDING_SIZE, MLP_SIZE),
                nn.GELU(),
                nn.Dropout(MLP_DROPOUT),
                nn.Linear(MLP_SIZE, EMBEDDING_SIZE),
                nn.Dropout(MLP_DROPOUT)
            )

        def forward(self, x):
            return self.mlp(self.ln(x))  # residual is added in the Encoder block

A key feature of both the MLP and MSA blocks is that the output stays within the embedding dimension. Since the input and output dimensions are the same, we can stack several of these “encoder” blocks together, repeatedly applying self-attention hierarchically, which is important for ViT to understand the long-range dependencies within the image.

I briefly mentioned that different variants of ViT (ViT-Base, ViT-Large, ViT-Huge) have more or fewer parameters as a tradeoff between computation and accuracy. Specifically, hyperparameters such as MLP_SIZE, NUM_OF_LAYERS (num. of encoder blocks), NUM_OF_HEADS (in MSA), and EMBEDDING_SIZE differ between the models. More on this in Table 1 of the ViT paper [1].

Combining the MSA and MLP blocks, we get…

    class Transformer_Encoder_Block(nn.Module):
        def __init__(self, EMBEDDING_SIZE, NUM_OF_HEADS, MLP_SIZE, MLP_DROPOUT, ATTN_DROPOUT=0):
            super().__init__()
            # Eq. 2
            self.msa_block = MSA_Block(EMBEDDING_SIZE, NUM_OF_HEADS, ATTN_DROPOUT)
            # Eq. 3
            self.mlp_block = MLP_Block(EMBEDDING_SIZE, MLP_SIZE, MLP_DROPOUT)

        def forward(self, x):
            x = self.msa_block(x) + x  # residual connection around MSA
            x = self.mlp_block(x) + x  # residual connection around MLP
            return x
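As a sanity check on the shapes, PyTorch also ships a built-in `nn.TransformerEncoderLayer` that can be configured as a close counterpart of this block (LayerNorm before each sub-layer, GELU in the MLP). It is not identical to the block above: as far as I can tell, its single `dropout` argument also applies to the attention weights, whereas our block keeps attention dropout separate. Treat this as a rough sketch for comparison, not a drop-in replacement.

```python
import torch
from torch import nn

# Built-in pre-norm encoder layer configured with the ViT-Base sizes used in this post
layer = nn.TransformerEncoderLayer(
    d_model=768, nhead=12, dim_feedforward=3072, dropout=0.1,
    activation="gelu", batch_first=True, norm_first=True,
)
layer.eval()  # turn off dropout so the output is deterministic

x = torch.randn(2, 197, 768)  # (B, N+1, D)
out = layer(x)

# Same shape in and out, which is what lets us stack 12 of these
num_params = sum(p.numel() for p in layer.parameters())
print(out.shape, num_params)
```

Because the output shape matches the input shape, the same layer can be repeated NUM_OF_ENCODER_LAYERS times without any glue code in between.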

Now that our patches have “attended” to one another, we extract the CLS token z_L^0, applying Layer Norm once more before passing it through an MLP classifier head. The output size of this classifier head is a hyperparameter that can be configured to match the # of target classes in our dataset.

This layer’s weights are the only ones left unfrozen when fine-tuning ViT for downstream tasks with transfer learning. More on that later.

    # classifier head (Eq. 4)
    self.classifier = nn.Sequential(
        nn.LayerNorm(normalized_shape=EMBEDDING_SIZE),
        nn.Linear(EMBEDDING_SIZE, NUM_OF_CLASSES)
    )

Combining everything from Eq. 1–4, we can now build the full ViT model! We have all of the building blocks, so we just need to put them together. Super easy!

    class MyViT(nn.Module):
        def __init__(self, HEIGHT, WIDTH, IN_CHANNELS, EMBEDDING_DROPOUT, PATCH_SIZE, NUM_OF_HEADS,
                     NUM_OF_ENCODER_LAYERS, MLP_SIZE, MLP_DROPOUT, NUM_OF_CLASSES, ATTN_DROPOUT=0):
            super().__init__()
            self.NUM_OF_PATCHES = (HEIGHT * WIDTH) // PATCH_SIZE**2  # (N)
            self.EMBEDDING_SIZE = PATCH_SIZE**2 * IN_CHANNELS  # (D)
            assert HEIGHT % PATCH_SIZE == 0 and WIDTH % PATCH_SIZE == 0, "Patch size doesn't evenly divide image dims"
            # Eq. 1
            self.patch_embedding = PatchEmbedding(HEIGHT, WIDTH, IN_CHANNELS, PATCH_SIZE)
            self.embedding_dropout = nn.Dropout(EMBEDDING_DROPOUT)
            # Eq. 2 and 3 (use the size computed above rather than a global)
            self.encoder = nn.Sequential(
                *[Transformer_Encoder_Block(self.EMBEDDING_SIZE, NUM_OF_HEADS, MLP_SIZE, MLP_DROPOUT, ATTN_DROPOUT)
                  for _ in range(NUM_OF_ENCODER_LAYERS)]
            )
            # classifier head (Eq. 4)
            self.classifier = nn.Sequential(
                nn.LayerNorm(normalized_shape=self.EMBEDDING_SIZE),
                nn.Linear(self.EMBEDDING_SIZE, NUM_OF_CLASSES)
            )

        def forward(self, x):  # B, C, H, W
            x = self.patch_embedding(x)  # B, N+1, D
            x = self.embedding_dropout(x)
            x = self.encoder(x)  # B, N+1, D
            x = self.classifier(x[:, 0])  # CLS token (B, D) -> (B, NUM_OF_CLASSES)
            return x

The only key differences are self.embedding_dropout, which is mentioned in Appendix B.1 of the ViT paper [1], and the series of Encoder blocks, parameterized by NUM_OF_ENCODER_LAYERS, that form the full ViT Encoder.

Going back to our original toy dataset of pizza, sushi, and steak, let’s test our new ViT model on it!

First, let’s set up our optimizer and loss function. According to the ViT paper [1], they used an Adam optimizer with a learning rate of 0.003, default beta values, and a weight decay of 0.3 (on ViT-* for ImageNet-1K).

    optimizer = torch.optim.Adam(params=vit.parameters(),
                                 lr=LR,
                                 betas=BETAS,
                                 weight_decay=WEIGHT_DECAY)

    # for multi-class classification
    loss_fn = torch.nn.CrossEntropyLoss()

Running for 10 epochs, we get these results.

Yikes! The accuracy is pretty bad. Why is this happening?

Google has already extensively pre-trained ViT models on large datasets like ImageNet, and it is not computationally feasible for us to completely replicate their paper’s experiments.

The paper details that ViT and Transformers are “data-hungry”: they need large amounts of training data to achieve performance that surpasses CNN-based models. Despite this large data requirement, Transformers make up for it with parallelization, which enables faster training and inference on GPU/TPU chips.

Therefore, to use ViT effectively, we need to use transfer learning and fine-tune ViT on our dataset. This essentially means we will take Google’s pre-trained weights for ViT, freeze all of them except for the classifier head, and then train or “fine-tune” on our toy dataset. This lets us preserve the fundamental attention mechanisms and dependencies already learned between patches and adapt the “higher-level” weights to our specific problem. It also lets us fine-tune our ViT using less computation while achieving higher accuracy! A win-win!

    # 1. Get pretrained weights for ViT-Base
    pretrained_vit_weights = torchvision.models.ViT_B_16_Weights.DEFAULT

    # 2. Set up a ViT model instance with pretrained weights
    pretrained_vit = torchvision.models.vit_b_16(weights=pretrained_vit_weights).to(device)

    # 3. Freeze the base parameters
    for parameter in pretrained_vit.parameters():
        parameter.requires_grad = False

    # 4. Replace the classifier head (these weights are unfrozen and trainable)
    pretrained_vit.heads = nn.Linear(in_features=768, out_features=len(class_names)).to(device)
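If you want to double-check that freezing worked, you can list which parameters still require gradients. The sketch below uses a tiny made-up model instead of the real pretrained ViT so that it runs without downloading any weights; the `backbone` and `heads` layers, their sizes, and the learning rate are all placeholders of mine.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pretrained model: a "backbone" plus a "heads" layer
model = nn.Sequential()
model.add_module("backbone", nn.Linear(768, 768))
model.add_module("heads", nn.Linear(768, 1000))

# Freeze every existing parameter
for parameter in model.parameters():
    parameter.requires_grad = False

# Swap in a fresh head for our 3 classes; new layers are trainable by default
model.heads = nn.Linear(768, 3)

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['heads.weight', 'heads.bias']

# Only hand the trainable parameters to the optimizer (lr is an arbitrary placeholder)
optimizer = torch.optim.Adam((p for p in model.parameters() if p.requires_grad), lr=1e-3)
```

The same `named_parameters()` check works on `pretrained_vit` after step 4 above.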

Our results speak for themselves.

We were able to achieve higher accuracy by fine-tuning the pretrained model instead of training the whole ViT model from scratch.

Nice, we were able to build a ViT model from scratch and fine-tune a pretrained one on our dataset, achieving high accuracy! Although we used pre-trained weights rather than our original model for the final training and evaluation due to computation constraints, the process of re-implementing the ViT paper taught us the key ideas behind this SOTA model’s design, giving us a deeper understanding of core modern ML concepts.

There is no better way to learn than by doing. With that, I hope you enjoyed walking through this guide with me and are inspired to re-implement more papers in the future!

Here is also the full annotated notebook with the ViT code: https://github.com/tyleryy/Models-from-Scratch/blob/main/ViT/ViT_from_scratch.ipynb

[1] Dosovitskiy, Alexey, et al. “An image is worth 16x16 words: Transformers for image recognition at scale.” arXiv preprint arXiv:2010.11929 (2020).

[2] Alzubaidi, L., Zhang, J., Humaidi, A.J. et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data 8, 53 (2021). https://doi.org/10.1186/s40537-021-00444-8

[3] https://www.learnpytorch.io/08_pytorch_paper_replicating/#9-setting-up-training-code-for-our-vit-model

[4] https://medium.com/correll-lab/building-a-vision-transformer-model-from-scratch-a3054f707cc6

[5] Ba, Jimmy Lei, Jamie Ryan Kiros, and Geoffrey E. Hinton. “Layer normalization.” arXiv preprint arXiv:1607.06450 (2016).

[6] Vaswani, Ashish, et al. “Attention is all you need.” Advances in Neural Information Processing Systems 30 (2017).