When it comes to credit scoring, the stakes are high. Predicting whether a customer will repay a loan isn't just a fun classification problem; it's a business-critical task that affects revenue, customer experience, and risk management.

In this guide, we'll compare three powerful gradient boosting models:

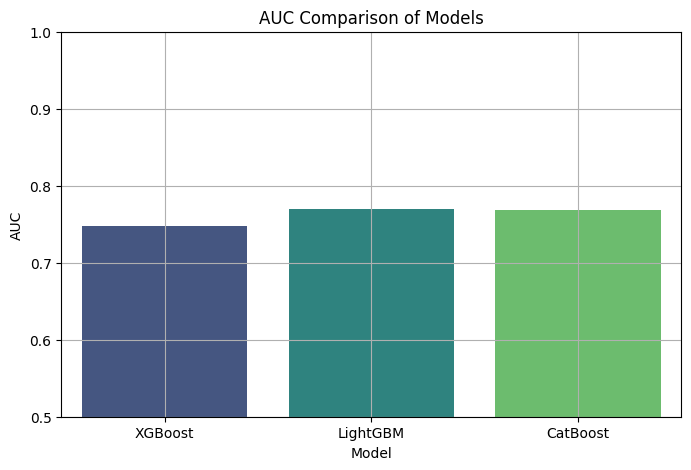

We'll use a real-world credit dataset, perform hyperparameter tuning, handle class imbalance with SMOTE, and evaluate the models using AUC, recall, precision, and F1-score.
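To make the four evaluation metrics concrete, here is a minimal pure-Python sketch of what each one measures. It assumes label 1 marks the class of interest (for example, a risky borrower) and that the model outputs a probability for that class; the 0.5 decision threshold and the toy labels and scores are illustrative assumptions. In practice you would likely call the equivalents in `sklearn.metrics` (`precision_score`, `recall_score`, `f1_score`, `roc_auc_score`).

```python
# Pure-Python sketch of precision, recall, F1 and ROC AUC.
# Assumption: label 1 is the positive class; scores are probabilities for class 1.
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Precision, recall and F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of flagged cases, share truly positive
    recall = tp / (tp + fn) if tp + fn else 0.0     # of true positives, share caught
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC = probability a random positive scores above a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]
    scores = [0.9, 0.4, 0.35, 0.8, 0.1, 0.6, 0.7, 0.2]
    y_pred = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 threshold is an assumption
    print(precision_recall_f1(y_true, y_pred))  # → (0.75, 0.75, 0.75)
    print(roc_auc(y_true, scores))               # → 0.875
```

Precision, recall, and F1 all depend on the chosen threshold, while AUC ranks scores and is threshold-independent, which makes it a reasonable first yardstick for comparing models before picking a cut-off.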

Let's jump in. (Code included)

We'll use the German Credit Data from the UCI Machine Learning Repository. It has been cleaned and hosted as a CSV by Selva Prabhakaran.

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from imblearn.over_sampling import SMOTE

url = "https://raw.githubusercontent.com/selva86/datasets/master/GermanCredit.csv"
df = pd.read_csv(url)

# One-hot encode the categorical columns
df_encoded = pd.get_dummies(df, drop_first=True)

# Clean column names (some models are picky): XGBoost, for example,
# rejects feature names containing '[', ']' or '<'
df_encoded.columns = [
    "".join(c if c not in ["[", "]", "<"] else "_" for c in col)
    for col in df_encoded.columns
]

X = df_encoded.drop("credit_risk", axis=1)
y = df_encoded["credit_risk"]

X_train…
```
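The loading code imports `SMOTE` from `imblearn.over_sampling`. To show what it does to the minority class, here is a simplified NumPy sketch of SMOTE's core step: each synthetic row is placed at a random point on the line segment between a minority sample and one of its k nearest minority neighbours. This is an illustrative reimplementation, not imblearn's code; the function name `smote_sketch` and its parameters are hypothetical. With imblearn the usual call is `SMOTE(random_state=...).fit_resample(X_train, y_train)`, applied to the training split only so that synthetic rows never leak into the test set.

```python
# Simplified SMOTE sketch (hypothetical helper, not imblearn's implementation).
import numpy as np


def smote_sketch(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate n_new synthetic rows from a minority-class matrix (n_samples x n_features)."""
    rng = np.random.default_rng(seed)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    # Pairwise Euclidean distances between minority samples
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # indices of k nearest per row

    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        a = rng.integers(n)                 # pick a random minority sample
        b = neighbours[a, rng.integers(k)]  # pick one of its k nearest neighbours
        gap = rng.random()                  # uniform in [0, 1)
        synthetic[i] = X_min[a] + gap * (X_min[b] - X_min[a])
    return synthetic


# Usage: four minority points at the corners of the unit square
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new_rows = smote_sketch(X_min, n_new=6, k=2)
print(new_rows.shape)  # → (6, 2)
```

Because SMOTE interpolates, applying it to one-hot dummy columns like those produced by `get_dummies` yields fractional values; imblearn also provides `SMOTENC` for data that mixes categorical and numeric features.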